Abstract

Image features are widely used in computer vision applications, and they need to be robust to scene changes and image transformations. Designing and comparing feature descriptors requires the ability to evaluate their performance under those transformations: we want to know how robust the descriptors are to changes in lighting, scene, or viewing conditions. This requires ground truth data for different scenes viewed under controlled changes in camera or lighting conditions, and such data is very difficult to gather in a real-world setting. We propose using a photorealistic virtual world to gain complete and repeatable control of the environment for evaluating image features. We then use this virtual world to study how controlled changes in viewpoint and illumination affect descriptor performance. We also study augmenting the descriptors with depth information to improve performance.

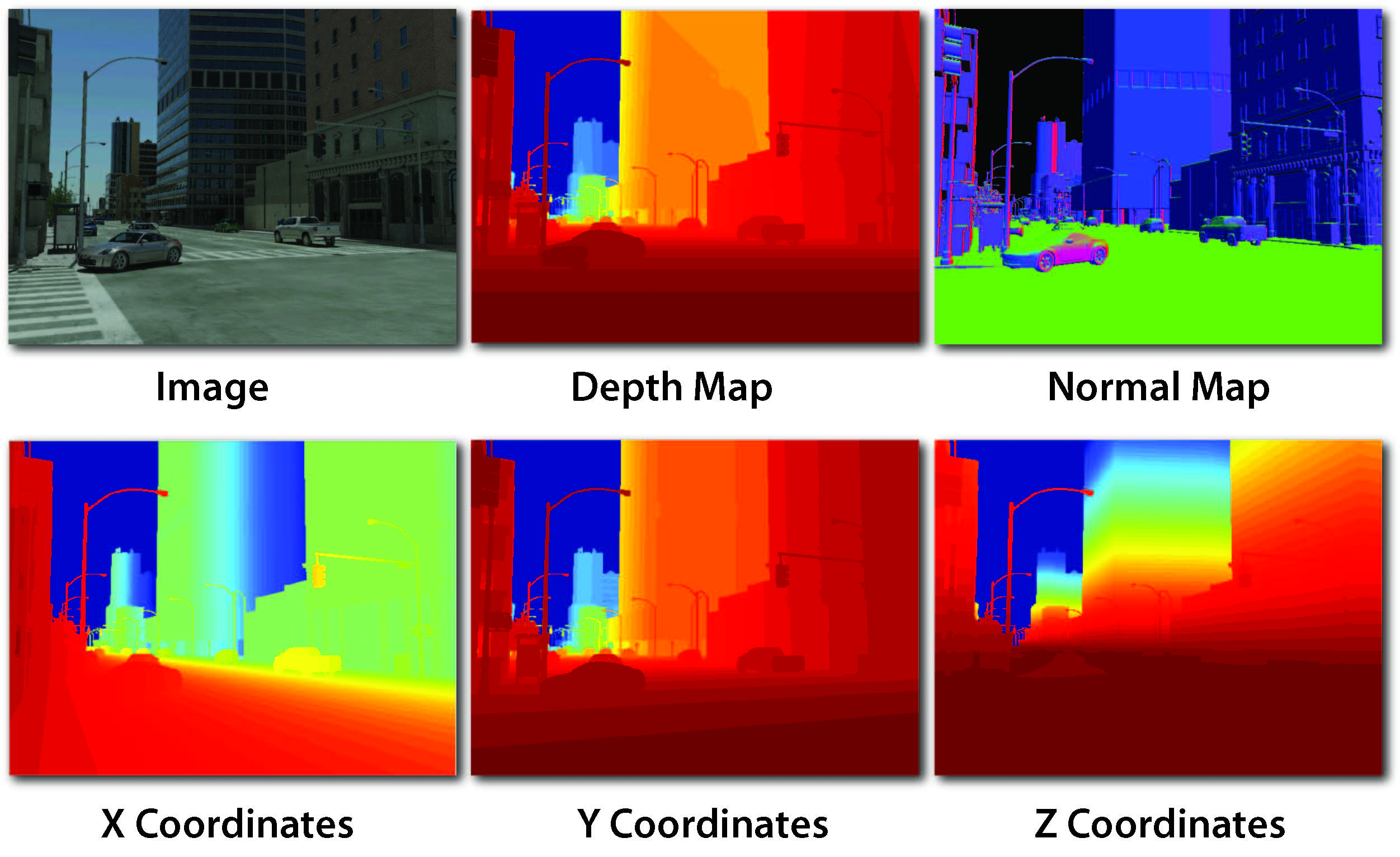

Virtual City Database

The database contains 3000 images of the virtual city, plus ground truth data for each camera location and orientation. All images are PNG files at 640x480 resolution. The images provided here are for research purposes only.

The download contains a Matlab demo (demo.m) for loading and displaying the data for a given scene.
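After extracting the archive, you may want a quick check that every image matches the stated format (PNG, 640x480) without opening MATLAB. The Python sketch below is a hypothetical helper and is not part of the released demo.m. It reads only the 8-byte PNG signature and the IHDR header at the start of each file. It also assumes the images sit somewhere under a directory you pass in, because the archive's folder layout is not described on this page.

```python
import struct
from pathlib import Path

# Standard 8-byte signature that begins every PNG file.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) read from the IHDR chunk of PNG header bytes."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    # Bytes 8-11 hold the chunk length, bytes 12-15 the chunk type (must be IHDR).
    if data[12:16] != b"IHDR":
        raise ValueError("first chunk is not IHDR")
    # Width and height are big-endian unsigned 32-bit integers at bytes 16-23.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def find_mismatched(root: str, expected: tuple[int, int] = (640, 480)) -> list[Path]:
    """List PNG files under `root` (hypothetical path) that are unreadable or not `expected` size."""
    bad = []
    for path in sorted(Path(root).rglob("*.png")):
        with open(path, "rb") as f:
            header = f.read(24)  # signature + IHDR length/type + width/height
        try:
            if png_size(header) != expected:
                bad.append(path)
        except ValueError:
            bad.append(path)
    return bad
```

For example, `find_mismatched("path/to/extracted/archive")` should return an empty list if every image is a 640x480 PNG. The path is a placeholder. Reading only the first 24 bytes keeps the check fast across a 2.0 GB download.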

Download (tar file 2.0GB)

Paper

Biliana Kaneva, Antonio Torralba, William Freeman. Evaluation of Image Features Using a Photorealistic Virtual World. ICCV, 2011.

BibTeX

@InProceedings{Kaneva_2011,
  author = {Biliana Kaneva and Antonio Torralba and William T. Freeman},
  title = {Evaluation of Image Features Using a Photorealistic Virtual World},
  booktitle = {IEEE International Conference on Computer Vision},
  year = {2011}
}

Acknowledgments

Funding for this research was provided by Shell Research, Quanta Computer, ONR-MURI Grant N00014-06-1-0734, CAREER Award No. 0747120, ONR MURI N000141010933 and by gifts from Microsoft, Adobe, and Google.