Human-Assisted Motion Annotation

Ce Liu¹, William T. Freeman¹, Edward H. Adelson¹, Yair Weiss¹˒²

¹CSAIL, MIT   ²The Hebrew University of Jerusalem

{celiu,billf,adelson}@csail.mit.edu, yweiss@cs.huji.ac.il

To be presented at CVPR 2008 [pdf]

(a) A frame of a video sequence
(b) User-aided layer segmentation
(c) User-annotated motion
(d) Output of a flow algorithm [2]

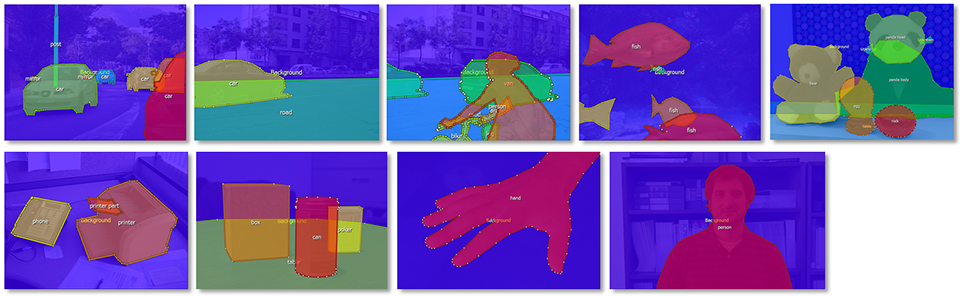

Figure 1. We designed a system to allow the user to specify layer configurations and motion hints (b). Our system uses these hints to calculate a dense flow field for each layer. We show that the flow (c) is repeatable and accurate. (d): The output of a representative optical flow algorithm [2], trained on the Yosemite sequence, shows many differences from the labeled ground truth for this and other realistic sequences we have labeled. This indicates the value of our database for training and evaluating optical flow algorithms.
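The caption notes that the system computes a dense flow field for each layer. One plausible way to turn per-layer fields into a single frame-level field is to let the layer segmentation pick, at every pixel, the flow of the layer that owns that pixel. The NumPy sketch below assumes a hypothetical encoding (an integer label map plus one full-size (u, v) array per layer) and a hypothetical function name, compose_layer_flows; it is not taken from the released tools.

```python
import numpy as np

def compose_layer_flows(labels, layer_flows):
    """Merge per-layer dense flow fields into one frame-level flow field.

    Hypothetical encoding, assumed for illustration only:
    labels:      (H, W) integer array; labels[y, x] is the index of the layer
                 that owns pixel (x, y) in the reference frame.
    layer_flows: list of (H, W, 2) float arrays; layer_flows[k] holds the
                 dense (u, v) flow computed for layer k over the whole grid.
    Returns an (H, W, 2) array taking each pixel's flow from its own layer.
    """
    labels = np.asarray(labels)
    stacked = np.stack(layer_flows, axis=0)  # shape (K, H, W, 2)
    if labels.min() < 0 or labels.max() >= stacked.shape[0]:
        raise ValueError("layer label out of range")
    h, w = labels.shape
    rows, cols = np.indices((h, w))
    # Advanced indexing selects stacked[labels[y, x], y, x, :] for every pixel.
    return stacked[labels, rows, cols]
```

In this sketch every layer's flow is defined on the full image grid, so the label map alone decides which layer's motion is reported at each pixel.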


Abstract

Obtaining ground-truth motion for arbitrary, real-world video sequences is a challenging but important task for both algorithm evaluation and model design. Existing ground-truth databases are either synthetic, such as the Yosemite sequence, or limited to indoor, experimental setups, such as the database developed in [1]. We propose a human-in-the-loop methodology to create a ground-truth motion database for videos taken with ordinary cameras in both indoor and outdoor scenes, exploiting the fact that human beings are experts at segmenting objects and at inspecting the match between two frames. We designed an interactive computer vision system that allows a user to annotate motion efficiently. We cross-validate our methodology by showing that human-annotated motion is repeatable, consistent across annotators, and close to the ground truth obtained by [1]. Using our system, we collected and annotated 10 indoor and outdoor real-world videos to form a ground-truth motion database. The source code, annotation tool and database are online for public evaluation and benchmarking.
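The abstract validates annotations by comparing flow fields, both between annotators and against the ground truth of [1]. Average endpoint error and average angular error are two standard optical flow metrics for such comparisons; the NumPy sketch below computes both for (H, W, 2) arrays of (u, v) vectors. The function names are illustrative, and the choice of these particular metrics is an assumption rather than the paper's stated protocol.

```python
import numpy as np

def endpoint_error(flow, gt):
    """Average endpoint error: mean Euclidean distance between (u, v) vectors."""
    diff = np.asarray(flow, dtype=np.float64) - np.asarray(gt, dtype=np.float64)
    return float(np.mean(np.sqrt(np.sum(diff ** 2, axis=-1))))

def angular_error(flow, gt):
    """Average angular error in degrees between (u, v, 1) space-time vectors."""
    flow = np.asarray(flow, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    u, v = flow[..., 0], flow[..., 1]
    gu, gv = gt[..., 0], gt[..., 1]
    num = u * gu + v * gv + 1.0
    den = np.sqrt(u ** 2 + v ** 2 + 1.0) * np.sqrt(gu ** 2 + gv ** 2 + 1.0)
    # Clipping guards against arccos of values just outside [-1, 1] from rounding.
    cos = np.clip(num / den, -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))
```

For example, comparing two annotators' flow for the same frame pair with endpoint_error gives the average disagreement in pixels.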

What is motion?

Motion can be defined as the physical movement of pixels or as a human percept. Can we rely on human perception of motion for motion annotation? We hope this "What is motion?" page makes you think about it.

Download the code and database

The layer segmentation and motion annotation tools are distributed separately because many people only need the layer segmentation tool. Both systems were written with Visual Studio 2005 and Qt 4.3 and are released under the GPL. You can download the source code and binaries (compiled and run on Windows Vista) for both systems. I am still writing a detailed readme.

Layer segmentation: LayerAnnotationSource.zip, LayerAnnotationBinary.zip

Motion annotation: MotionGroundTruthSource.zip, MotionGroundTruthBinary.zip

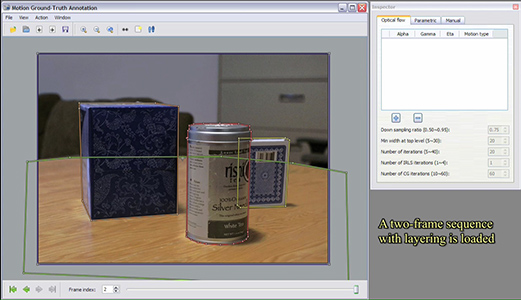

The demos below show how to use the two systems:

Demo: layer labeling

Demo: motion annotation

Here you can download some videos and the annotated flow.

Table (54 MB)

Toy (166 MB)

Hand (249 MB)

Cameramotion (155 MB)

Fish (295 MB)
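This page does not say how the annotated flow is stored. Assuming the files use the widely used Middlebury .flo layout (a float32 magic number 202021.25, then int32 width and height, then row-major float32 values with u and v interleaved per pixel, all little-endian), a reader and writer could look like the sketch below. Check the downloaded files and the readme before relying on this; read_flo and write_flo are hypothetical helpers, not part of the released code.

```python
import numpy as np

FLO_TAG = 202021.25  # magic float stored in the first 4 bytes (assumed format)

def read_flo(path):
    """Read a Middlebury-style .flo file into an (H, W, 2) float32 array."""
    with open(path, "rb") as f:
        tag = np.fromfile(f, dtype="<f4", count=1)
        if tag.size != 1 or tag[0] != FLO_TAG:
            raise ValueError(f"{path}: not a .flo file (bad magic number)")
        w, h = (int(x) for x in np.fromfile(f, dtype="<i4", count=2))
        data = np.fromfile(f, dtype="<f4", count=2 * w * h)
    if data.size != 2 * w * h:
        raise ValueError(f"{path}: truncated flow data")
    # Values are stored row by row, with u and v interleaved for each pixel.
    return data.reshape(h, w, 2)

def write_flo(path, flow):
    """Write an (H, W, 2) flow array in the same assumed .flo layout."""
    flow = np.asarray(flow, dtype="<f4")
    h, w, _ = flow.shape
    with open(path, "wb") as f:
        np.array([FLO_TAG], dtype="<f4").tofile(f)
        np.array([w, h], dtype="<i4").tofile(f)
        flow.tofile(f)  # tofile always writes in C (row-major) order
```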

Here you can download a zip file of the layer segmentations, along with MATLAB files for loading the layer information [download].

Interface