Scene Reconstruction from High Spatio-Angular Resolution Light Fields

Project Members

Changil Kim (Disney Research Zurich)

Henning Zimmer (Disney Research Zurich)

Yael Pritch (Disney Research Zurich)

Alexander Sorkine-Hornung (Disney Research Zurich)

Markus Gross (Disney Research Zurich)

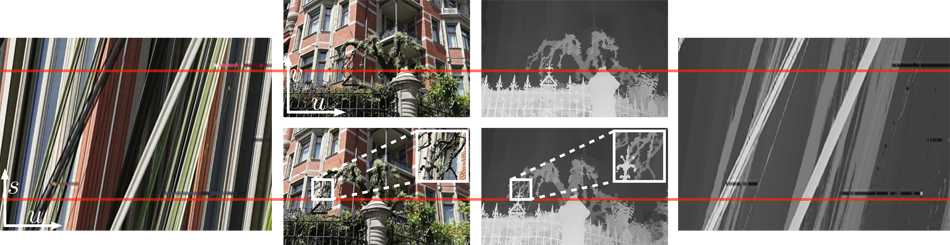

The images on the left show a 2D slice of a 3D input light field, a so-called epipolar-plane image (EPI), and two of the one hundred 21-megapixel images that were used to construct the light field. Our method computes 3D depth information for all visible scene points, illustrated by the depth EPI on the right. From this representation, individual depth maps or segmentation masks for any of the input views can be extracted, as well as other representations such as 3D point clouds. The horizontal red lines connect corresponding scanlines in the images with their respective positions in the EPI.

Abstract

This paper describes a method for scene reconstruction of complex, detailed environments from 3D light fields. Densely sampled light fields on the order of 10^9 light rays allow us to capture the real world in unparalleled detail, but efficiently processing this amount of data to generate an equally detailed reconstruction represents a significant challenge to existing algorithms. We propose an algorithm that leverages coherence in massive light fields by breaking with a number of established practices in image-based reconstruction. Our algorithm first computes reliable depth estimates specifically around object boundaries instead of interior regions, by operating on individual light rays instead of image patches. More homogeneous interior regions are then processed in a fine-to-coarse procedure rather than the standard coarse-to-fine approaches. At no point in our method is any form of global optimization performed. This allows our algorithm to retain precise object contours while still ensuring smooth reconstructions in less detailed areas. While the core reconstruction method handles general unstructured input, we also introduce a sparse representation and a propagation scheme for reliable depth estimates which make our algorithm particularly effective for 3D input, enabling fast and memory-efficient processing of “Gigaray light fields” on a standard GPU. We show dense 3D reconstructions of highly detailed scenes, enabling applications such as automatic segmentation and image-based rendering, and provide an extensive evaluation and comparison to existing image-based reconstruction techniques.

Datasets

This website provides the datasets including all the images and the results used in the paper. Please cite the above paper if you use any part of the images or results provided on the website or in the paper. For questions or feedback please contact Changil Kim at kimc at disneyresearch dot com.

We provide the following as part of the datasets:

- Camera calibration information

- Raw input images we have captured

- Radially undistorted, rectified, and cropped images used to produce the results in the paper

- Depth maps resulting from our reconstruction and propagation algorithm

- Depth maps computed at each available view by the reconstruction algorithm without the propagation applied

Mansion

Focal length f = 50mm = 7800 pixels, camera separation b = 10mm

Raw input images (ZIP, 1.06 GB) — 101 5616×3744 images

Pre-processed images (ZIP, 1.24 GB) — 101 5490×3450 images

Computed depth maps (ZIP, 352.2 MB) — 51 5490×3450 depth maps

Computed depth maps without propagation (ZIP, 331.4 MB) — 51 5490×3450 depth maps

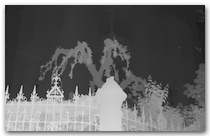

Church

Focal length f = 50mm = 5667 pixels, camera separation b = 10mm

Raw input images (ZIP, 411 MB) — 101 4080×2720 images

Pre-processed images including segmentation masks (ZIP, 503.7 MB) — 101 4007×2622 images

Computed depth maps (ZIP, 100.9 MB) — 51 4007×2622 depth maps

Computed depth maps without propagation (ZIP, 100.2 MB) — 51 4007×2622 depth maps

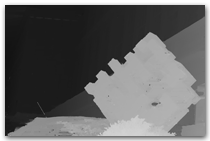

Couch

Focal length f = 50mm = 5667 pixels, camera separation b = 2mm

Raw input images (ZIP, 474.3 MB) — 101 4080×2720 images

Pre-processed images (ZIP, 580.1 MB) — 101 4020×2679 images

Computed depth maps (ZIP, 209 MB) — 51 4020×2679 depth maps

Computed depth maps without propagation (ZIP, 191.6 MB) — 51 4020×2679 depth maps

Bikes

Focal length f = 50mm = 7800 pixels, camera separation b = 5mm

Raw input images (ZIP, 403.4 MB) — 101 5616×3744 images

Pre-processed images (ZIP, 83.9 MB) — 101 2676×1752 images

Computed depth maps (ZIP, 111.5 MB) — 51 2676×1752 depth maps

Computed depth maps without propagation (ZIP, 107.4 MB) — 51 2676×1752 depth maps

Statue

Focal length f = 50mm = 7800 pixels, camera separation b = 5.33mm

Raw input images (ZIP, 1.12 GB) — 151 5616×3744 images

Pre-processed images (ZIP, 212.1 MB) — 151 2622×1718 images

Computed depth maps (ZIP, 62.9 MB) — 51 2622×1718 depth maps

Computed depth maps without propagation (ZIP, 71.4 MB) — 51 2622×1718 depth maps

Please click here (ZIP, 7.6 GB) to download all five datasets including the images and depth maps as a single zipped archive.

Acquisition

All images provided here have been captured using a Canon EOS 5D Mark II DSLR camera and a Canon EF 50mm f/1.4 USM lens, with the exception of the Couch dataset, which was captured with a Canon EF 50mm f/1.2 L USM lens. A Zaber T-LST1500D motorized linear stage was used to drive the camera to shooting positions. The focus and aperture settings vary between datasets, but were kept identical within each dataset. The camera focal length is 50mm and the sensor size is 36×24mm for all datasets. PTLens was used to radially undistort the captured images, and Voodoo Camera Tracker was used to estimate the camera poses for rectification. See the paper for details. Additionally, the exposure variation was compensated additively for the Bikes and Statue datasets.
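The focal lengths in pixels listed for each dataset are consistent with scaling the 50mm focal length by the raw image width over the 36mm sensor width. The short sketch below reproduces that arithmetic; the function name `focal_length_pixels` is hypothetical, and the assumption that the raw image width is the right reference is ours. The page does not state whether the pre-processed images were rescaled.

```python
def focal_length_pixels(focal_mm, sensor_width_mm, image_width_px):
    """Convert a focal length in millimetres to pixels.

    Assumes square pixels and that image_width_px spans the full
    sensor width (i.e. the uncropped raw image).
    """
    return focal_mm * image_width_px / sensor_width_mm


# Raw widths taken from the dataset listings above.
f_wide = focal_length_pixels(50.0, 36.0, 5616)    # Mansion, Bikes, Statue
f_narrow = focal_length_pixels(50.0, 36.0, 4080)  # Church, Couch

print(round(f_wide))    # 7800
print(round(f_narrow))  # 5667
```

Cropping alone does not change the focal length in pixels, which may be why the listed values match the raw image widths.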

File Formats

All images are provided in JPEG format. Depth maps are provided in two different formats. Those in .dmap files contain the dense disparity values d as defined in Equation 1 of the paper. Each file starts with two 32-bit unsigned integers giving the width and the height of the depth map, in that order. The rest of the file is an uncompressed sequence of width × height 32-bit floats in row-major order. All 4-byte words are little-endian. The depth values z can be obtained by applying Equation 1 given the camera focal length f in pixels and the camera separation b for each dataset. Additionally, depth maps are stored as grayscale images in PNG format for easier visual inspection.
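The .dmap layout described above can be read with a few lines of standard-library Python. This is a minimal sketch, not code shipped with the datasets: the names `read_dmap` and `disparity_to_depth` are hypothetical, and the conversion assumes Equation 1 has the usual form z = f·b / d, which should be checked against the paper. Treating non-positive disparities as invalid is also an assumption.

```python
import struct
import sys
from array import array


def read_dmap(path):
    """Read a .dmap file; returns (width, height, flat row-major floats)."""
    with open(path, "rb") as fh:
        header = fh.read(8)
        if len(header) != 8:
            raise ValueError("file too short for the 8-byte header")
        # Two little-endian 32-bit unsigned integers: width, then height.
        width, height = struct.unpack("<II", header)
        payload = fh.read(width * height * 4)
    if len(payload) != width * height * 4:
        raise ValueError("file too short for width x height floats")
    values = array("f")          # 32-bit floats in native byte order
    values.frombytes(payload)
    if sys.byteorder == "big":   # the file is little-endian
        values.byteswap()
    return width, height, values


def disparity_to_depth(d, f_pixels, baseline):
    """Depth from disparity, ASSUMING Equation 1 is z = f * b / d.

    The result has the same unit as `baseline` (mm for the values
    listed on this page). Non-positive disparities map to NaN.
    """
    return f_pixels * baseline / d if d > 0 else float("nan")
```

The disparity at pixel (x, y) is `values[y * width + x]`. With the Mansion parameters, for example, `disparity_to_depth(values[y * width + x], 7800.0, 10.0)` would give the depth in millimetres under the stated assumption.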