Abstract

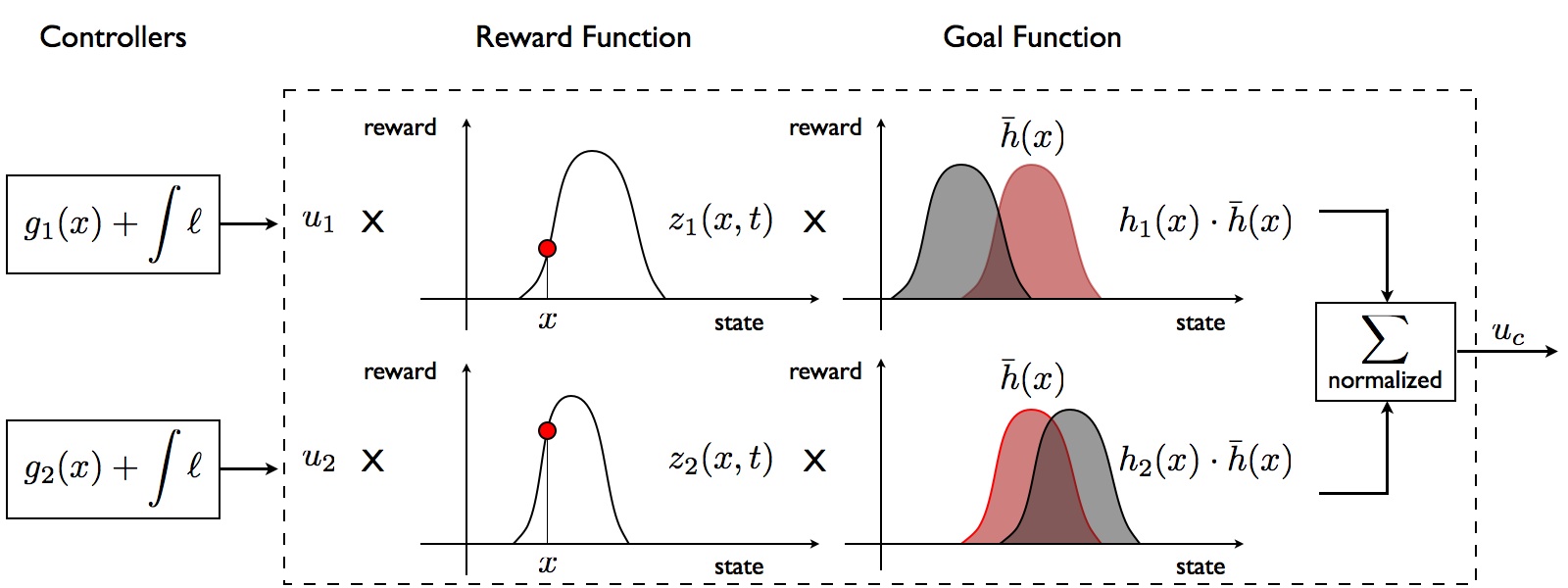

Controllers are necessary for physically based synthesis of character animation. However, creating controllers requires either manual tuning or expensive computer optimization. We introduce linear Bellman combination as a method for reusing existing controllers. Given a set of controllers for related tasks, this combination creates a controller that performs a new task. It naturally weights the contribution of each component controller by its relevance to the current state and goal of the system. We demonstrate that linear Bellman combination outperforms naive combination, often succeeding where naive combination fails. Furthermore, this combination is provably optimal for a new task if the component controllers are also optimal for related tasks. We demonstrate the applicability of linear Bellman combination to interactive character control of stepping motions and acrobatic maneuvers.
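The state-dependent weighting described above can be illustrated with a minimal sketch. It assumes the blending rule familiar from linearly solvable control, in which each component controller's contribution is proportional to a goal weight times the exponentiated negative cost-to-go at the current state. The function names, the scalar value inputs, and the list-based control vectors are hypothetical choices made for illustration; this is not the authors' implementation.

```python
import math


def blend_weights(values, goal_weights):
    """Return normalized blend weights proportional to w_i * exp(-v_i).

    values       -- assumed cost-to-go v_i(x) of each component controller
                    at the current state (lower means more relevant)
    goal_weights -- assumed non-negative weights w_i expressing how much
                    each component's goal matters for the new task
    """
    # Shifting by the minimum value avoids underflow for large costs;
    # the shift cancels out when the scores are normalized.
    v_min = min(values)
    scores = [w * math.exp(-(v - v_min)) for v, w in zip(values, goal_weights)]
    total = sum(scores)
    if total <= 0.0:
        raise ValueError("at least one goal weight must be positive")
    return [s / total for s in scores]


def combine_controls(controls, values, goal_weights):
    """Blend component control vectors using the state-dependent weights."""
    alphas = blend_weights(values, goal_weights)
    dim = len(controls[0])
    return [sum(a * u[k] for a, u in zip(alphas, controls)) for k in range(dim)]
```

Under these assumptions, two components with equal cost-to-go and equal goal weights contribute equally, while a component whose cost-to-go is much higher at the current state is effectively switched off. A naive combination with fixed weights would not adapt to the state in this way.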

Acknowledgments

This project was inspired by Emo Todorov's work on linearly solvable Markov decision processes. Russ Tedrake provided some early feedback on how combination might be used in practice. Emo provided a MATLAB implementation of his z-iteration algorithm, which allowed us to test combination on simple examples. Michiel van de Panne provided a detailed and helpful early review of our paper. This work was supported by grants from the Singapore-MIT Gambit Game Lab, the National Science Foundation (2007043041, CCF-0810888), Adobe Systems, and Pixar Animation Studios, and by software donations from Autodesk and Adobe Systems.

BibTeX

@article{dasilva:2009:lbc,
  author  = {Marco da Silva and
             Fr{\'e}do Durand and
             Jovan Popovi{\'c}},
  title   = {Linear Bellman Combination for Control of Character Animation},
  journal = {ACM Trans. Graph.},
  volume  = {26},
  number  = {3},
  year    = {2009},
  pages   = {1--1},
}

Marco da Silva Last modified: Tue Aug 11 15:44:52 EDT 2009