Group Norm for Learning Latent Structural SVMs

NIPS Workshop on Optimization for Machine Learning, 2011

Abstract

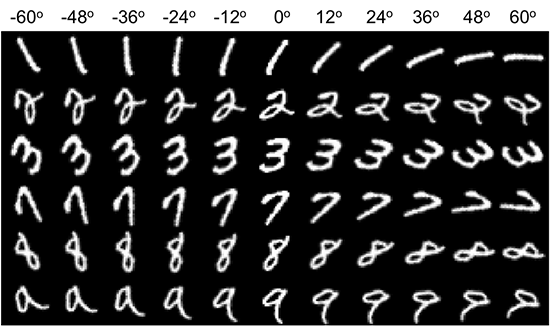

Latent variable models have been widely applied to many problems in machine learning and related fields such as computer vision and information retrieval. However, the complexity of the latent space in such models is typically left as a free design choice. A larger latent space results in a more expressive model, but such models are prone to overfitting and are slower at inference. The goal of this paper is to regularize the complexity of the latent space and learn which hidden states are relevant for the prediction problem. To this end, we propose regularization with a group norm such as l1-l2 to estimate the parameters of a Latent Structural SVM. Our experiments on digit recognition show that our approach is indeed able to control the complexity of the latent space, resulting in significantly faster inference at test time without any loss in accuracy of the learnt model.
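As a rough illustration of the l1-l2 group norm mentioned in the abstract, the sketch below sums the Euclidean norms of parameter groups, so that an entire group can be driven to zero at once. The grouping (one group of weights per hidden state), the function names, and the toy values are assumptions made for illustration and are not taken from the paper.

```python
import math

def group_l1_l2_norm(groups):
    """l1-l2 group norm: the sum (l1) of the Euclidean (l2) norms of each group."""
    return sum(math.sqrt(sum(w * w for w in group)) for group in groups)

def active_states(groups, tol=1e-8):
    """Indices of groups whose l2 norm is above tol, i.e. hidden states still in use."""
    return [i for i, group in enumerate(groups)
            if math.sqrt(sum(w * w for w in group)) > tol]

# Hypothetical parameters: three hidden states with two weights each (assumed layout).
weights = [
    [3.0, 4.0],  # l2 norm 5
    [0.0, 0.0],  # l2 norm 0: this hidden state has been switched off
    [0.0, 2.0],  # l2 norm 2
]

penalty = group_l1_l2_norm(weights)
kept = active_states(weights)
```

In this toy setting the second hidden state contributes nothing to the penalty and could be skipped during inference, which is the kind of saving at test time that the abstract describes.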


This work was supported by funds from Google Grants and from Shell Research.