Abstract:

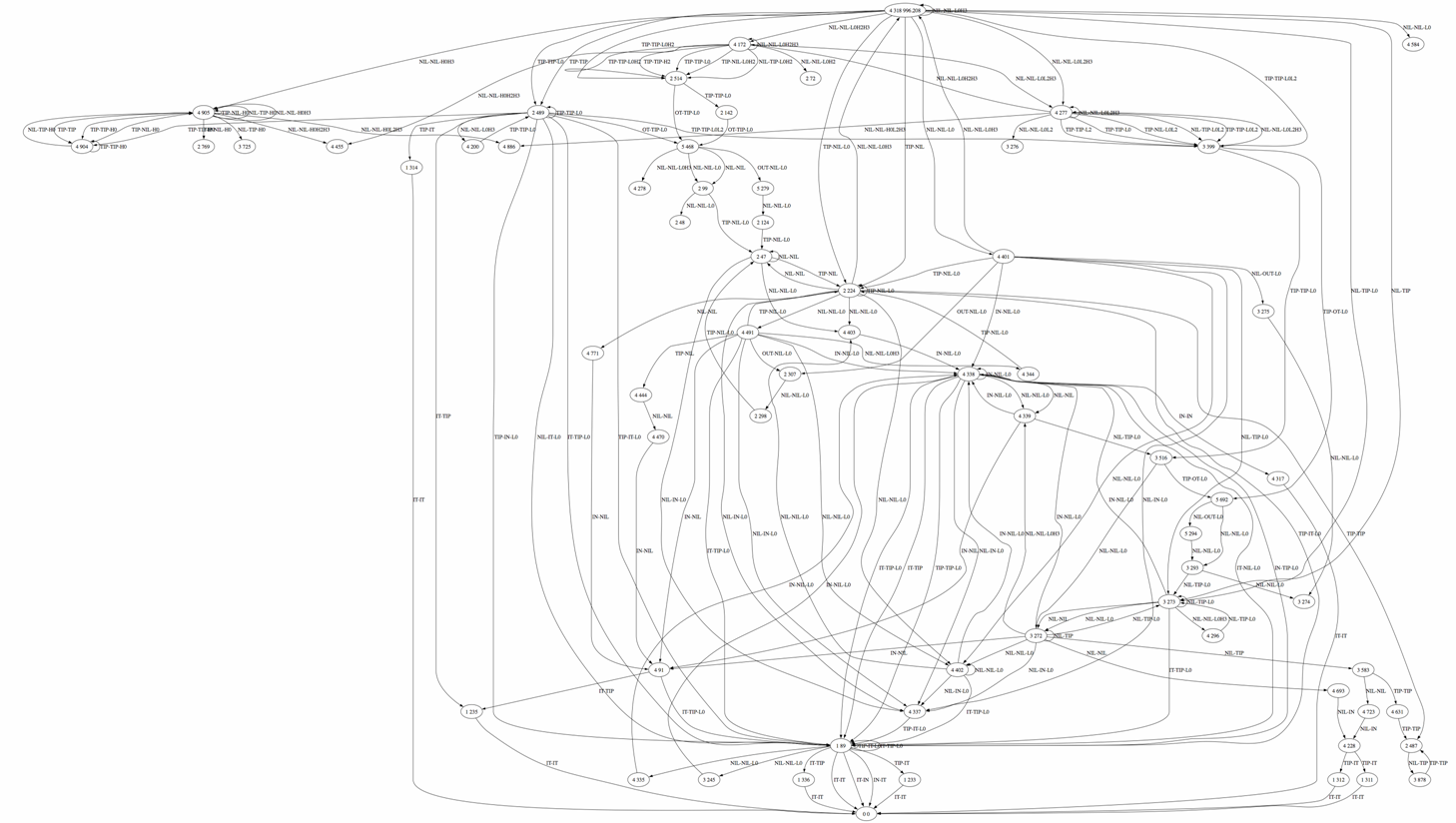

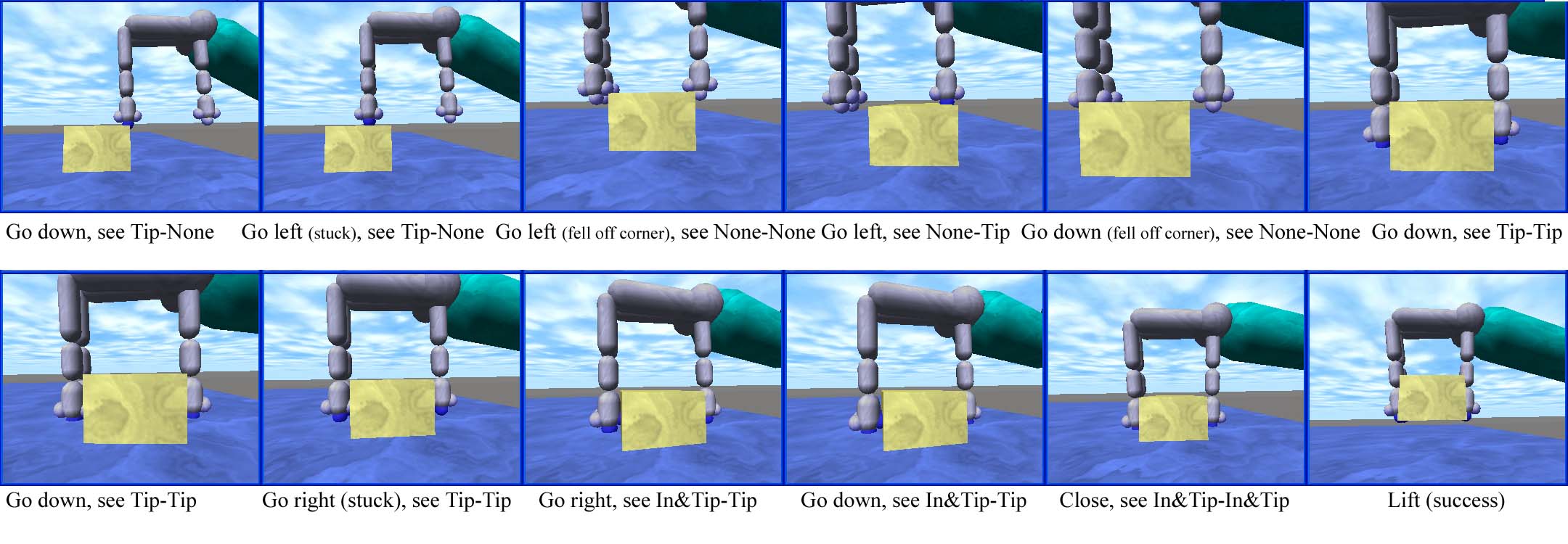

We provide a method for planning under uncertainty for robotic manipulation by partitioning the configuration space into a set of regions that are closed under compliant motions. These regions can be treated as abstract states in a partially observable Markov decision process (POMDP), which can be solved to yield optimal control policies under uncertainty. We demonstrate the approach on simple grasping problems both in simulation and on an actual Barrett Arm, showing that it can construct highly robust, efficiently executable solutions.
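To make the abstract-state idea more concrete, here is a minimal sketch of the Bayes belief update that a discrete POMDP performs over such regions. Everything in it is a hypothetical toy model: the region names (`free_space`, `touching_top`, and so on), the two actions, and all transition and observation probabilities are illustrative assumptions, not the models used in the papers. Computing the optimal policy itself is not shown.

```python
from typing import Dict

Belief = Dict[str, float]

# Hypothetical abstract states: contact regions for a single finger near a box.
# All names and probabilities here are illustrative assumptions, not values from the papers.
STATES = ["free_space", "touching_top", "touching_side", "at_corner"]

# T[action][s][s2]: probability that a compliant (guarded) motion starting in
# region s terminates in region s2. Each row sums to 1.
T: Dict[str, Dict[str, Dict[str, float]]] = {
    "move_down": {
        "free_space": {"touching_top": 0.7, "free_space": 0.3},
        "touching_top": {"touching_top": 1.0},
        "touching_side": {"touching_side": 1.0},
        "at_corner": {"at_corner": 1.0},
    },
    "move_left": {
        "free_space": {"free_space": 0.5, "touching_side": 0.5},
        "touching_top": {"at_corner": 0.9, "touching_top": 0.1},
        "touching_side": {"touching_side": 1.0},
        "at_corner": {"at_corner": 1.0},
    },
}

# O[s2][o]: probability of a (hypothetical) contact-sensor reading o in region s2.
O: Dict[str, Dict[str, float]] = {
    "free_space": {"no_contact": 0.9, "contact": 0.1},
    "touching_top": {"no_contact": 0.1, "contact": 0.9},
    "touching_side": {"no_contact": 0.1, "contact": 0.9},
    "at_corner": {"no_contact": 0.05, "contact": 0.95},
}


def belief_update(belief: Belief, action: str, obs: str) -> Belief:
    """Bayes filter: b'(s2) is proportional to O(obs | s2) * sum_s T(s2 | s, action) * b(s)."""
    # Prediction step: push the belief through the compliant-motion transition model.
    predicted = {s2: 0.0 for s2 in STATES}
    for s, p in belief.items():
        for s2, t in T[action][s].items():
            predicted[s2] += t * p
    # Correction step: weight by the observation likelihood, then normalize.
    unnormalized = {s2: O[s2][obs] * predicted[s2] for s2 in STATES}
    z = sum(unnormalized.values())
    if z == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s2: v / z for s2, v in unnormalized.items()}


if __name__ == "__main__":
    b = {"free_space": 1.0, "touching_top": 0.0, "touching_side": 0.0, "at_corner": 0.0}
    b = belief_update(b, "move_down", "contact")
    b = belief_update(b, "move_left", "contact")
    print({s: round(p, 3) for s, p in b.items()})
```

In this toy model, two guarded moves that both end in contact concentrate most of the belief on the corner region. Because each action is a compliant motion that ends in one of a small number of regions, the belief remains a short discrete distribution rather than a density over continuous configurations.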

Papers:

"Grasping POMDPs: Theory and Experiments," Ross Glashan, Kaijen Hsiao, Leslie Pack Kaelbling, and Tomas Lozano-Perez. RSS Manipulation Workshop: Sensing and Adapting to the Real World, 2007.

"Grasping POMDPs," Kaijen Hsiao, Leslie Pack Kaelbling, and Tomas Lozano-Perez. ICRA, 2007.

More details are provided in Chapter 2 of my thesis.

Videos (simulated robot):

Two-fingered grasping of boxes

Two-fingered grasping of stepped blocks

Videos (real Barrett Arm):

Placing one finger relative to a stepped block

Two-fingered grasping of a box