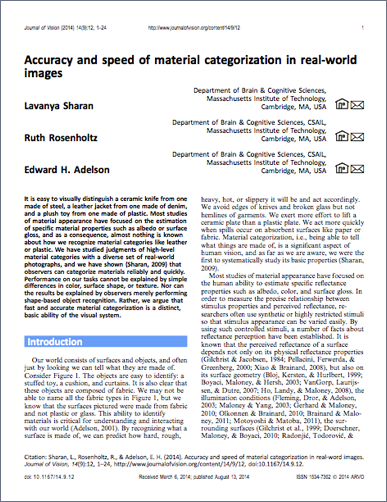

Accuracy and speed of material categorization in real-world images

[Flickr Material Database] | [JoV paper] | [PhD thesis]

Figure 1: Ordinary objects that are made of fabric. [image credit]

Our world consists of surfaces and objects, and often just by looking we can tell what they are made of. Consider Figure 1. The objects are easy to identify: a stuffed toy, a couch cushion, and curtains. It is also clear that these objects are made of fabric. This ability to identify materials, known as material categorization, is a significant aspect of human vision, and as far as we are aware, we were the first to systematically study its basic properties.

We collected a diverse set of real-world photographs, and we presented these photographs to human observers in a variety of presentation conditions to establish the accuracy and speed of material categorization. We found that observers could categorize high-level material categories (e.g., leather, plastic) reliably and quickly. In addition, we examined the role of surface properties like color and texture, and of object properties like surface shape and object identity. Simple strategies based on color, texture, or surface shape could not account for our results. Nor could our results be explained by observers merely performing shape-based object recognition. Rather, we argue that fast and accurate material categorization is a distinct, basic ability of the visual system.

This webpage summarizes our main findings. Please refer to the paper or thesis for details. This work was also presented at VSS'08 (oral), OPAM'08 (poster), VSS'09 (oral), and ICCNS'09 (poster).

It is well known that observers can quickly extract a lot of information about objects and scenes from photographs that they have never seen before (Biederman et al. 1974; Potter 1975, 1976; Thorpe et al. 1996). We wanted to understand if this was true for materials as well. We presented photographs from our dataset, one at a time, and asked observers to report if surfaces belonging to a target material category (e.g., metal) were present. These demos illustrate our task:

Demo 1: Plastic or non-plastic? [answer]

Demo 2: Metal or non-metal? [answer]

We found that observers could easily categorize materials in these challenging conditions, achieving 80.2% accuracy (chance = 50%) for exposures as brief as 40 ms. This performance was similar to that reported for object (Bacon-Mace et al., 2005) and scene categorization tasks (Greene & Oliva, 2009), which suggests that the time course of material category judgments is comparable to that of object and scene judgments.

As reported in the paper, we also measured the reaction time (RT) required to make a categorization response. We found that median RTs for material categorization tasks (e.g., plastic vs. non-plastic) were approximately 100 ms slower than for baseline tasks of color (red vs. blue) and orientation discrimination (45° vs. -45°), suggesting that roughly 100 ms of additional processing is needed for material recognition to occur.
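The RT comparison described above amounts to a difference of medians between a material task and a baseline task. The following is a minimal sketch of that arithmetic, not the analysis code used in the paper: the function name `median_rt_difference` and the RT values are invented for illustration, and how RTs were pooled across observers and trials is an assumption left to the paper.

```python
from statistics import median


def median_rt_difference(task_rts_ms, baseline_rts_ms):
    """Return median(task) - median(baseline), in milliseconds."""
    if not task_rts_ms or not baseline_rts_ms:
        raise ValueError("both RT lists must be non-empty")
    return median(task_rts_ms) - median(baseline_rts_ms)


# Invented values for illustration only; these are NOT data from the study.
material_rts_ms = [520, 540, 550, 580, 610]  # e.g., plastic vs. non-plastic
baseline_rts_ms = [430, 440, 455, 470, 500]  # e.g., red vs. blue

extra_ms = median_rt_difference(material_rts_ms, baseline_rts_ms)
print(f"Median RT cost relative to baseline: {extra_ms} ms")
```

Medians are used here, as in the text, because RT distributions are typically skewed by occasional slow responses.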

Put together, these findings establish that material categories can be identified quickly and accurately.

Figure 2 panels: (1st row) Original, Grayscale, Grayscale Blurred, Grayscale Negative; (2nd row) Shape I, Shape II, Texture I, Texture II; (3rd row) Color I, Color II.

Figure 2: We manipulated each photograph in our database to either (1st row) degrade much of the information from a particular surface property while preserving the information from other properties or (2nd & 3rd rows) vice-versa. The Grayscale manipulation tests the necessity of color information. Grayscale Blurred degrades texture information, and Grayscale Negative makes it hard to see reflectance cues like specular highlights and shadows. The Shape I and II manipulations preserve either the global silhouette of the object or a line drawing-like sketch of the surface shape. The Texture I and II manipulations preserve local spatial-frequency content, including color variations, but lose overall surface shape. The Color I and II manipulations preserve aspects of the distribution of colors within the material, but lose all shape information, and all or much of the texture. [image credit]
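To make the first-row manipulations in Figure 2 concrete, here is a rough, dependency-free sketch of a grayscale conversion, a blur, and a contrast negative. It is an illustration under stated assumptions, not the procedure used to build the stimuli: the Rec. 601 luma weights, the box filter, and the `radius` parameter are all stand-ins chosen for simplicity, and images are assumed to be nested lists of `(r, g, b)` tuples with values in [0, 1].

```python
def to_grayscale(rgb):
    """Remove color: weighted sum of R, G, B (Rec. 601 weights, an assumption)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb]


def box_blur(gray, radius=1):
    """Degrade fine texture by averaging each pixel with its neighbors.

    A box filter is a stand-in here; the filter used for the actual
    stimuli is not specified on this page.
    """
    height, width = len(gray), len(gray[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            # Clip the averaging window at the image borders.
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            window = [gray[j][i] for j in ys for i in xs]
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def negative(gray):
    """Invert intensities so highlights appear dark and shadows appear light."""
    return [[1.0 - v for v in row] for row in gray]


# Tiny 2x2 checkerboard used only to exercise the functions.
checker = [[(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)],
           [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]]

gray = to_grayscale(checker)
gray_blurred = box_blur(gray, radius=1)
gray_negative = negative(gray)
```

In practice one would use an image-processing library rather than nested lists; the point is only that each manipulation removes one kind of cue (color, fine texture, or the usual polarity of highlights and shadows) while leaving the others largely intact.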

In deciding whether an image contained a target material category, observers may have employed heuristics based on color, reflectance, or shape. For example, wooden surfaces tend to be brown, metal surfaces tend to be shiny, and plastic surfaces tend to be smooth. Numerous studies have shown that human observers can reliably estimate surface properties such as color, albedo, gloss, and 3-D shape from images (Todd, 2004; Gilchrist, 2006; Adelson, 2008; Brainard, 2009; Shevell & Kingdom, 2010; Anderson, 2011; Maloney, Gerhard, Boyaci, & Doerschner, 2011; Fleming, 2012). Is it possible, therefore, that judgments of material categories are merely judgments of surface properties such as reflectance and shape?

We addressed this question by measuring the contributions of four surface properties—color, gloss, texture, and shape—to material category judgments. We modified the photographs in our dataset to either emphasize or deemphasize these surface properties. We then presented the modified images to observers and compared the material categorization performance on the modified images to that on the original photographs. The differences in performance reveal the role of each surface property in high-level material categorization.

We found that observers had no trouble judging the material category when one or more surface properties were deemphasized (Figure 2, 1st row); however, they struggled when only one surface property was emphasized (2nd & 3rd rows). These results tell us that the knowledge of color, gloss, texture, or shape, in itself, is not sufficient for material categorization. This is not to suggest that these properties are not important for the task, but that they are not useful individually.

The material(s) that an object is made of are usually not arbitrary. Keys are made of metallic alloys, candles of wax, and tires of rubber. It is reasonable to believe that we form associations between objects and the materials they are made of. Given the object identity (e.g., book), we can easily infer the material identity (e.g., paper) on the basis of such learned associations. Is it possible then that material category judgments are simply derived from object knowledge? Perhaps the speeds we have measured for material categorization are merely a consequence of fast object recognition (Biederman et al., 1974; Potter, 1975, 1976; Intraub, 1981; Thorpe et al., 1996) followed by inferences based on object-material relationships.

Figure 3: Examples from our dataset of real and fake objects: (1st row) a knit wool cupcake, flowers made of fabric, plastic fruits; (2nd row) genuine cupcake, flower, and fruits. [image credit]

To understand the role of object knowledge in material categorization, we used a new set of images designed to dissociate object and material identities. We gathered 300 photographs of real and fake objects such as desserts, fruits, and flowers. These fake items and their genuine versions are useful for our purposes as they differ mainly in their material composition, but not their shape-based object category. Consider Figure 3. Recognizing the objects in these images as fruits or flowers is not sufficient; one has to assess whether the material appearance of these objects is consistent with standard expectations for that object category. The real vs. fake discrimination can be viewed as a material category judgment, even if it is a subtler judgment than, say, plastic vs. non-plastic.

We presented these photographs of real and fake objects to observers and asked them to identify the object category (dessert, fruit, or flower?) and the real vs. fake category. By comparing observers’ performance on these two tasks, we could determine whether material categorization is simply derived from shape-based object recognition. We found that object judgments were significantly faster and more accurate than real vs. fake judgments, which suggests that object categorization engages different mechanisms from material categorization.

To cite our work or our dataset, please use:

L. Sharan, R. Rosenholtz, and E. H. Adelson, "Accuracy and speed of material categorization in real-world images", Journal of Vision, vol. 14, no. 9, article 12, 2014.

@article{Sharan-JoV-14,

author = {Lavanya Sharan and Ruth Rosenholtz and Edward H. Adelson},

title = {Accuracy and speed of material categorization in real-world images},

journal = {Journal of Vision},

volume = {14},

number = {9},

year = {2014}

}

This work was supported by NIH grants R01-EY019262 and R21-EY019741 and a grant from NTT Basic Research Laboratories. We thank Aseema Mohanty for help with database creation, Alvin Raj for help with Texture manipulations, Aude Oliva for use of eye-tracking equipment, and for discussions: Aude Oliva, Michelle R. Greene, Barbara Hidalgo-Sotelo, Molly Potter, Nancy Kanwisher, Jeremy Wolfe, Roland W. Fleming, Shin’ya Nishida, Isamu Motoyoshi, Micah K. Johnson, Alvin Raj, Ce Liu, James Hays, and Bei Xiao.