About

I am a PhD candidate at MIT CSAIL. I work with Prof. Julie Shah in the Interactive Robotics Group. My research interests include reinforcement learning, interactive machine learning, and human-robot interaction. My aim is to develop intelligent robots that can adapt and generalize through interaction with people. Towards this goal, we are developing interpretable and interactive learning algorithms that can incorporate human feedback to improve transparency and learning in new environments.

I received my M.S. at MIT in 2015. My work involved developing algorithms to enable effective human-robot team training. Prior to MIT, I received my B.S. in Computer Science from Georgia Tech.

Research

Human-in-the-loop Simulation to Real World Transfer

Deploying AI systems safely in the real world is challenging. The complex nature of the open world makes it difficult for machines trained on limited data to adapt and generalize well. The errors that result from an imperfect model can be extremely costly (e.g., car accidents, robot collisions). With the help of a human, we identify error regions, or blind spots, that arise from mismatches between simulator and real-world environments. Detecting these errors can lead to safer deployment of these systems.

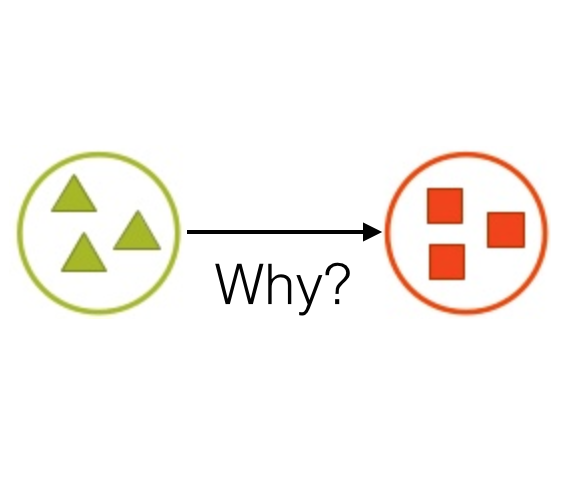

Interpretable Transfer Learning

Developing intelligent agents that can interact in the real world requires agents to be adaptable to both situations and people. Generalizing to new situations requires successful transfer learning, or the adaptation of prior learned knowledge to new tasks. Adapting to people requires an interpretable medium for human-machine interaction. We are working on developing more interpretable transfer learning algorithms that can be incorporated more easily into interactive systems.

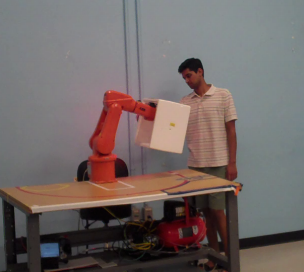

Learning Models for Human Preferences

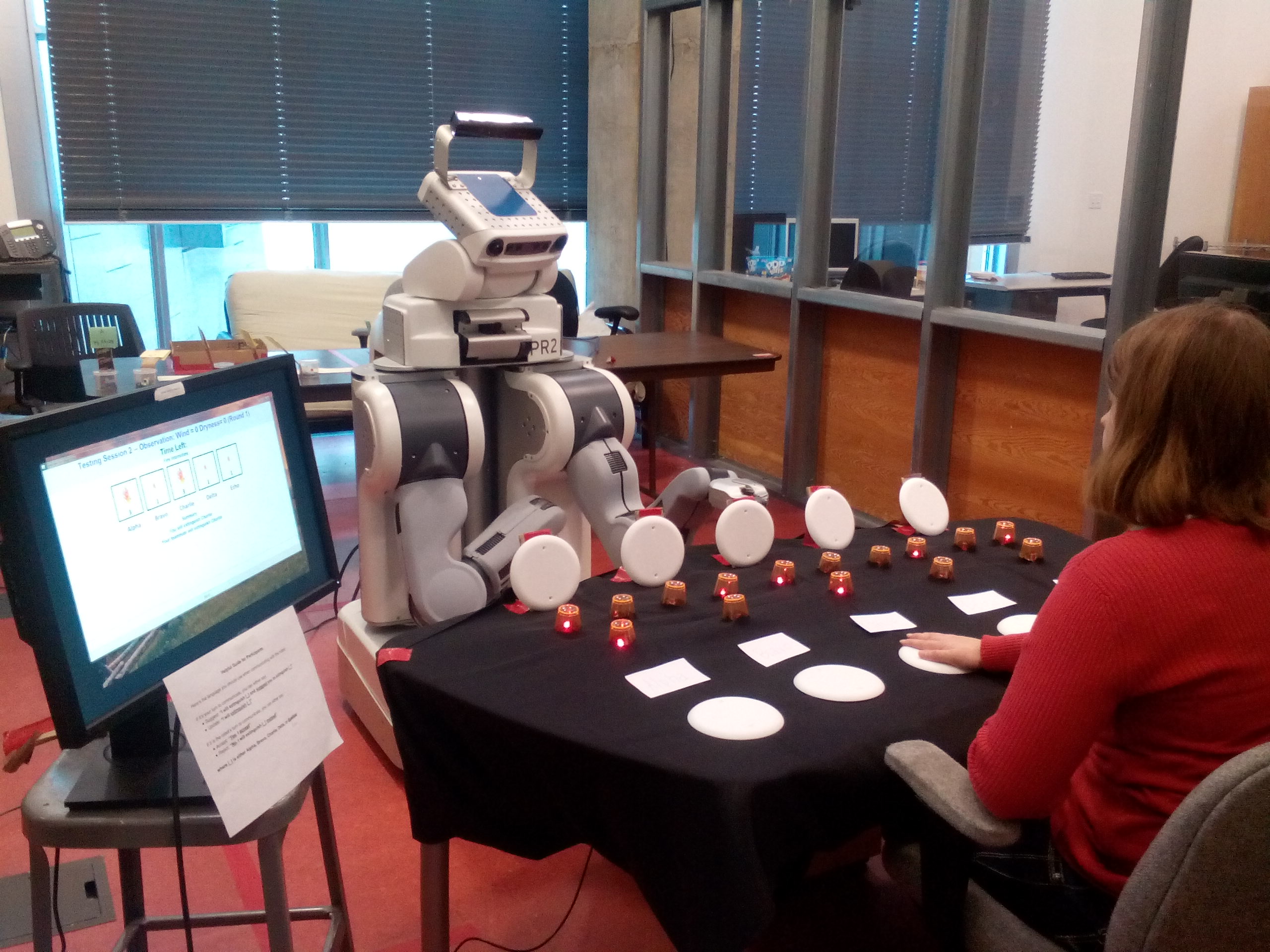

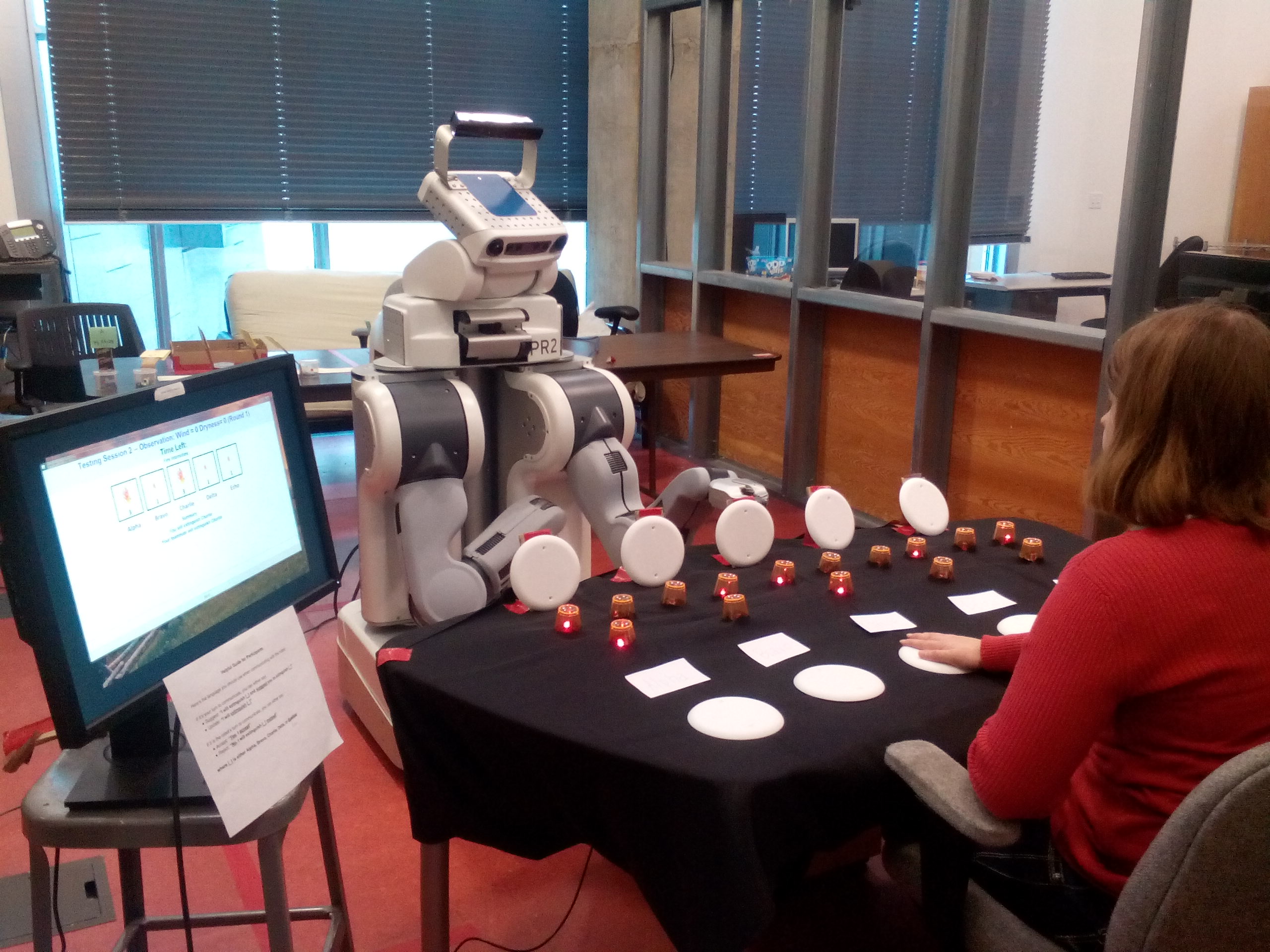

As robots are integrated more into environments with people, it is essential for robots to adapt to people's preferences. In this work, we automatically learn user models from joint-action demonstrations. We first learn human preferences using an unsupervised clustering algorithm and then use inverse reinforcement learning to learn a reward function for each preference. When working with a new user, the hidden preference is inferred online. We demonstrate through human subject experiments on a collaborative refinishing task that the framework supports effective human-robot teaming.
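The online inference step can be pictured as a belief update over preference types. The following is only a minimal sketch under stated assumptions, not the method from the paper: the two preference types, the action names, the reward values, and the softmax action model are all hypothetical stand-ins for what clustering and inverse reinforcement learning would produce.

```python
import math

# Hypothetical per-type rewards over two actions. In the framework described
# above, these would come from inverse reinforcement learning on each cluster.
TYPE_REWARDS = [
    {"sand_first": 1.0, "polish_first": 0.0},
    {"sand_first": 0.0, "polish_first": 1.0},
]


def action_likelihood(rewards, action, temperature=1.0):
    """Softmax probability of `action` under one type's rewards (assumed model)."""
    weights = {a: math.exp(r / temperature) for a, r in rewards.items()}
    return weights[action] / sum(weights.values())


def update_belief(belief, action, type_rewards=TYPE_REWARDS):
    """One Bayes step: posterior is proportional to prior times likelihood."""
    unnormalized = [
        b * action_likelihood(r, action) for b, r in zip(belief, type_rewards)
    ]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def infer_preference(actions, type_rewards=TYPE_REWARDS):
    """Start from a uniform belief and update it after each observed action."""
    belief = [1.0 / len(type_rewards)] * len(type_rewards)
    for action in actions:
        belief = update_belief(belief, action, type_rewards)
    return belief
```

Calling `infer_preference(["polish_first", "polish_first"])` shifts the belief toward the second type, which is the sense in which a new user's hidden preference can be tracked as they act.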

Human-Robot Team Training

With a rise in joint human-robot teams, an important concern is how we can effectively train these teams. "Perturbation training" is a training approach used in human teams in which team members practice variations of a task to generalize to new situations. In this work, we develop the first end-to-end framework for human-robot perturbation training, which includes a multi-agent transfer learning algorithm, a human-robot co-learning framework, and a communication protocol. We perform computational and human subject experiments to validate the benefits of our framework.

Journal/Conference Publications

Blind Spot Detection for Safe Sim-to-Real Transfer

Ramya Ramakrishnan, Ece Kamar, Debadeepta Dey, Eric Horvitz, Julie Shah

Accepted to Journal of Artificial Intelligence Research (JAIR) 2019

Overcoming Blind Spots in the Real World: Leveraging Complementary Abilities for Joint Execution

Ramya Ramakrishnan, Ece Kamar, Besmira Nushi, Debadeepta Dey, Julie Shah, Eric Horvitz

Association for the Advancement of Artificial Intelligence (AAAI) 2019

Discovering Blind Spots in Reinforcement Learning

Ramya Ramakrishnan, Ece Kamar, Debadeepta Dey, Julie Shah, Eric Horvitz

Autonomous Agents and Multiagent Systems (AAMAS) 2018

Perturbation Training for Human-Robot Teams

Ramya Ramakrishnan, Chongjie Zhang, Julie Shah

Journal of Artificial Intelligence Research (JAIR) 2017

Efficient Model Learning from Joint-Action Demonstrations for Human-Robot Collaborative Tasks

Stefanos Nikolaidis, Ramya Ramakrishnan, Keren Gu, Julie Shah

Human-Robot Interaction (HRI) 2015

[Best Paper Award - Enabling Technologies]

Improved Human-Robot Team Performance through Cross-Training, A Human Team Training Approach

Stefanos Nikolaidis, Pem Lasota, Ramya Ramakrishnan, Julie Shah

International Journal of Robotics Research (IJRR) 2015

Workshop/Symposium Publications

Bayesian Inference to Identify the Cause of Human Errors

Ramya Ramakrishnan*, Vaibhav Unhelkar*, Ece Kamar, Julie Shah

ICML: Generative Modeling and Model-Based Reasoning for Robotics and AI Workshop 2019

Knowledge Transfer from a Human Perspective

Ramya Ramakrishnan, Julie Shah

AAMAS: Transfer in Reinforcement Learning Workshop 2017

Interpretable Transfer for Reinforcement Learning based on Object Similarities

Ramya Ramakrishnan, Karthik Narasimhan, Julie Shah

IJCAI: Interactive Machine Learning Workshop 2016

Towards Interpretable Explanations for Transfer Learning in Sequential Tasks

Ramya Ramakrishnan, Julie Shah

AAAI Symposium: Intelligent Systems for Supporting Distributed Human Teamwork 2016

Learning Human Types from Demonstration

Stefanos Nikolaidis, Keren Gu, Ramya Ramakrishnan, Julie Shah

AAAI Fall Symposium Series 2014

From virtual to actual mobility: Assessing the benefits of active locomotion through an immersive virtual environment using a motorized wheelchair

Amelia Nybakke*, Ramya Ramakrishnan*, Victoria Interrante

IEEE Symposium on 3D User Interfaces (3DUI) 2012
