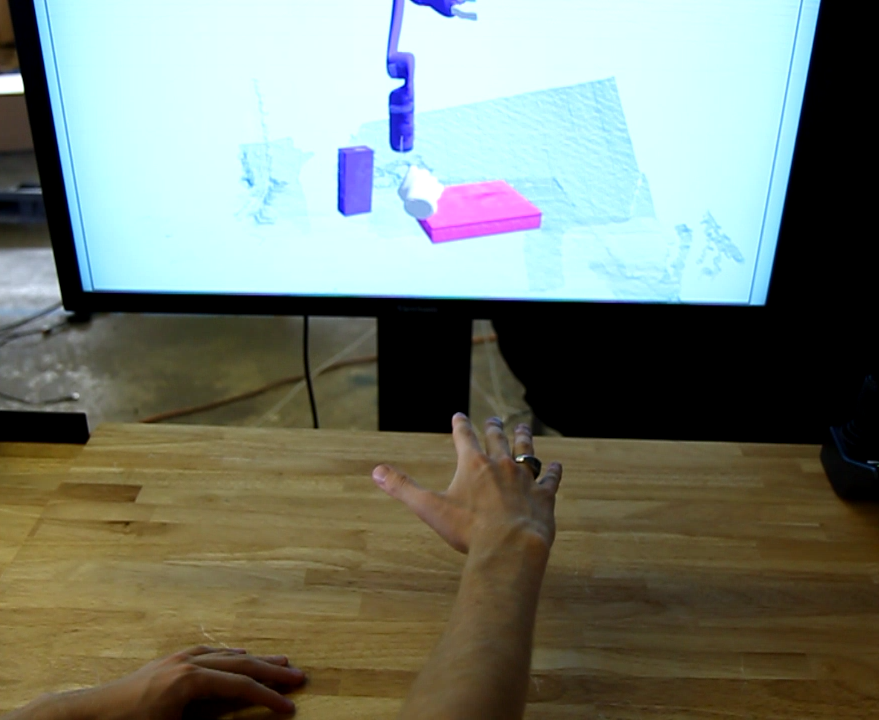

Virtual reality interface for robot task assignment

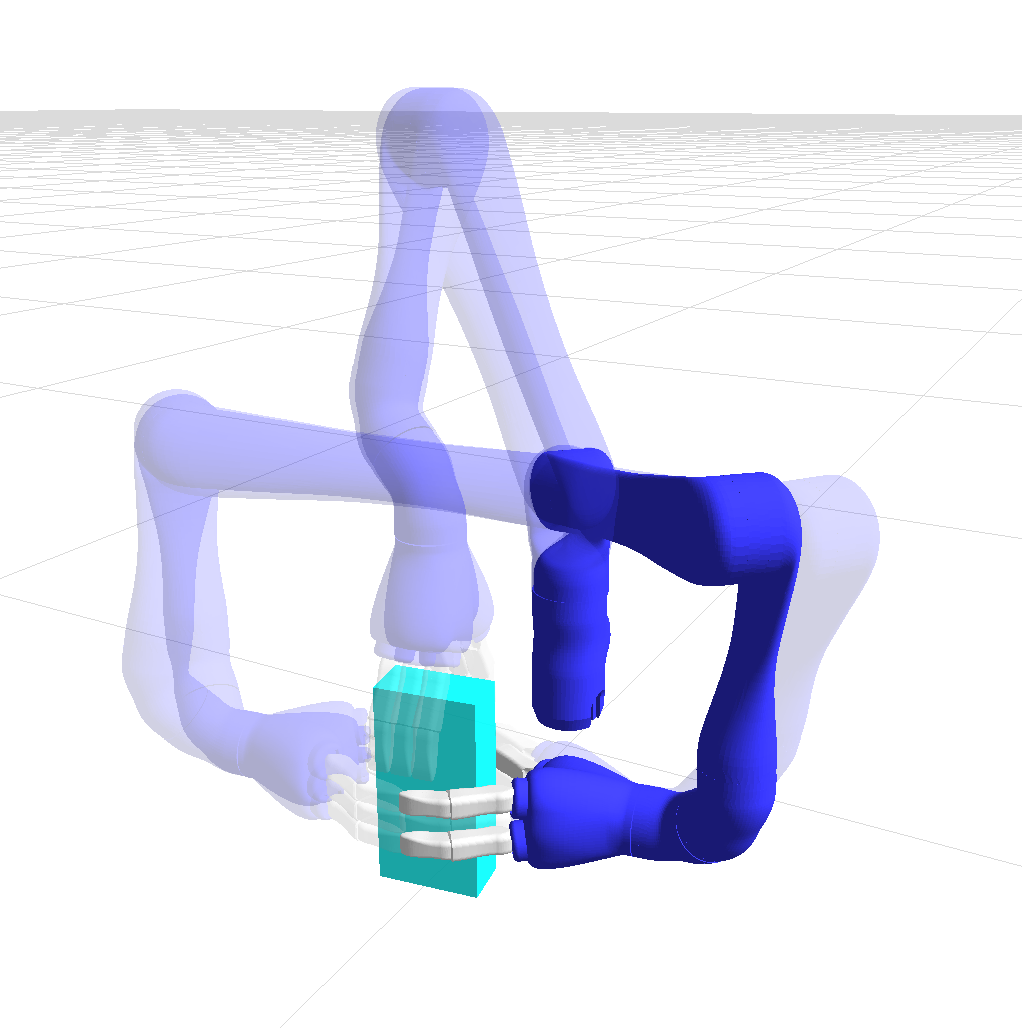

We present a user interface that uses hand-tracking and gesture detection to provide a natural and intuitive way to command a semi-autonomous robotic manipulation system. The interface divides the work so that humans and robots each do what they do best: the robotic system performs object detection, grasp planning, and motion planning, while the human operator oversees high-level task assignment and manages unexpected failures. We discuss how this low-cost, task-level interface can reduce operator training time, decrease the operator's cognitive load, and increase their situational awareness. Potential applications include explosive ordnance disposal, logistics package handling, and assembly tasks.
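The division of labor between robot and operator can be illustrated with a minimal, hypothetical Python sketch: the robot runs its autonomous stages (for example detection, grasp planning, motion planning) in order, and only when a stage fails does control pass to the human, who decides whether to retry or abort. The names `StepResult`, `run_task`, and `ask_operator`, the "retry"/"abort" vocabulary, and the retry limit are illustrative assumptions, not the actual interface of the system described here.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StepResult:
    ok: bool
    message: str = ""


# Hypothetical autonomous stage: takes a task description, reports success or failure.
Stage = Callable[[str], StepResult]

# Hypothetical operator callback: given the task and failure message,
# returns "retry" to try the stage again or anything else to abort.
OperatorCallback = Callable[[str, str], str]


def run_task(goal: str, stages: List[Stage],
             ask_operator: OperatorCallback, max_retries: int = 2) -> str:
    """Run the robot's stages autonomously; escalate failures to the operator."""
    for stage in stages:
        attempts = 0
        while True:
            result = stage(goal)
            if result.ok:
                break  # stage succeeded, move on to the next one autonomously
            attempts += 1
            # The human is consulted only on failure, and at most max_retries times.
            if attempts > max_retries or ask_operator(goal, result.message) != "retry":
                return "aborted"
    return "completed"
```

In this sketch the operator never intervenes while things go well, which mirrors the idea of keeping the human at the level of task assignment and exception handling rather than low-level control.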

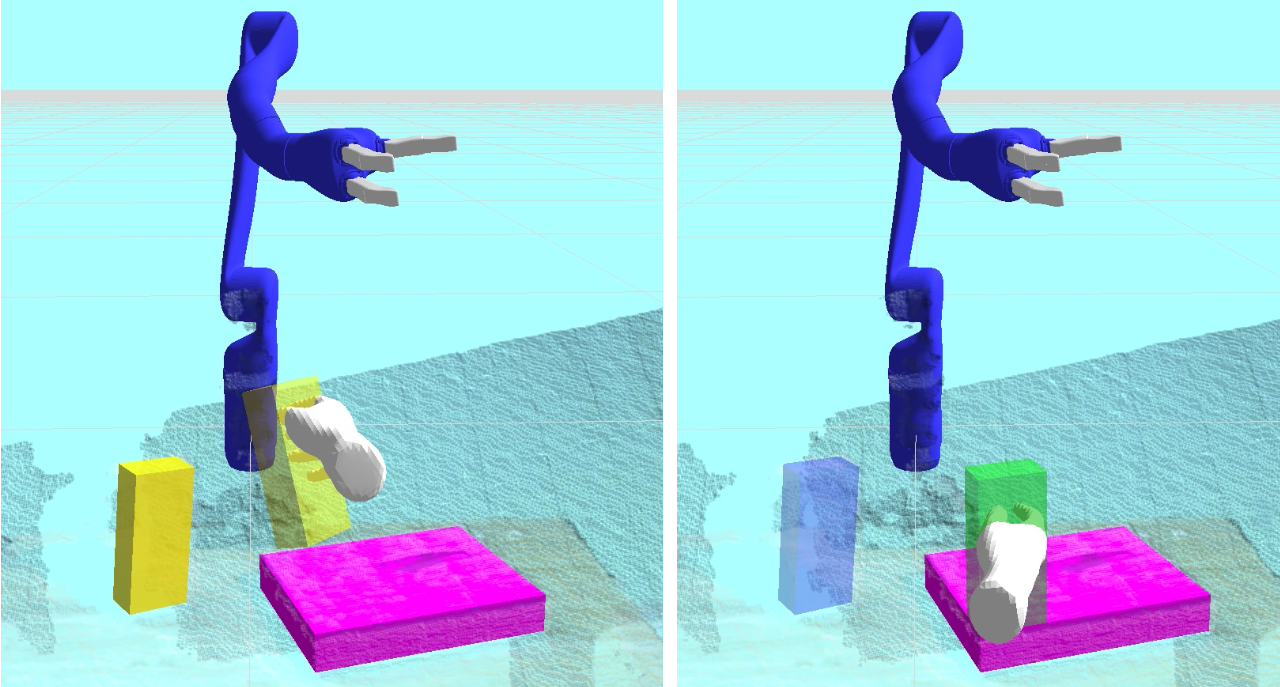

The interface uses a 3D camera to track the user's hand in three dimensions. The user specifies goals by manipulating virtual 3D objects that correspond to real-world objects. For example, stacking one virtual block on another in the virtual reality interface causes the robot to autonomously pick up and stack the corresponding real objects.
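One way a virtual manipulation could be turned into a robot goal is sketched below in Python. This is a hypothetical illustration, assuming each virtual block is a cube linked to a detected real object by an ID, and that a stacking goal is recognized when the user releases a block that rests roughly centered on top of another. The class names, tolerances, and the `StackGoal` message are assumptions for illustration, not the published system's design.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VirtualBlock:
    name: str
    real_object_id: str  # hypothetical link to the corresponding detected real object
    position: Vec3       # block centre in metres (x, y, z), z pointing up
    size: float = 0.05   # edge length in metres; blocks assumed to be cubes


@dataclass(frozen=True)
class StackGoal:
    # Hypothetical task-level goal sent to the robot: place top_object on bottom_object.
    top_object: str
    bottom_object: str


def goal_from_release(released: VirtualBlock, scene: Dict[str, VirtualBlock],
                      xy_tolerance: float = 0.02,
                      z_tolerance: float = 0.01) -> Optional[StackGoal]:
    """Return a StackGoal if the released block rests on another block, else None."""
    rx, ry, rz = released.position
    for other in scene.values():
        if other.name == released.name:
            continue  # a block cannot be stacked on itself
        ox, oy, oz = other.position
        # Horizontally centered over the other block (within tolerance)?
        aligned = abs(rx - ox) <= xy_tolerance and abs(ry - oy) <= xy_tolerance
        # Resting on its top face: centres differ by half of each block's height.
        expected_z = oz + (other.size + released.size) / 2
        resting = abs(rz - expected_z) <= z_tolerance
        if aligned and resting:
            return StackGoal(released.real_object_id, other.real_object_id)
    return None
```

In use, the interface would call `goal_from_release` when the tracked hand opens to release a virtual block, and forward any non-`None` goal to the robot, which then handles detection, grasp planning, and motion planning on its own. Expressing goals at this task level, rather than as hand trajectories, is what lets the operator stay out of low-level control.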

This work was completed while I was an intern at Vecna Technologies in Cambridge, MA, and is joint work with others including Shawn Schaffert and Neal Checka.


Publication

For details, please see the following publication from the Workshop on Human-Robot Collaboration for Industrial Manufacturing at Robotics: Science and Systems (RSS) 2014 in Berkeley, CA.

Natural User Interface for Robot Task Assignment. Steven J. Levine, Shawn Schaffert, Neal Checka. Workshop on Human-Robot Collaboration for Industrial Manufacturing at Robotics: Science and Systems (RSS) 2014, Berkeley, CA.