Please support our work with a donation!

- Go to MIT's Donation Form for the project.

- Enter a donation amount, then press CONTINUE to finalize.

We are grateful to the Andrea Bocelli Foundation and to the MIT EECS Super-UROP program for their support of our research.

We are focusing first on three core capabilities:

Safe mobility and navigation:

Current focus: Where is the safe walking surface? Where are the trip and collision hazards?

In future: Where am I? Which way is it to my destination? When is the next turn, landmark, or other salient environmental aspect coming up? What type of place am I in, or near? Do my surroundings include text, and if so, what is it? Where is the affordance (e.g., kiosk, concierge desk, elevator lobby, water fountain) that I seek? What transit options (bus, taxi, train, etc.) are nearby or arriving?

Person detection & identification:

Current focus: Are there people nearby or approaching? If so, where is each person, and what is his/her identity?

In future: What is the person's facial expression, body stance, and body language? What kind of clothing is s/he wearing? What is s/he doing?

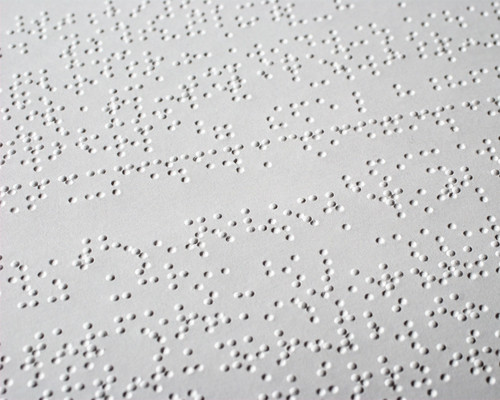

Tactile/aural interface:

Current focus: How can information be conveyed non-visually to the user, for example through a MEMS tactile display?

In future: How can the system effectively engage in spoken dialogue with the user, so that the user can specify his/her goals to the system, with the system requesting clarification when needed? When should the system deliver information to the user (e.g., during breaks in conversation)?

Team members include:

- Prof. Seth Teller (MIT EECS/CSAIL) Assistive technology, machine perception, human-machine interaction.

- Dr. Jim Glass (MIT CSAIL) Spoken-language systems.

- Prof. Carol Livermore (NEU MechE) Microelectromechanical systems (MEMS).

- Prof. Rob Miller (MIT EECS/CSAIL) Human-computer interaction, user interfaces, crowdsourcing.

- Prof. Aude Oliva (MIT BCS/CSAIL) Human perception, scene understanding.

- Prof. Nick Roy (MIT Aero-Astro/CSAIL) Planning under uncertainty, machine learning.

- Prof. Antonio Torralba (MIT EECS/CSAIL) Machine vision, machine learning.