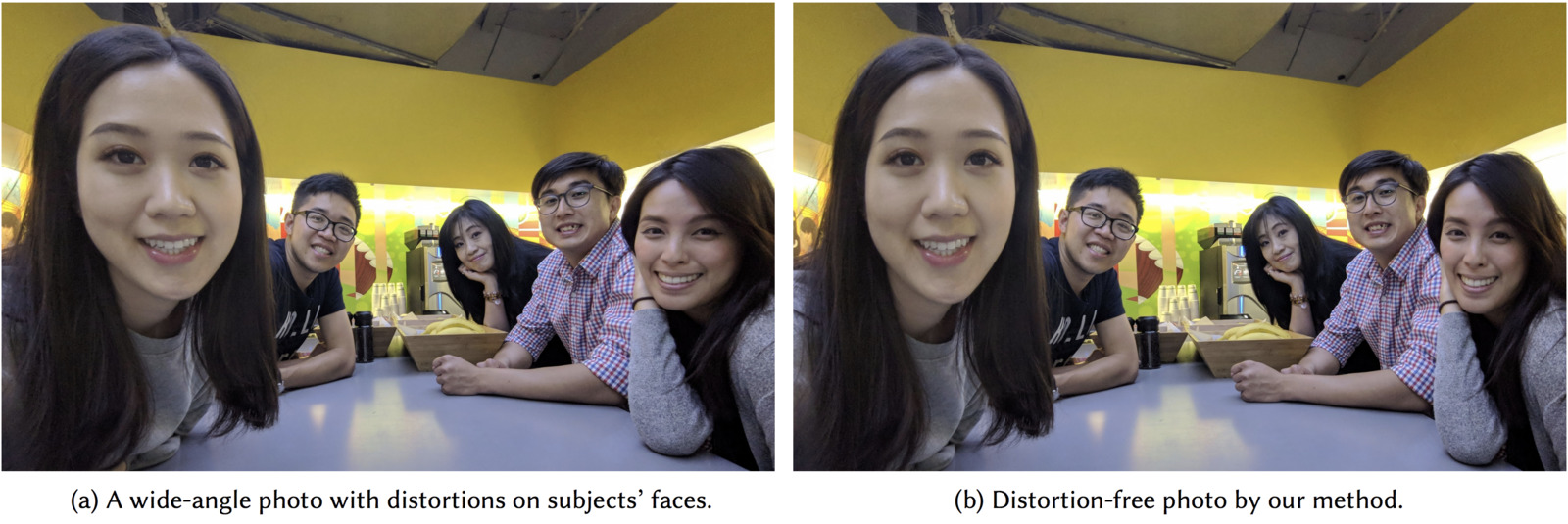

Distortion-Free Wide-Angle Portraits on Camera Phones

(a) A group selfie taken by a wide-angle phone camera with a 97° field of view. Perspective projection gives faces on the periphery an unnatural look: they are stretched, twisted, and squished. (b) Our algorithm restores all the distorted face shapes and leaves the background unaffected.

Publication

Abstract

Photographers take wide-angle shots to capture expansive views, group portraits that leave no one out, or subjects composed against spectacular scenery. Although wide-angle cameras have proliferated rapidly on mobile phones, a wider field of view (FOV) introduces stronger perspective distortion. Most notably, faces are stretched, squished, and skewed, so that they look vastly different from real life. Correcting such distortions requires professional editing skills, as trivial manipulations can introduce other kinds of distortions. This paper introduces a new algorithm to undistort faces without affecting other parts of the photo. Given a portrait as input, we formulate an optimization problem to create a content-aware warping mesh that locally adapts to the stereographic projection on facial regions and seamlessly transitions to the perspective projection over the background. Our new energy function performs effectively and reliably for a large group of subjects in the photo. The proposed algorithm is fully automatic and operates at an interactive rate on the mobile platform. We demonstrate promising results on a wide range of FOVs from 70° to 120°.
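To make the two projections named in the abstract concrete, the following is a minimal sketch of how a point in a perspective (pinhole) image could be remapped to a stereographic projection. It is not the paper's optimization: the function names `focal_from_fov`, `perspective_to_stereographic`, and `blend_toward_stereographic` are hypothetical, the ideal pinhole model and the standard stereographic radius 2f·tan(θ/2) are assumptions, and the per-point linear blend by a face weight is a simple stand-in for the content-aware mesh that the energy function produces.

```python
import math


def focal_from_fov(width_px, fov_deg):
    """Focal length in pixels of an ideal pinhole camera (assumed model)
    whose horizontal field of view is fov_deg across width_px pixels."""
    return (width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)


def perspective_to_stereographic(x, y, focal_px):
    """Remap a point given relative to the image center.

    Perspective radius:    r_p = f * tan(theta)
    Stereographic radius:  r_s = 2 * f * tan(theta / 2)
    where theta is the angle between the ray and the optical axis.
    """
    r_p = math.hypot(x, y)
    if r_p == 0.0:
        return (0.0, 0.0)  # the image center is a fixed point
    theta = math.atan2(r_p, focal_px)
    r_s = 2.0 * focal_px * math.tan(theta / 2.0)
    scale = r_s / r_p  # radial rescaling; direction is preserved
    return (x * scale, y * scale)


def blend_toward_stereographic(x, y, focal_px, face_weight):
    """Hypothetical stand-in for the optimized mesh: linearly blend
    between perspective (face_weight = 0, background) and
    stereographic (face_weight = 1, face region) positions."""
    sx, sy = perspective_to_stereographic(x, y, focal_px)
    return (x + face_weight * (sx - x), y + face_weight * (sy - y))
```

Under these assumptions, points near the image center barely move, while points near the edge of a wide-FOV frame are pulled inward, which is where the stretching of faces is strongest. A plain blend like this would not guarantee a smooth transition at face boundaries or straight lines in the background; handling those constraints is what the optimization described above is for.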

Video

Supplemental Materials

Press

Acknowledgements

We thank the reviewers for their numerous suggestions on the user study and exposition. We also thank Ming-Hsuan Yang, Marc Levoy, Timothy Knight, Fuhao Shi, and Robert Carroll for their valuable input. We thank Yael Pritch, David Jacobs, Neal Wadhwa, Juhyun Lee, and Alan Yang for support with subject segmenter and face detector integration, and Kevin Chen and Sung-fang Tsai for GPU acceleration. We thank Sam Hasinoff, Eino-Ville Talvala, Gabriel Nava, Wei Hong, Lawrence Huang, Chien-Yu Chen, Zhijun He, Paul Rohde, Ian Atkinson, and Jimi Chen for support with the mobile platform implementation. We thank Weber Tang, Jill Hsu, Bob Hung, Kevin Lien, Joy Hsu, Blade Chiu, Charlie Wang, and Joy Tsai for image quality feedback, and Karl Rasche and Rahul Garg for proofreading. Finally, we give special thanks to Denis Zorin and all the photography models in this work for photo usage permissions and support with data collection.