MultiMorph: On-demand Atlas Construction

Bruce Fischl², John Guttag¹, Adrian Dalca¹,²

Abstract

We present MultiMorph, a fast and efficient method for constructing anatomical atlases on the fly. Atlases capture the canonical structure of a collection of images and are essential for quantifying anatomical variability across populations. However, current atlas construction methods often require days to weeks of computation, thereby discouraging rapid experimentation. As a result, many scientific studies rely on suboptimal, precomputed atlases from mismatched populations, negatively impacting downstream analyses. MultiMorph addresses these challenges with a feedforward model that rapidly produces high-quality, population-specific atlases in a single forward pass for any 3D brain dataset, without any fine-tuning or optimization. MultiMorph is based on a linear group-interaction layer that aggregates and shares features within the group of input images. Further, by leveraging auxiliary synthetic data, MultiMorph generalizes to new imaging modalities and population groups at test-time. Experimentally, MultiMorph outperforms state-of-the-art optimization-based and learning-based atlas construction methods in both small and large population settings, with a 100-fold reduction in time. This makes MultiMorph an accessible framework for biomedical researchers without machine learning expertise, enabling rapid, high-quality atlas generation for diverse studies.

Method

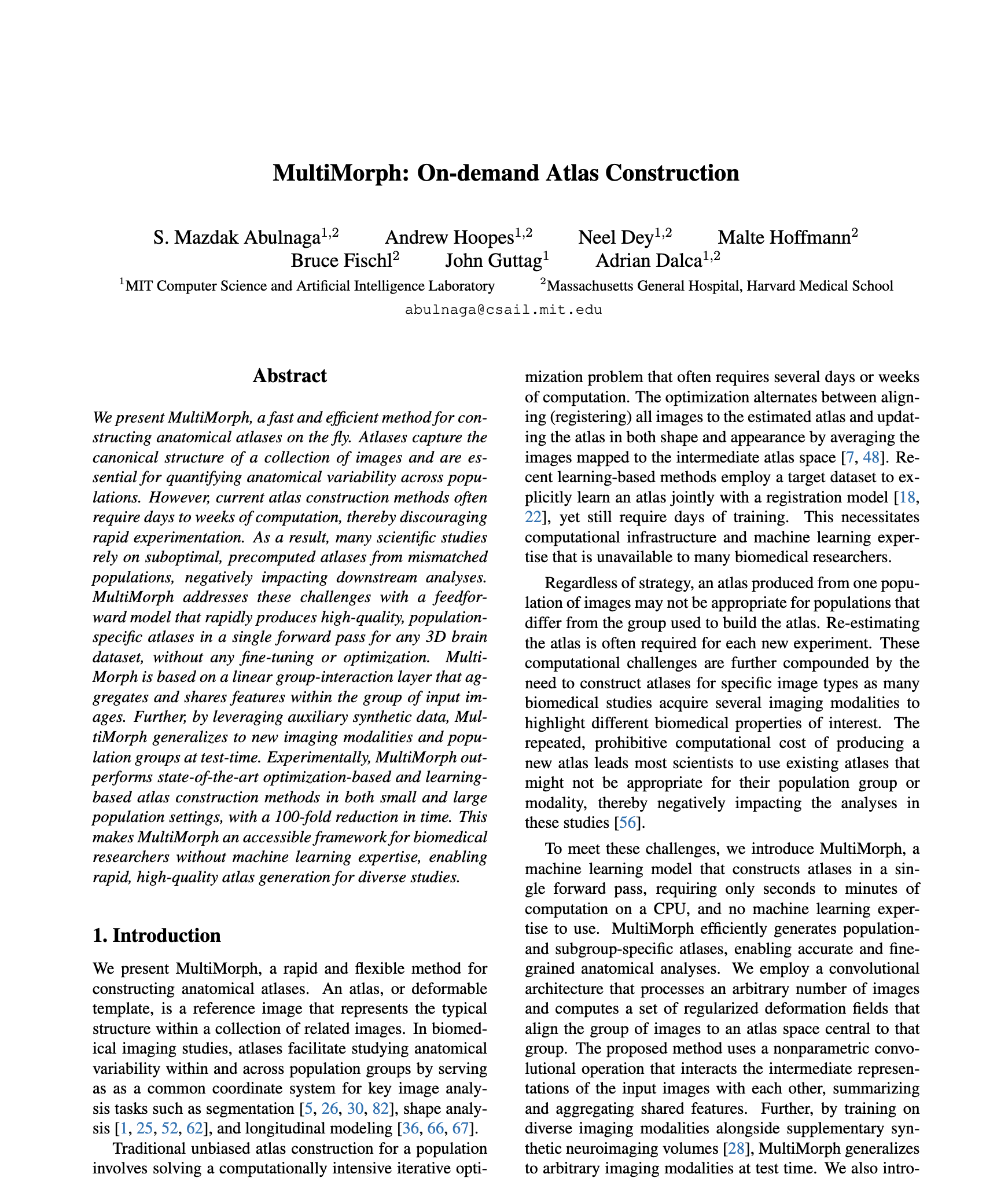

MultiMorph is a model for constructing 3D brain atlases for any population group on the fly. We train a model to learn a group-specific registration to a central atlas space. Key to our model is a group-interaction layer, named GroupBlock, that shares features across a variable number of input images. We train a single model on a mix of real and synthetic data so that it generalizes to new imaging modalities. At inference time, atlases are constructed in a single forward pass of the model.
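The page does not say how the synthetic training data are generated. As a minimal sketch, assuming a label-to-image synthesis scheme in the spirit of SynthSeg/SynthMorph, a synthetic group can be drawn from anatomical label maps by sampling one random intensity per label and adding noise. The functions `synthesize_group` and `sample_training_group`, the shared-contrast-per-group choice, and the `p_synth` mixing rule are all hypothetical.

```python
import numpy as np


def synthesize_group(label_maps, rng, noise_std=0.05):
    """Hypothetical label-to-image synthesis for a group of subjects.

    label_maps: integer array of shape (G, D, H, W), one anatomical label map per subject.
    One random intensity is drawn per label and shared by the whole group, so every
    member of a synthetic group has the same (random) contrast, like a real modality.
    """
    label_ids = np.unique(label_maps)
    means = rng.uniform(0.0, 1.0, size=label_ids.size)
    # Look up each voxel's label in the sorted list of ids and assign its intensity.
    images = means[np.searchsorted(label_ids, label_maps)]
    images = images + rng.normal(0.0, noise_std, size=label_maps.shape)
    # Rescale each image independently to [0, 1].
    spatial = tuple(range(1, images.ndim))
    lo = images.min(axis=spatial, keepdims=True)
    hi = images.max(axis=spatial, keepdims=True)
    return (images - lo) / np.maximum(hi - lo, 1e-8)


def sample_training_group(real_images, label_maps, rng, p_synth=0.5):
    """Return either the real group or a synthetic version of it (hypothetical mixing rule)."""
    if rng.random() < p_synth:
        return synthesize_group(label_maps, rng)
    return real_images


rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=(3, 8, 8, 8))
real = rng.random((3, 8, 8, 8))
group = sample_training_group(real, labels, rng, p_synth=1.0)  # always synthetic here
print(group.shape)  # (3, 8, 8, 8)
```

Sharing the sampled contrast across the group mimics a real dataset acquired with a single modality, while redrawing it for every group exposes the model to many contrasts during training.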

We learn a set of diffeomorphic deformation fields φ to a group-specific, central atlas space. Our model takes as input a group containing a variable number of 3D brain scans and predicts velocity fields to the group-specific atlas space. The predicted velocity fields pass through a centrality layer that makes them bias-free by construction. The model is trained with losses that minimize anatomical and structural variance in the atlas space while encouraging regular deformation fields. We replace standard convolution layers with our GroupBlock layer. We train with a mix of synthetic and real data to produce a single model that generalizes across neuroimaging modalities.
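The page does not spell out the centrality layer or the losses. The minimal sketch below rests on three assumptions: the centrality layer subtracts the group-mean velocity field, which makes the group's mean velocity exactly zero; the atlas is the voxel-wise mean of the warped images; and regularity comes from a squared finite-difference penalty. Integrating velocities into diffeomorphisms (e.g. scaling and squaring) and warping the images are omitted, so `warped` stands in for images already moved to atlas space. All function names are hypothetical.

```python
import numpy as np


def centrality_layer(velocities):
    """Assumed centrality layer: remove the group-mean velocity field.

    velocities: array of shape (G, 3, D, H, W). After subtraction the fields average
    to exactly zero over the group, so the atlas is not biased toward any subject.
    """
    return velocities - velocities.mean(axis=0, keepdims=True)


def atlas_and_variance(warped):
    """Atlas as the voxel-wise group mean of images already warped to atlas space,
    and the mean squared deviation from it (a variance-style loss). warped: (G, D, H, W)."""
    atlas = warped.mean(axis=0)
    variance = ((warped - atlas) ** 2).mean()
    return atlas, variance


def smoothness_penalty(velocities):
    """Mean squared finite difference along each spatial axis (regularity term)."""
    return sum((np.diff(velocities, axis=ax) ** 2).mean() for ax in (2, 3, 4))


rng = np.random.default_rng(0)
v = centrality_layer(rng.normal(size=(4, 3, 8, 8, 8)))
print(np.abs(v.mean(axis=0)).max() < 1e-12)  # True
```

Because the mean is removed inside the network rather than penalized in the loss, centrality holds for every input group without tuning an extra loss weight.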

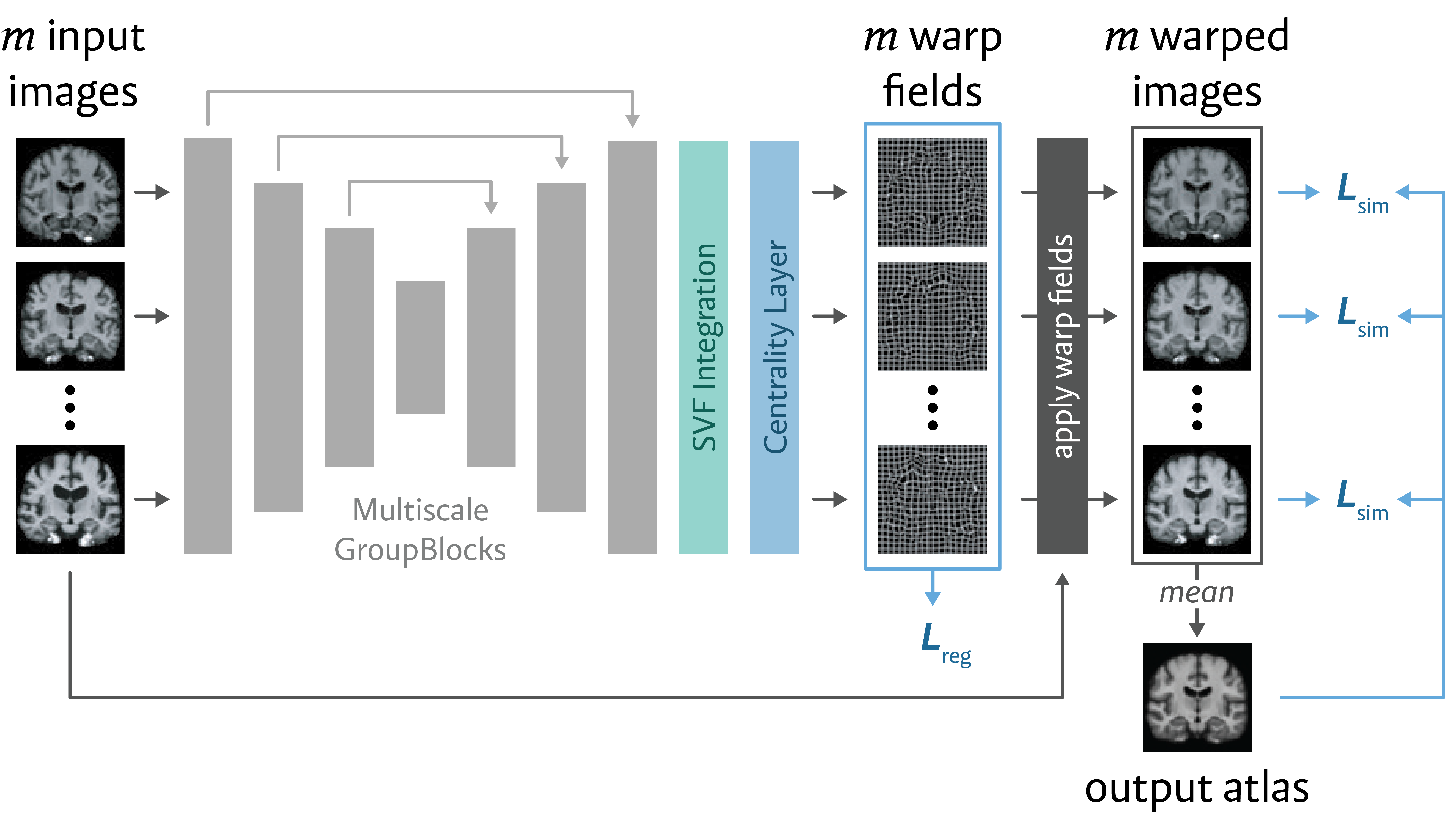

GroupBlock Layer

The GroupBlock layer is a linear feature aggregator that enables information sharing across a variable number of input images. A summary statistic, such as the mean, aggregates features across the group. The aggregated features are then shared with each group element by concatenating them with that element's features, followed by a standard convolution. Feature sharing improves subgroup alignment and group-specific registration.
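A minimal NumPy sketch of the layer as described above: mean over the group, concatenation with each element's features, then a convolution. The naive `conv3d_same` helper, the weight layout `(C_out, 2*C, k, k, k)`, and the function names are illustrative assumptions, not the authors' code.

```python
import numpy as np


def conv3d_same(x, weight, bias):
    """Naive 3D convolution (cross-correlation, as in deep learning) with zero 'same' padding.

    x: (G, C_in, D, H, W); weight: (C_out, C_in, k, k, k); bias: (C_out,).
    """
    k = weight.shape[-1]
    p = k // 2
    G, _, D, H, W = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (p, p), (p, p), (p, p)))
    out = np.zeros((G, weight.shape[0], D, H, W))
    for dz in range(k):
        for dy in range(k):
            for dx in range(k):
                patch = xp[:, :, dz:dz + D, dy:dy + H, dx:dx + W]
                # Mix input channels into output channels at this kernel offset.
                out += np.einsum("oc,gcdhw->godhw", weight[:, :, dz, dy, dx], patch)
    return out + bias[None, :, None, None, None]


def group_block(features, weight, bias):
    """features: (G, C, D, H, W) for a group of G images; G may vary between calls."""
    # 1) Aggregate: mean over the group axis -> (1, C, D, H, W).
    group_summary = features.mean(axis=0, keepdims=True)
    # 2) Share: broadcast the summary to every element, concatenate on channels -> (G, 2C, ...).
    shared = np.concatenate([features, np.broadcast_to(group_summary, features.shape)], axis=1)
    # 3) Standard convolution over the concatenated channels.
    return conv3d_same(shared, weight, bias)


rng = np.random.default_rng(0)
C, C_out, k = 3, 4, 3
weight = rng.normal(size=(C_out, 2 * C, k, k, k))
bias = np.zeros(C_out)
for G in (2, 5):  # the same weights handle any group size
    feats = rng.normal(size=(G, C, 6, 6, 6))
    print(group_block(feats, weight, bias).shape)  # (2, 4, 6, 6, 6) then (5, 4, 6, 6, 6)
```

Because the mean does not depend on the order of the inputs, permuting the group simply permutes the outputs. Without a bias or nonlinearity the block is linear in its input, consistent with describing GroupBlock as a linear aggregator.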

Results

We train a single model using T1w and T2w scans from the OASIS and MBB datasets, together with synthetic data generated from these datasets. We assess our model's ability to construct population and subpopulation atlases, as well as its ability to generalize to unseen imaging modalities and population groups. We compare against the most widely used classical method, ANTs, and two state-of-the-art deep learning methods: AtlasMorph and Aladdin. All baselines were trained directly on our held-out test set.

Our model produces all atlases in a single forward pass on the CPU without any retraining or fine-tuning.

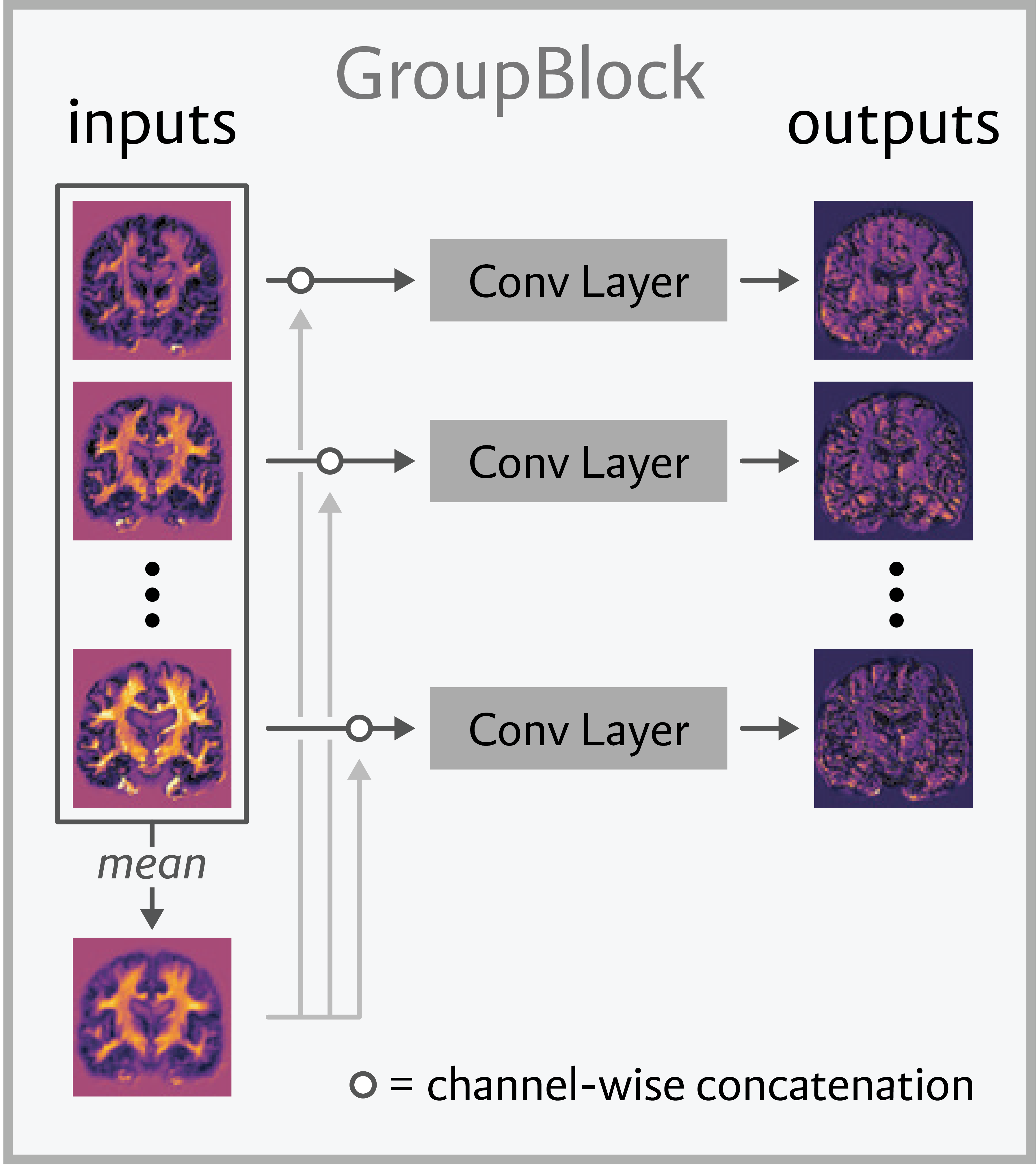

MultiMorph Produces High-Quality Atlases Orders of Magnitude Faster

Our method produces atlases up to three orders of magnitude faster without any retraining or fine-tuning.

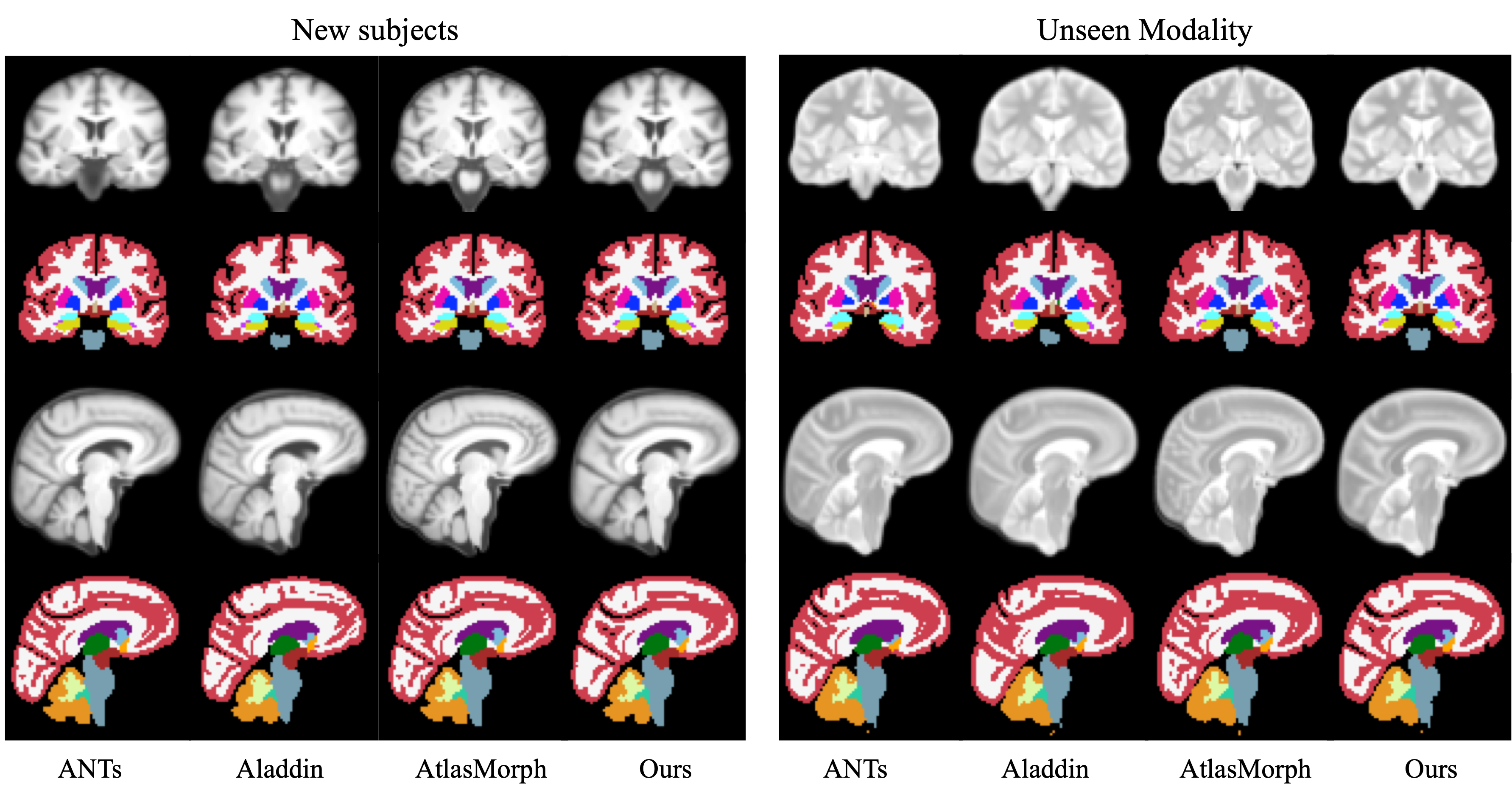

We produce high-quality atlases for both unseen imaging modalities and new subjects. All baselines were trained on these data, whereas our method saw them only at test time.

MultiMorph Enables Rapid and Flexible Construction of Conditional Atlases

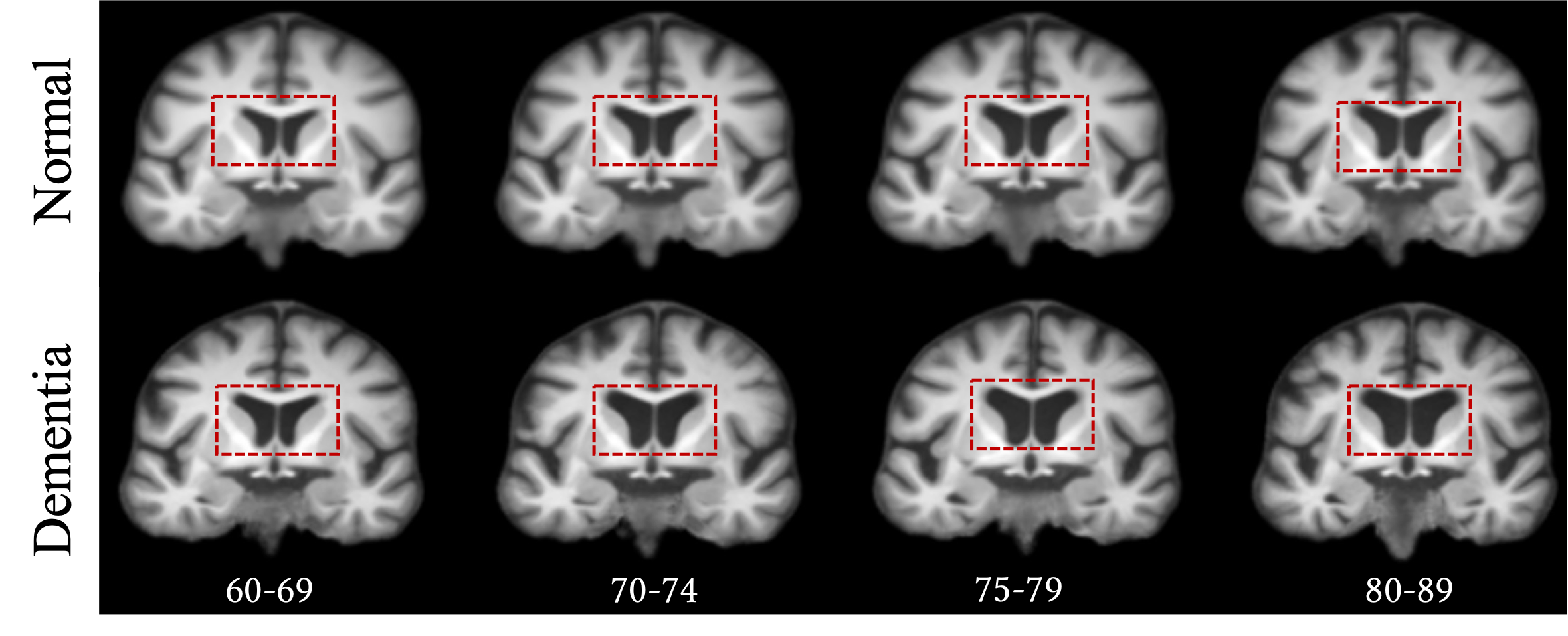

Age- and disease-conditional atlases constructed on the OASIS-3 dataset. Ventricle enlargement (red) is observed with disease and with increasing age.

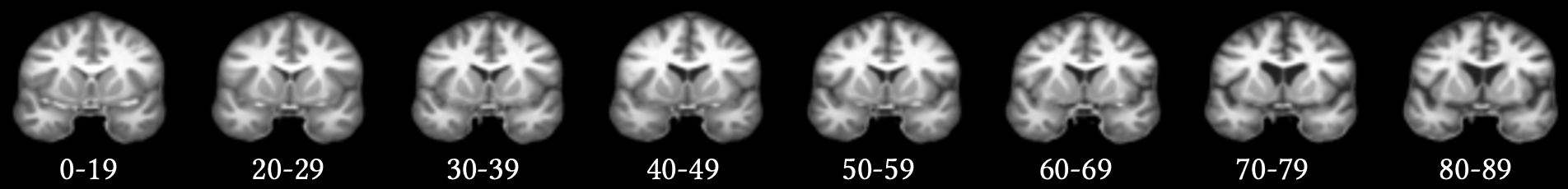

Age conditional atlases from OASIS-1.

Paper

S. Mazdak Abulnaga, Andrew Hoopes, Neel Dey, Malte Hoffmann, Bruce Fischl, John Guttag, Adrian V. Dalca

MultiMorph: On-demand Atlas Construction

Conference on Computer Vision and Pattern Recognition (CVPR), 2025


@inproceedings{abulnaga2025multimorph,
  title={MultiMorph: On-demand Atlas Construction},
  author={Abulnaga, S. Mazdak and Hoopes, Andrew and Dey, Neel and Hoffmann, Malte and Fischl, Bruce and Guttag, John and Dalca, Adrian},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={30906--30917},
  year={2025}
}