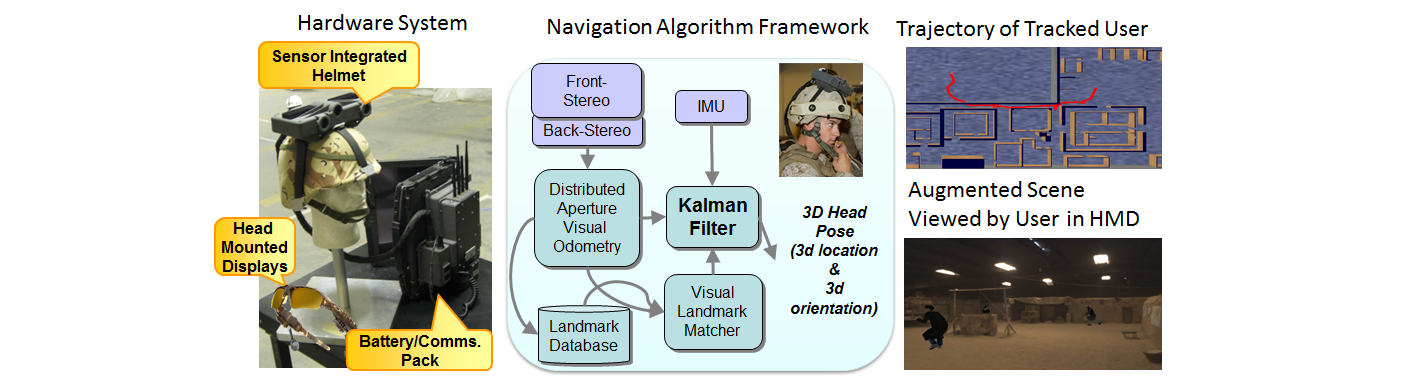

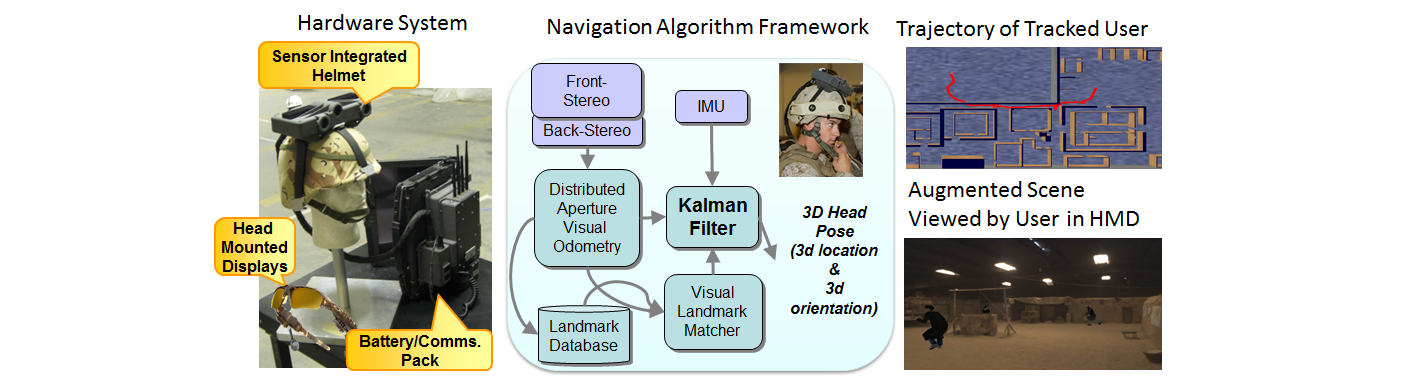

We have developed a real-time, large-area augmented reality training/gaming system using head-mounted displays (HMDs) to fulfill the requirements of the FITE-JCTD project, supported by JFCOM/ONR. Pose tracking (3D head orientation and 3D head location) is achieved solely with sensors mounted on each user: video cameras and an inertial measurement unit (IMU). Users wearing the system can interact, through their HMDs, with virtual actors inserted into real scenes.
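One building block of IMU-based head tracking is integrating gyroscope rates into a 3D orientation. The Python sketch below shows that step under stated assumptions: Hamilton quaternions stored as (w, x, y, z), body-frame angular rates in rad/s held constant over each time step, and the z axis taken as vertical. The helper names (`quat_mul`, `quat_from_rotvec`, `integrate_gyro`, `yaw_deg`) are hypothetical and are not taken from the project's code.

```python
import math


def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def quat_from_rotvec(rx, ry, rz):
    """Unit quaternion for a rotation vector (axis * angle, in radians)."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), rx * s, ry * s, rz * s)


def integrate_gyro(q, omega, dt):
    """Apply one body-frame gyro reading omega (rad/s) over dt seconds."""
    dq = quat_from_rotvec(omega[0] * dt, omega[1] * dt, omega[2] * dt)
    q = quat_mul(q, dq)                      # right-multiply: rotation in body frame
    n = math.sqrt(sum(c * c for c in q))     # renormalize to fight rounding drift
    return tuple(c / n for c in q)


def yaw_deg(q):
    """Heading angle about the (assumed vertical) z axis, in degrees."""
    w, x, y, z = q
    return math.degrees(math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z)))


q = (1.0, 0.0, 0.0, 0.0)                     # identity: head facing forward
for _ in range(100):                         # 1 s of gyro data at 100 Hz
    q = integrate_gyro(q, (0.0, 0.0, math.radians(90.0)), 0.01)
print(round(yaw_deg(q), 3))                  # 90.0
```

Pure gyro integration accumulates error from sensor bias and noise, which is why the camera measurements are needed. Applying a small 3-vector orientation correction through `quat_from_rotvec` is also a common way error-state filters update a nominal quaternion; whether this system uses exactly that convention is an assumption.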

The core component of this system is a novel approach that provides a drift-free, jitter-reduced vision-aided navigation solution. It is based on an error-state Kalman filter that fuses two kinds of measurements: relative (local) measurements from image-based motion estimation through visual odometry, and global measurements from landmark matching against a pre-built visual landmark database. We conducted a number of experiments evaluating different aspects of this Kalman filter framework, and show that our approach provides highly accurate and stable pose estimates both indoors and outdoors over large areas. The results demonstrate both the long-term stability and the overall accuracy of the algorithm, which is intended as a solution to the camera tracking problem in augmented reality applications.
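To illustrate why fusing relative and global measurements removes drift, here is a minimal 1D toy sketch in Python. It is not the authors' filter: the state is only position and velocity, the visual-odometry measurement is simplified to a velocity reading with an assumed 2% scale error, and the landmark measurement is a direct position reading once per second. All names (`ErrorStateKF1D`, `update_relative`, `update_global`, `simulate`) and noise values are hypothetical. The filter follows the error-state pattern: propagate a nominal state with the IMU, estimate an error correction at each measurement, inject it into the nominal state, and reset the error to zero.

```python
import random


def mat_mul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def mat_add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


class ErrorStateKF1D:
    """Toy error-state Kalman filter: nominal state (p, v), error state (dp, dv)."""

    def __init__(self, p0, v0, dt, accel_noise):
        self.p, self.v, self.dt = p0, v0, dt
        self.P = [[0.01, 0.0], [0.0, 0.01]]          # error-state covariance
        self.F = [[1.0, dt], [0.0, 1.0]]             # error-state transition
        q = accel_noise ** 2                         # white-acceleration noise model
        self.Q = [[q * dt ** 4 / 4, q * dt ** 3 / 2],
                  [q * dt ** 3 / 2, q * dt ** 2]]

    def predict(self, accel):
        # Propagate the nominal state with the IMU acceleration reading.
        dt = self.dt
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        # Propagate the error covariance: P = F P F^T + Q.
        self.P = mat_add(mat_mul(mat_mul(self.F, self.P), transpose(self.F)), self.Q)

    def _update(self, H, residual, r):
        # Scalar measurement update of the error state (its prior mean is zero).
        PHt = mat_mul(self.P, transpose([H]))        # 2x1
        s = mat_mul([H], PHt)[0][0] + r              # innovation variance
        K = [PHt[0][0] / s, PHt[1][0] / s]
        # Inject the estimated error into the nominal state; the error resets to zero.
        self.p += K[0] * residual
        self.v += K[1] * residual
        # Covariance update: P = (I - K H) P.
        IKH = [[1.0 - K[0] * H[0], -K[0] * H[1]],
               [-K[1] * H[0], 1.0 - K[1] * H[1]]]
        self.P = mat_mul(IKH, self.P)

    def update_relative(self, vel_meas, r):
        # VO-like measurement, simplified to a velocity reading: constrains motion only.
        self._update([0.0, 1.0], vel_meas - self.v, r)

    def update_global(self, pos_meas, r):
        # Landmark-like measurement: constrains absolute position.
        self._update([1.0, 0.0], pos_meas - self.p, r)


def simulate(use_landmarks, steps=1000, dt=0.1, seed=0):
    """Return the RMS position error along a constant-velocity (1 m/s) trajectory."""
    rng = random.Random(seed)
    kf = ErrorStateKF1D(p0=0.0, v0=1.0, dt=dt, accel_noise=0.5)
    true_p, true_v = 0.0, 1.0
    sq_err = 0.0
    for k in range(1, steps + 1):
        true_p += true_v * dt
        kf.predict(rng.gauss(0.0, 0.5))              # true acceleration is zero
        # Hypothetical VO with a 2% scale error: the source of long-term drift.
        kf.update_relative(1.02 * true_v + rng.gauss(0.0, 0.05), r=0.05 ** 2)
        if use_landmarks and k % 10 == 0:            # landmark fix once per second
            kf.update_global(true_p + rng.gauss(0.0, 0.05), r=0.05 ** 2)
        sq_err += (kf.p - true_p) ** 2
    return (sq_err / steps) ** 0.5


print(simulate(False), simulate(True))  # VO only vs. VO + landmarks
```

With relative measurements alone, the unmodeled scale error integrates into a position error that keeps growing; adding the periodic global fixes keeps it bounded. In 1D with additive errors, the error-state form is algebraically the same as a standard Kalman filter; the distinction pays off for 3D orientation, where the error is carried as a minimal 3-vector rather than a full quaternion.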

Demos

Mobile Augmented Reality

Publications

Taragay Oskiper, Han-Pang Chiu, Zhiwei Zhu, Supun Samarasekera, and Rakesh Kumar, "Stable Vision-Aided Navigation for Large-Area Augmented Reality", IEEE International Conference on Virtual Reality (VR), 2011. [Best Paper Award] pdf, slides

Zhiwei Zhu, Han-Pang Chiu, Taragay Oskiper, Saad Ali, Raia Hadsell, Supun Samarasekera, and Rakesh Kumar, "High-Precision Localization using Visual Landmarks Fused With Range Data", IEEE International Conference on Computer Vision and Pattern Recognition (CVPR), 2011. pdf