I was shown this technique, iteratively reweighted least squares (IRLS), by Anat Levin, who pointed me to the paper "User assisted separation of reflections from a single image using a sparsity prior" by Anat Levin and Yair Weiss as an example of how the method is used. I subsequently "reverse-engineered" the method a bit to get an idea of what it does.

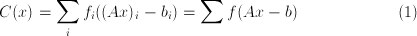

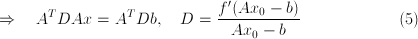

IRLS minimizes the cost function:

$$C(x) = \sum_i f_i\big((Ax-b)_i\big)$$

where my notation is:

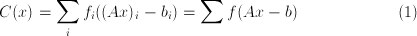

$$f(u) = \begin{bmatrix} f_1(u_1) & f_2(u_2) & \cdots \end{bmatrix}^T$$

where the $f_i$ are scalar potential functions that are smallest at 0.

The intuitive explanation of the method is that each potential function is approximated at each iteration by a parabola centered at the origin whose slope matches that of the potential at the current value of $x$ being considered.
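For a single potential, one parabola with this property can be written explicitly. Here $u_0$ denotes the current value of the argument of $f_i$ (assumed nonzero); also matching the value $f_i(u_0)$ is an extra choice made for this sketch, and it only shifts the cost by a constant:

$$q_i(u) = f_i(u_0) + \frac{f_i'(u_0)}{2u_0}\left(u^2 - u_0^2\right), \qquad q_i'(u) = \frac{f_i'(u_0)}{u_0}\,u$$

so $q_i'(u_0) = f_i'(u_0)$ and $q_i'(0) = 0$. Minimizing a sum of such parabolas is a weighted least-squares problem whose weights are the coefficients $f_i'(u_0)/u_0$.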

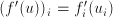

Taking the derivative of the cost function:

$$C'(x) = A^T f'(Ax-b)$$

where the derivative of $f$ is taken element-wise ($f'(u) = \begin{bmatrix} f_1'(u_1) & f_2'(u_2) & \cdots \end{bmatrix}^T$).
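The gradient formula is easy to sanity-check numerically. This is a minimal sketch assuming NumPy; the potential $f_i(u) = \log(1 + u^2)$ (the same for every $i$) and the helper names `cost`, `grad` and `finite_diff_grad` are hypothetical choices for illustration only. It compares $A^T f'(Ax-b)$ against central finite differences of $C(x)$.

```python
import numpy as np


def f(u):
    """Hypothetical potential, smallest at 0: log(1 + u^2), applied element-wise."""
    return np.log1p(u ** 2)


def fprime(u):
    """Element-wise derivative of f."""
    return 2 * u / (1 + u ** 2)


def cost(A, b, x):
    """C(x) = sum_i f((Ax - b)_i)."""
    return np.sum(f(A @ x - b))


def grad(A, b, x):
    """C'(x) = A^T f'(Ax - b)."""
    return A.T @ fprime(A @ x - b)


def finite_diff_grad(A, b, x, h=1e-6):
    """Central differences of C, one coordinate of x at a time."""
    g = np.zeros_like(x)
    for j in range(x.size):
        e = np.zeros_like(x)
        e[j] = h
        g[j] = (cost(A, b, x + e) - cost(A, b, x - e)) / (2 * h)
    return g


rng = np.random.default_rng(0)
A = rng.standard_normal((6, 3))
b = rng.standard_normal(6)
x = rng.standard_normal(3)
print(np.max(np.abs(grad(A, b, x) - finite_diff_grad(A, b, x))))
```

The printed discrepancy should be tiny, at the level of finite-difference error.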

If all the potentials were parabolas, we could do least squares directly; in that case, all the $f_i'$ would be linear. Taking into account that $f_i'(0) = 0$ (each $f_i$ is smallest at 0), we can approximate each function $f_i'$ as a line through the origin based on the current value of $x$ (denoted $x_0$), as follows:

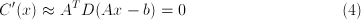

$$C'(x) \approx A^T \left[ (Ax-b) \ast \frac{f'(Ax_0-b)}{Ax_0-b} \right]$$

where $\ast$ denotes element-wise multiplication and the fraction bar denotes element-wise division. Defining a diagonal matrix $D$ for the weights, we want the derivative of the cost function to vanish:

$$D = \operatorname{diag}\left(\frac{f'(Ax_0-b)}{Ax_0-b}\right)$$

$$A^T D (Ax - b) = 0 \quad\Longrightarrow\quad A^T D A\,x = A^T D\,b$$

This is the weighted least-squares problem (the normal equations for minimizing $(Ax-b)^T D (Ax-b)$) that we solve at each iteration to get the new value $x$ from the old value $x_0$.
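The update can be sketched in a few lines of NumPy. This is a minimal sketch, not the implementation the notes are based on: the function name `irls`, the use of one shared potential derivative `fprime` for all $i$, and the clamp `eps` (which keeps $f'(r)/r$ finite when a residual is exactly zero) are all assumptions introduced here.

```python
import numpy as np


def irls(A, b, fprime, x0, n_iter=50, eps=1e-8):
    """Minimize sum_i f((Ax - b)_i) by iteratively reweighted least squares.

    fprime: element-wise derivative f' of the (shared) potential.
    eps: hypothetical clamp so that f'(r)/r stays finite when r is ~0.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        r = A @ x - b                              # residuals at the current x_0
        r = np.where(np.abs(r) < eps, eps, r)      # avoid dividing by zero
        w = fprime(r) / r                          # diagonal of D
        AtD = A.T * w                              # A^T D without forming D
        x = np.linalg.solve(AtD @ A, AtD @ b)      # A^T D A x = A^T D b
    return x


# Usage: f(u) = |u| (so f'(u) = sign(u)) gives least absolute deviations;
# fitting a single constant should then approach the median of b.
A_med = np.ones((5, 1))
b_med = np.array([1.0, 2.0, 3.0, 4.0, 100.0])
x_med = irls(A_med, b_med, np.sign, x0=np.array([22.0]), n_iter=100)
print(x_med)  # should be close to 3, the median of b_med
```

With a quadratic potential $f(u) = u^2/2$ all the weights equal 1, so a single iteration reproduces ordinary least squares; with $|u|$ the large outlier at 100 barely influences the result.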

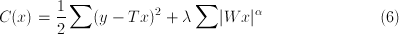

Suppose we wish to minimize the following cost function for $x$:

$$C(x) = \|Tx - y\|^2 + \lambda \sum |Wx|^{\alpha}$$

where we wish to recover an image $x$ that has been transformed by a known transformation $T$ to yield a noisy observation $y$, $\lambda$ is some positive scalar weight, $W$ takes $x$ into a wavelet basis, $\alpha$ is the exponent applied to the wavelet coefficients, and the absolute value and exponentiation are performed element-wise (the sum runs over all entries).

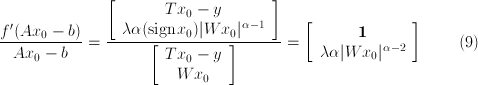

We define $A$, $b$, and $f$ for this problem:

$$A = \begin{bmatrix} T \\ W \end{bmatrix} \qquad b = \begin{bmatrix} y \\ 0 \end{bmatrix}$$

$$f(Ax-b) = f\left(\begin{bmatrix} Tx-y \\ Wx \end{bmatrix}\right) = \begin{bmatrix} (Tx-y)^2 \\ \lambda\,|Wx|^{\alpha} \end{bmatrix}$$

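and calculate the weights:

$$\frac{f'(Ax_0-b)}{Ax_0-b} = \begin{bmatrix} 2\,\mathbf{1} \\ \lambda\alpha\,|Wx_0|^{\alpha-2} \end{bmatrix} = 2\begin{bmatrix} \mathbf{1} \\ \frac{\lambda\alpha}{2}\,|Wx_0|^{\alpha-2} \end{bmatrix}$$

where $\mathbf{1}$ is a vector consisting of the appropriate number of ones. Since scaling $D$ by a constant does not change the solution of $A^T D A\,x = A^T D\,b$, we can drop the factor of 2. Writing out the diagonal matrix for the weights:

$$D = \begin{bmatrix} I & 0 \\ 0 & \Psi \end{bmatrix} \qquad \Psi = \operatorname{diag}\left(\frac{\lambda\alpha}{2}\,|Wx_0|^{\alpha-2}\right)$$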
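The factor-of-2 argument can be checked numerically. This sketch assumes NumPy and the cost $\|Tx-y\|^2 + \lambda\sum|Wx|^\alpha$; the random matrices standing in for $T$ and $W$, and the values of $\lambda$, $\alpha$ and $x_0$, are hypothetical. It computes one update twice: with the generic weights $f'(r)/r$ on the stacked $A$ and $b$, and with the block form $D = \operatorname{diag}(I, \Psi)$.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 5, 7
T = rng.standard_normal((n, n))   # hypothetical stand-in for the known transformation
W = rng.standard_normal((m, n))   # hypothetical stand-in for the wavelet transform
y = rng.standard_normal(n)
lam, alpha = 0.5, 0.8             # hypothetical parameter values
x0 = rng.standard_normal(n)       # plays the role of the previous iterate

# Stacked system: A = [T; W], b = [y; 0]
A = np.vstack([T, W])
b = np.concatenate([y, np.zeros(m)])
r = A @ x0 - b

# Generic weights f'(r)/r for f = [u^2 ; lam*|u|^alpha]
w_data = np.full(n, 2.0)                            # (2u)/u = 2
w_prior = lam * alpha * np.abs(r[n:]) ** (alpha - 2)
w = np.concatenate([w_data, w_prior])
x_generic = np.linalg.solve(A.T @ (w[:, None] * A), A.T @ (w * b))

# Block form: D = [I 0; 0 Psi], Psi = diag(lam*alpha/2 * |W x0|^(alpha-2))
psi = 0.5 * lam * alpha * np.abs(W @ x0) ** (alpha - 2)
x_block = np.linalg.solve(T.T @ T + W.T @ (psi[:, None] * W), T.T @ y)

print(np.max(np.abs(x_generic - x_block)))
```

The two solutions agree up to rounding, because the generic system is exactly twice the block system.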

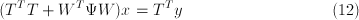

Remember that at each iteration we solve:

$$A^T D A\,x = A^T D\,b$$

where $D$ depends on the previous iterate $x_0$. In this example, the equation becomes:

$$\left(T^T T + W^T \Psi W\right) x = T^T y$$

This works well when implemented.
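An end-to-end sketch of the example follows, under several assumptions: NumPy, an orthonormal Haar matrix (`haar_matrix`, hypothetical) standing in for the wavelet transform $W$, a least-squares starting point, and a hypothetical clamp `eps` so that $|Wx_0|^{\alpha-2}$ stays finite when a coefficient is zero. It is one way to iterate the equation above, not the setup used in the paper.

```python
import numpy as np


def haar_matrix(n):
    """Orthonormal Haar wavelet matrix (n a power of two); a stand-in for W."""
    if n == 1:
        return np.array([[1.0]])
    coarse = haar_matrix(n // 2)
    averages = np.kron(coarse, [1.0, 1.0])           # coarse rows, each entry repeated twice
    details = np.kron(np.eye(n // 2), [1.0, -1.0])   # differences of neighbouring samples
    return np.vstack([averages, details]) / np.sqrt(2.0)


def example_cost(T, y, W, lam, alpha, x):
    """C(x) = ||Tx - y||^2 + lam * sum |Wx|^alpha."""
    return np.sum((T @ x - y) ** 2) + lam * np.sum(np.abs(W @ x) ** alpha)


def irls_restore(T, y, W, lam, alpha, n_iter=50, eps=1e-6):
    """Repeatedly solve (T^T T + W^T Psi W) x = T^T y, updating Psi from x."""
    x = np.linalg.lstsq(T, y, rcond=None)[0]         # hypothetical starting point
    TtT, Tty = T.T @ T, T.T @ y
    for _ in range(n_iter):
        u = np.maximum(np.abs(W @ x), eps)           # clamp: |0|^(alpha-2) is infinite
        psi = 0.5 * lam * alpha * u ** (alpha - 2.0)  # diagonal of Psi
        x = np.linalg.solve(TtT + W.T @ (psi[:, None] * W), Tty)
    return x


# Usage: denoising (T = I) a signal whose Haar coefficients are c.
W = haar_matrix(8)
c = np.array([3.0, -2.0, 0.1, -0.2, 1.5, 0.0, 0.05, -1.0])
y = W.T @ c
I8 = np.eye(8)
x_hat = irls_restore(I8, y, W, lam=1.0, alpha=1.0, n_iter=100)
print(example_cost(I8, y, W, 1.0, 1.0, y), example_cost(I8, y, W, 1.0, 1.0, x_hat))
```

With $T = I$, an orthonormal $W$ and $\alpha = 1$, the problem separates coefficient by coefficient, and its minimizer is soft thresholding of $Wy$ at $\lambda/2$, i.e. $W^T\left(\operatorname{sign}(c)\max(|c| - \lambda/2, 0)\right)$, which makes a convenient check. For a sparsity prior one would typically pick $\alpha < 1$ instead.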

Some tips: