Learning-based Video Motion Magnification

Abstract

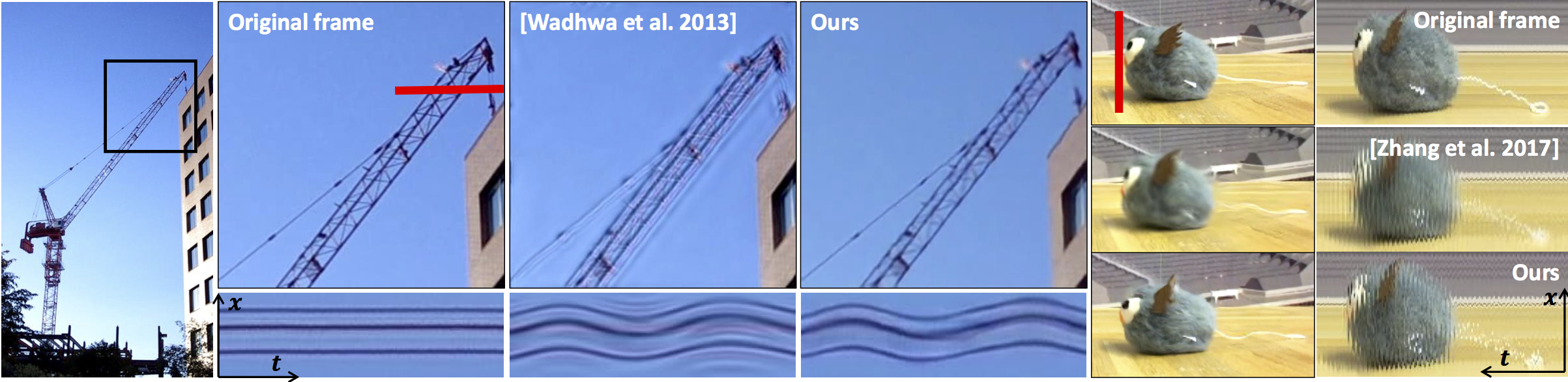

Video motion magnification techniques allow us to see small motions previously invisible to the naked eyes, such as those of vibrating airplane wings, or swaying buildings under the influence of the wind. Because the motion is small, the magnification results are prone to noise or excessive blurring. The state of the art relies on hand-designed filters to extract motion representations that may not be optimal. In this paper, we seek to learn the filters directly from examples using deep convolutional neural networks. To make training tractable, we carefully design a synthetic dataset that captures small motion well, and use two-frame input for training. We show that the learned filters achieve high-quality results on real videos, with less ringing artifacts and better noise characteristics than previous methods. While our model is not trained with temporal filters, we found that the temporal filters can be used with our extracted representations up to a moderate magnification, enabling a frequency-based motion selection. Finally, we analyze the learned filters and show that they behave similarly to the derivative filters used in previous works. Our code, trained model, and datasets will be available online.

*These authors contributed equally.

Reference

- [1] Wadhwa, Neal, et al. "Phase-based video motion processing." ACM Transactions on Graphics (TOG) 32.4 (2013): 80.

- [2] Zhang, Yichao, Silvia L. Pintea, and Jan C. van Gemert. "Video acceleration magnification." Computer Vision and Pattern Recognition (2017).

Video

Publication

T. Oh, R. Jaroensri, C. Kim, M. Elgharib, F. Durand, W. Freeman, W. Matusik "Learning-based Video Motion Magnification" arXiv preprint arXiv:1804.02684 (2018).

Accepted as an oral presentation to the European Conference on Computer Vision 2018.

Acknowledgement

The authors would like to thank Toyota Research Institute, Shell Research, and Qatar Computing Research Institute for their generous support of this project. Changil Kim was supported by a Swiss National Science Foundation fellowship P2EZP2 168785.