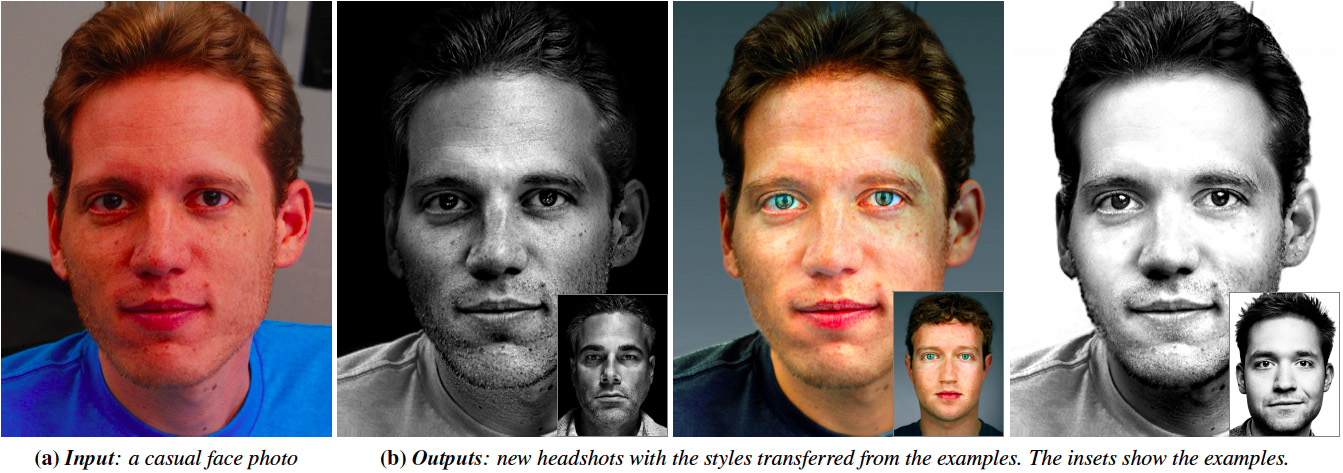

Style Transfer for Headshot Portraits

We transfer the styles from the example portraits in the insets in (b) to the input in (a). Our transfer technique is local and multi-scale, and tailored for headshot portraits. First, we establish a dense correspondence between the input and the example. Then, we match the local statistics at all scales to create the output. Examples from left to right: images courtesy of Kelly Castro, Martin Schoeller, and Platon.

Publication

Abstract

Headshot portraits are a popular subject in photography, but achieving a compelling visual style requires advanced skills that a casual photographer will not have. Further, algorithms that automate or assist the stylization of generic photographs do not perform well on headshots due to the feature-specific, local retouching that a professional photographer typically applies to generate such portraits.

We introduce a technique to transfer the style of an example headshot photo onto a new one. This can allow one to easily reproduce the look of renowned artists. At the core of our approach is a new multiscale technique to robustly transfer the local statistics of an example portrait onto a new one. This technique matches properties such as the local contrast and the overall lighting direction while being tolerant to the unavoidable differences between the faces of two different people. Additionally, because artists sometimes produce entire headshot collections in a common style, we show how to automatically find a good example to use as a reference for a given portrait, enabling style transfer without the user having to search for a suitable example for each input. We demonstrate our approach on data taken in a controlled environment as well as on a large set of photos downloaded from the Internet. We show that we can successfully handle styles by a variety of different artists.
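To make the idea of multiscale local statistics matching concrete, the following is a minimal sketch and not the implementation from the paper. It assumes grayscale floating-point images, assumes the example has already been warped into dense correspondence with the input, and uses a simple band-pass stack with a per-pixel gain computed from local energy. The function names, the blur widths, the regularizer `eps`, and the gain clamping bounds are all illustrative assumptions.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2D array with edge-replicating padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Blur along rows, then along columns; "valid" trims the padding again.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def laplacian_stack(img, levels):
    """Split img into `levels` band-pass layers plus a low-pass residual.

    No downsampling is performed, so the layers sum back to img exactly.
    """
    bands, current = [], img
    for level in range(levels):
        blurred = gaussian_blur(current, 2.0 ** (level + 1))
        bands.append(current - blurred)
        current = blurred
    return bands, current

def transfer_local_statistics(inp, example, levels=3, eps=1e-4,
                              gain_min=0.9, gain_max=2.8):
    """Rescale each band of `inp` so its local energy follows `example`.

    `example` is assumed to be pre-aligned with `inp`. The clamping bounds
    and `eps` are illustrative values, not taken from the text above.
    """
    in_bands, _ = laplacian_stack(inp, levels)
    ex_bands, ex_residual = laplacian_stack(example, levels)
    # Take the coarsest layer from the example to carry its overall lighting.
    out = ex_residual.copy()
    for level, (band_in, band_ex) in enumerate(zip(in_bands, ex_bands)):
        sigma = 2.0 ** (level + 1)
        energy_in = gaussian_blur(band_in ** 2, sigma)
        energy_ex = gaussian_blur(band_ex ** 2, sigma)
        gain = np.sqrt(energy_ex / (energy_in + eps))
        # Clamping keeps the gain from amplifying noise or flattening detail.
        out += band_in * np.clip(gain, gain_min, gain_max)
    return out
```

In this sketch the detail layers come from the input, so the subject's identity is preserved, while the local contrast at each scale and the coarse lighting follow the example. Clamping the gain is one simple way to stay tolerant of regions where the two faces do not match well.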

Video (no audio, best viewed at 1920x1080)

Additional results

See our method applied to 94 images from the Flickr dataset here.

Supplemental materials

Press

Acknowledgement

We thank photographers Kelly Castro, Martin Schoeller, and Platon for allowing us to use their photographs in the paper. We also thank Kelly Castro for discussing with us how he works and for his feedback, and Michael Gharbi and Krzysztof Templin for being our portrait models. We acknowledge funding from Quanta Computer and Adobe.