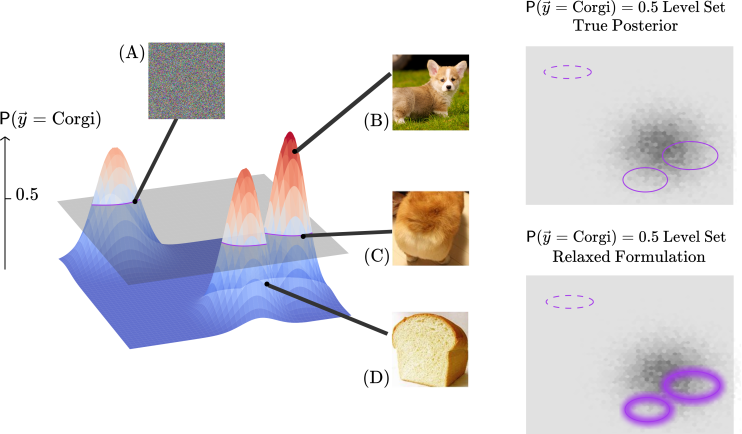

Does my classifier think that this image is a loaf of bread or a Corgi? Post-hoc explanation methods are gaining popularity as tools for interpreting, understanding, and debugging neural networks. Most post-hoc methods explain decisions in response to individual inputs drawn from the test set. However, the test set often fails to include highly confident misclassifications and ambiguous examples. To address this limitation, we introduce Bayes-TrEx, a tool for more flexible model inspection. Bayes-TrEx is a model- and data-generator-agnostic method for creating new, distribution-conforming examples with a known prediction confidence. Bayes-TrEx can be used to find highly confident misclassifications, to visualize class boundaries through ambiguous examples, to understand novel-class extrapolation, and to expose neural network overconfidence. We demonstrate Bayes-TrEx on CLEVR, MNIST, and Fashion-MNIST, and show that, compared to inspecting test set examples, it enables more flexible and holistic model analysis. Our open-source code is included in the supplemental material.
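The abstract does not spell out the sampling procedure. As a rough illustration of the general idea of generating in-distribution inputs at a chosen confidence level, the sketch below frames it as sampling a generator's latent code `z` from a prior, conditioned on the classifier's confidence being close to a target `p*`. Everything here is an assumption for illustration, not the authors' implementation: the identity `generator`, the fixed logistic `classifier_confidence`, the Gaussian relaxation of the constraint with width `sigma`, and the use of plain random-walk Metropolis as the sampler.

```python
import math
import random


def generator(z):
    # Hypothetical generator: identity map from latent space to input space.
    return z


def classifier_confidence(x):
    # Hypothetical binary classifier: fixed logistic model, P(class A | x).
    logit = 3.0 * x[0] - 2.0 * x[1] + 0.5
    # Numerically stable sigmoid.
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)


def log_prior(z):
    # Standard normal prior on the latent code (unnormalized), which keeps
    # generated inputs "distribution-conforming" under this toy generator.
    return -0.5 * sum(v * v for v in z)


def log_likelihood(z, target, sigma):
    # Relaxed confidence constraint: penalize distance between the
    # classifier's confidence and the target with a Gaussian of width sigma.
    p = classifier_confidence(generator(z))
    return -0.5 * ((p - target) / sigma) ** 2


def sample_latents(target, sigma=0.02, n_steps=20000, step=0.1,
                   burn_in=5000, seed=0):
    # Random-walk Metropolis over z targeting prior(z) * likelihood(z).
    rng = random.Random(seed)
    z = [0.0, 0.0]
    log_post = log_prior(z) + log_likelihood(z, target, sigma)
    samples = []
    for t in range(n_steps):
        proposal = [v + rng.gauss(0.0, step) for v in z]
        prop_log_post = (log_prior(proposal)
                         + log_likelihood(proposal, target, sigma))
        # Symmetric proposal, so accept with probability
        # min(1, post(proposal) / post(z)); 1 - random() avoids log(0).
        if math.log(1.0 - rng.random()) < prop_log_post - log_post:
            z, log_post = proposal, prop_log_post
        if t >= burn_in:
            samples.append(z)
    return samples
```

For example, `sample_latents(target=0.5)` yields latents whose generated inputs sit near the toy classifier's decision boundary (ambiguous examples), while a target such as `0.9` yields inputs the classifier labels with roughly 90% confidence. Keeping a proper prior on `z`, rather than simply optimizing confidence, is what keeps samples in the generator's typical region instead of producing adversarial-style outliers.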