Detecting Pulse from Head Motions in Video (CVPR 2013)

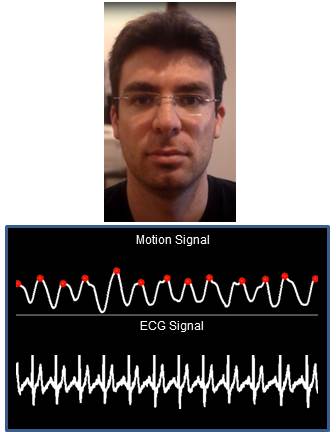

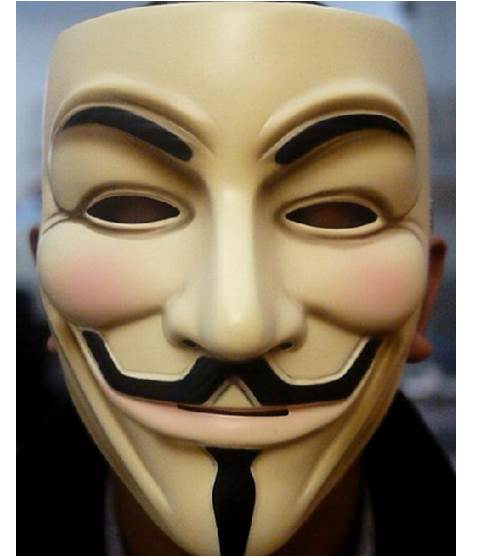

Guha Balakrishnan, Frédo Durand, John Guttag

Examples of videos from which we were able to extract a pulse signal. (a) is a typical view of the face along with an example of our motion signal in comparison to an ECG device. (b) is a subject wearing a mask. (c) is a video of a subject with a significant amount of added white Gaussian noise.

Abstract

We extract heart rate and beat lengths from videos by measuring subtle head motion caused by the Newtonian reaction to the influx of blood at each beat. Our method tracks features on the head and performs principal component analysis (PCA) to decompose their trajectories into a set of component motions. It then chooses the component that best corresponds to heartbeats based on its temporal frequency spectrum. Finally, we analyze the motion projected to this component and identify peaks of the trajectories, which correspond to heartbeats. When evaluated on 18 subjects, our approach reported heart rates nearly identical to an electrocardiogram device. Additionally, we were able to capture clinically relevant information about heart rate variability.
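The steps named in the abstract (PCA on feature trajectories, choosing a component by its frequency spectrum, then peak detection) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes the feature trajectories have already been tracked and are supplied as a `(frames, features)` array of vertical positions. The 0.75 to 5 Hz passband, the crude FFT-mask filter, the "share of power in the strongest bin" periodicity score, and all function names (`bandpass_fft`, `estimate_pulse`, `find_beats`) are assumptions made for illustration. The input data is synthetic.

```python
import numpy as np

def bandpass_fft(x, fs, lo, hi):
    """Crude ideal band-pass: zero FFT bins outside [lo, hi] Hz along axis 0."""
    freqs = np.fft.rfftfreq(x.shape[0], d=1.0 / fs)
    spec = np.fft.rfft(x, axis=0)
    spec[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spec, n=x.shape[0], axis=0)

def estimate_pulse(trajectories, fs, lo=0.75, hi=5.0, n_components=5):
    """Return (1-D pulse signal, rate in beats per minute).

    trajectories: (frames, features) array of tracked feature positions.
    lo, hi and n_components are assumed values, not taken from the abstract.
    """
    x = trajectories - trajectories.mean(axis=0)
    x = bandpass_fft(x, fs, lo, hi)

    # PCA via SVD: columns of u * s are the motion projected on each component.
    u, s, _ = np.linalg.svd(x, full_matrices=False)
    signals = (u * s)[:, :n_components]

    # Assumed selection rule: pick the component whose spectrum is most
    # concentrated in a single frequency bin.
    freqs = np.fft.rfftfreq(x.shape[0], d=1.0 / fs)
    power = np.abs(np.fft.rfft(signals, axis=0)) ** 2
    total = power.sum(axis=0)
    total[total == 0] = 1.0
    best = int(np.argmax(power.max(axis=0) / total))

    f_pulse = freqs[np.argmax(power[:, best])]
    return signals[:, best], 60.0 * f_pulse

def find_beats(signal, fs, max_rate_hz=5.0):
    """Indices of positive samples that are the maximum of their neighborhood.

    The neighborhood half-width is half of the shortest plausible beat period.
    """
    half = max(1, int(fs / max_rate_hz / 2))
    peaks = []
    for i in range(half, len(signal) - half):
        window = signal[i - half : i + half + 1]
        if signal[i] > 0 and signal[i] == window.max():
            peaks.append(i)
    return np.array(peaks)

# Synthetic demo: 20 s at 30 fps, a 1.2 Hz "pulse" shared by 20 features,
# plus a large slow sway (0.2 Hz) and white noise.
rng = np.random.default_rng(0)
fs, n_frames, n_features = 30.0, 600, 20
t = np.arange(n_frames) / fs
weights = rng.uniform(0.5, 1.5, n_features)
trajectories = (
    np.outer(np.sin(2 * np.pi * 1.2 * t), weights)
    + 10.0 * np.sin(2 * np.pi * 0.2 * t)[:, None]
    + 0.1 * rng.standard_normal((n_frames, n_features))
)

pulse_signal, bpm = estimate_pulse(trajectories, fs)
beats = find_beats(pulse_signal, fs)
beat_lengths = np.diff(beats) / fs  # seconds between consecutive beats
print(f"estimated rate: {bpm:.1f} bpm, mean beat length: {beat_lengths.mean():.3f} s")
```

The sign of a principal component is arbitrary, so the detected "peaks" may correspond to either extreme of the head motion. This does not affect the spacing between beats, which is what beat-length analysis uses.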

Download paper (pdf)

Download video
