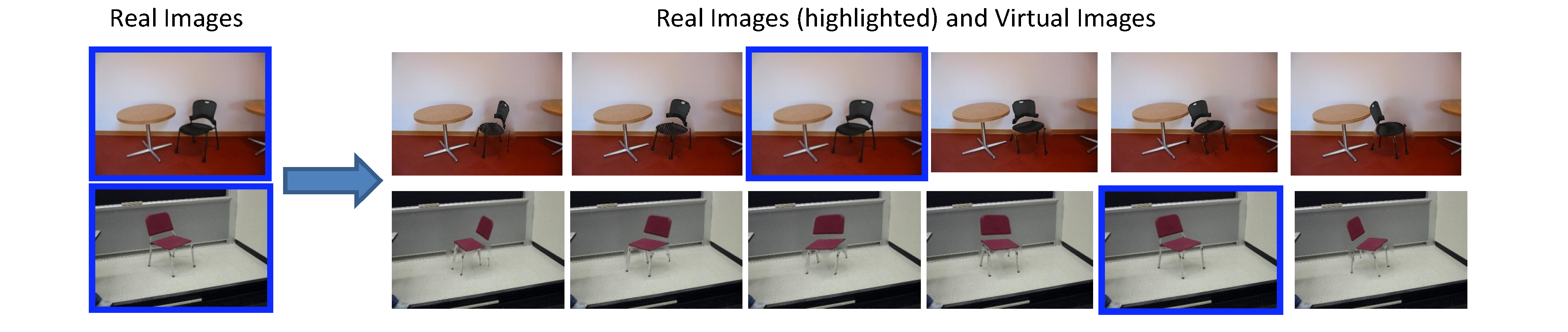

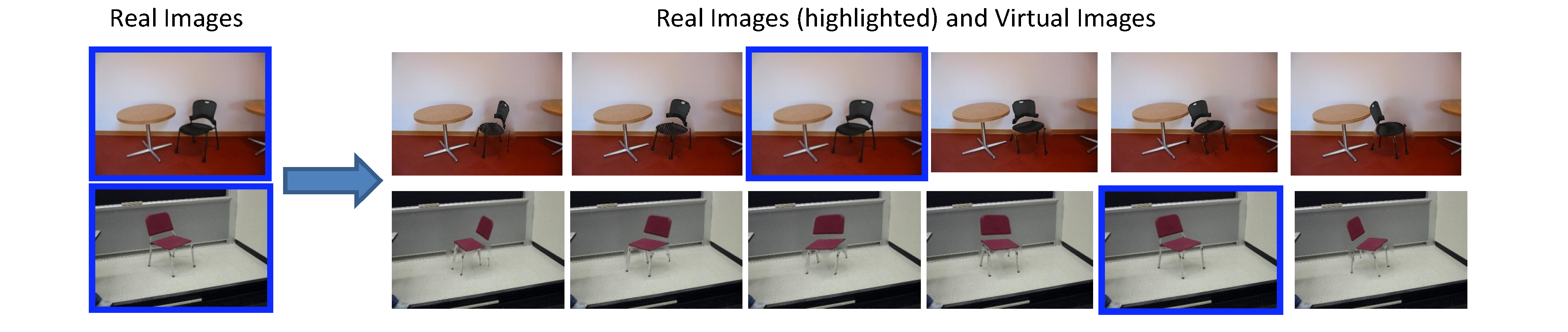

Multi-view object class recognition can be achieved using

existing approaches for single-view object class recognition, by treating

different views as entirely independent classes. This strategy requires a large

amount of training data for many viewpoints, which can be costly to obtain. In

this project, we propose the Potemkin

model, which can be constructed from as few as two views of an object of the

target class, and which can be used to transform images of objects from one

view to several other views, effectively multiplying their value for class

recognition. These transformed objects, which can be easily combined with the

background of the original image to generate a complete image in the new view,

can then be used as virtual training data for any view-dependent

2D recognition system. We show that automatically transformed images

dramatically decrease the data requirements for multi-view object class

recognition.

The Potemkin model of an object class can be viewed as a collection of parts,

which are oriented 3D primitive shapes. There are two different versions of

this model. In our CVPR'07 paper, we propose

the basic Potemkin model, which only uses a single oriented primitive for

learning the transforms from view to view. In our CVIU paper, we extend the

basic Potemkin model to use a basis set of multiple oriented primitives, and to

select among them to represent each of the parts of the target class based on a

pair of initial training images of the class.

Publications

Han-Pang Chiu, Leslie Pack Kaelbling, and Tomas Lozano-Perez,

"Learning to Generate Novel Views of Objects for Class Recognition", Computer Vision and Image Understanding (CVIU), 2009.

pdf

Han-Pang Chiu, Leslie Pack Kaelbling, and Tomas Lozano-Perez, "Virtual Training for Multi-View Object Class Recognition", CVPR, 2007. pdf