"Probabilistic ILP" - Tutorial

Topic

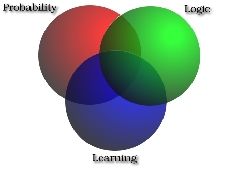

One of the central open questions in data mining, machine learning and artificial intelligence concerns probabilistic inductive logic programming, sometimes also called statistical relational learning or probabilistic logic learning: the integration of relational or logical representations and probabilistic reasoning mechanisms with machine learning and data mining principles.

In the past few years, this question has received a lot of attention. Various approaches have been developed in several related but distinct areas (including machine learning, statistics, inductive logic programming, databases, and reasoning under uncertainty). Most researchers, however, have been exposed to only one or two of the constituents underlying probabilistic inductive logic programming.

The tutorial will survey those developments that lie at the intersection of logical (or relational) representations, probabilistic reasoning and learning.

More precisely, we start from inductive logic programming (ILP) and show

- how ILP formalisms, settings and techniques [MD94] can be extended to deal with probabilistic issues, and
- how these learning settings for probabilistic inductive logic programming cover state-of-the-art statistical relational learning approaches [DK03].

We hope that this will allow attendees to appreciate the differences and commonalities between the various approaches, in particular from a learning perspective, as well as those between probabilistic ILP and its underlying constituents, ILP and statistical learning.

Before describing the tutorial in more detail, let us specify what we mean by probabilistic inductive logic programming. The term "probabilistic" in our context refers to the use of probabilistic representations and reasoning mechanisms grounded in probability theory, such as Bayesian networks, hidden Markov models, and stochastic grammars. The term "logic programming" refers to first-order logical and relational representations such as those studied within the field of computational logic. The primary advantage of using first-order logical or relational representations is that they allow one to elegantly represent complex situations involving a variety of objects as well as the relations among them.

The term "inductive" refers to deriving the different aspects of the probabilistic logic from data.

Probabilistic inductive logic programming thus aims to combine its three underlying constituents: learning and probabilistic reasoning within first-order logical and relational representations.

Frameworks developed so far include

- David Poole's probabilistic Horn abduction (PHA) [Poo93],
- Ngo and Haddawy's probabilistic-logic programs (PLPs) [NH97],
- Muggleton's stochastic logic programs (SLPs) [Mug96,Cuss00],
- Sato's PRISM [Sat95,SK01],
- Koller et al.'s probabilistic relational models (PRMs) [FGKP99,Pfe00,Get01],
- Jaeger's relational Bayesian networks (RBNs) [Jae97],
- Kersting and De Raedt's Bayesian logic programs (BLPs) [KD01a,KD01b],
- Getoor's statistical relational models (SRMs) [Get01],
- Anderson et al.'s and Kersting et al.'s relational Markov models [ADW02,KRKD03],
- Sanghai et al.'s dynamic PRMs (DPRMs) [DW03], and many more.

The recent interest in probabilistic logic learning may be explained by the steadily growing body of work addressing the pairwise intersections of its constituents, and by the fact that it diverges from traditional approaches, which assume either that data instances are structurally identical and statistically independent, or that relationships are deterministic. It is therefore not surprising that several workshops (SRL-00 at AAAI, SRL-03 at IJCAI, SRL-04 at ICML, the 19th Machine Intelligence workshop on "Reasoning and Uncertainty: methods and applications", and a recent Dagstuhl seminar on "Probabilistic, Logical and Relational Learning - Towards a Synthesis"), research projects (EELD, APrIL I, APrIL II, ...), and invited or honorary talks (such as those by Daphne Koller at IJCAI-01 and ICML-03/KDD-03 and by Foster Provost at ICML-03) have addressed this new challenge for machine learning.
