Recognizing materials using perceptually inspired features

Lavanya Sharan¹, Ce Liu¹,², Ruth Rosenholtz¹, Edward H. Adelson¹

¹ Massachusetts Institute of Technology, ² Microsoft Research New England

| [Flickr Material Database] | [IJCV paper] | [CVPR paper] |

Our world consists not only of objects and scenes but also of materials of various kinds. Being able to recognize the materials that surround us (e.g., plastic, glass, concrete) is important for humans as well as for computer vision systems. Unfortunately, materials have received little attention in the visual recognition literature, and few computer vision systems have been designed specifically to recognize materials.

We presented a system for recognizing high-level material categories (e.g., plastic) from single images at CVPR'10. In the IJCV'13 paper, we present a more complete view of our contributions. We show how the low- and mid-level image features we proposed were inspired by studies of human material recognition, and how combining these features in an SVM framework improved recognition performance relative to our CVPR'10 results. Our system outperformed state-of-the-art recognition systems of its time (Varma and Zisserman, 2009; Hu et al., 2011) on the challenging Flickr Material Database (Sharan et al., 2009, 2014), achieving 57.1% accuracy (chance = 10%). When our recognition system is compared directly to human observers (84.9%), humans outperform it easily. However, when we account for the spatially local nature of our image features, we find that our system rivals human performance.

This webpage summarizes our key contributions. Please refer to the journal paper for details. This work was initially presented at CVPR'10 (poster), VSS'10 (poster), and ICCP'10 (poster).

Figure 1: Material category recognition is distinct from reflectance estimation, texture recognition, and object category recognition. (Top row) Surfaces made of different materials can exhibit similar reflectance properties. These translucent surfaces are made of (l to r): plastic, glass, and wax. (Middle row) Surfaces with similar texture patterns can be made of different materials. These objects share the same checkerboard patterns, but they are made of (l to r): fabric, plastic, and paper. (Bottom row) Objects that belong to the same category can be made of different materials. These vehicles are made of (l to r): metal, plastic, and wood. [image credit]

One of our key contributions has been to define the problem of recognizing high-level material categories in images as distinct from the previously studied problems of reflectance estimation (Nicodemus 1965; Marschner et al. 1999, 2005; Debevec et al. 2000; Matusik et al. 2000, etc.), texture recognition (Dana & Nayar 1998; Dana et al. 1999; Caputo et al. 2005, 2007; Varma & Zisserman 2005, 2009), and object recognition (Belongie et al. 2002; Dalal & Triggs 2005, etc.). Consider Figure 1. Surfaces that belong to different material categories can exhibit similar reflectance properties (e.g., translucent), possess similar texture appearance (e.g., checkerboard), and belong to the same object category (e.g., cars). Therefore, merely recognizing reflectance, texture, or object properties does not guarantee successful material recognition.

We used the Flickr Material Database (FMD), which we had originally developed to test human material recognition (Sharan et al., 2009, 2014), to set up the problem. This database consists of 1000 images belonging to 10 common material categories, and the sheer diversity of appearances within each category makes FMD a challenging benchmark for material recognition. We formulate material recognition as the task of classifying a given FMD image into one of the 10 FMD categories: fabric, foliage, glass, leather, metal, paper, plastic, stone, water, or wood.

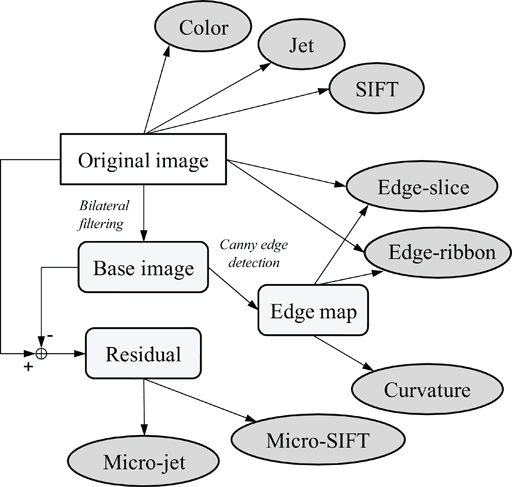

Figure 2: As described in the paper, we generated eight types of image features, shown here in ellipses: color, jet, SIFT, micro-jet, micro-SIFT, curvature, edge-slice, and edge-ribbon.

We used a variety of low- and mid-level image features based on what we know about the human perception of materials, the physics of image formation, and successful recognition systems in computer vision. Specifically, we used four groups of image features, each group designed to measure a different aspect of material appearance: color and texture, micro-texture, outline shape, and reflectance. Our features include standard features borrowed from the fields of object and texture recognition (SIFT, Lowe 2004) as well as new ones developed specifically for material recognition (edge-slice and edge-ribbon).

As shown in Figure 2, our features are derived from the original image, a base image that excludes pixel variations at fine spatial scales, a residual image that only includes pixel variations at fine spatial scales, and an edge map that retains the strong edges of the original image. Features computed on these different images capture different types of information. For example, curvature, which is derived from the edge map, captures information about object shape, an important cue for human material perception. Meanwhile, the micro-jet and micro-SIFT features, which are derived from the residual image, capture important details about the surface micro-structure.
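The split into a base image, a residual image, and an edge map can be illustrated with a minimal sketch. This is not the pipeline from the paper: the mean filter, the thresholded gradient, and the names `box_blur`, `decompose`, `radius`, and `edge_threshold` are all assumptions chosen to keep the example dependency-free. Computing the edge map on the base image rather than on the original is likewise an assumption. The only property taken from the text is that the base image drops fine-scale variation, the residual keeps only that variation, and the edge map marks strong edges.

```python
def box_blur(image, radius):
    """Smooth a 2-D grayscale image (list of rows) with a mean filter.

    Stand-in smoothing filter (assumption); windows are clipped at the border.
    """
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            total = sum(image[j][i] for j in ys for i in xs)
            row.append(total / (len(ys) * len(xs)))
        blurred.append(row)
    return blurred


def decompose(image, radius=1, edge_threshold=0.25):
    """Return (base, residual, edges) for a 2-D grayscale image."""
    # Base image: the original with fine-scale pixel variations smoothed away.
    base = box_blur(image, radius)
    # Residual image: only the fine-scale variations, so base + residual = image.
    residual = [
        [pixel - smooth for pixel, smooth in zip(image_row, base_row)]
        for image_row, base_row in zip(image, base)
    ]
    # Edge map: thresholded gradient magnitude of the base image (assumption).
    height, width = len(base), len(base[0])
    edges = []
    for y in range(height):
        row = []
        for x in range(width):
            gx = base[y][min(x + 1, width - 1)] - base[y][max(x - 1, 0)]
            gy = base[min(y + 1, height - 1)][x] - base[max(y - 1, 0)][x]
            row.append((gx * gx + gy * gy) ** 0.5 > edge_threshold)
        edges.append(row)
    return base, residual, edges
```

In this sketch, features such as curvature would be computed from `edges`, while micro-texture features would be computed from `residual`.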

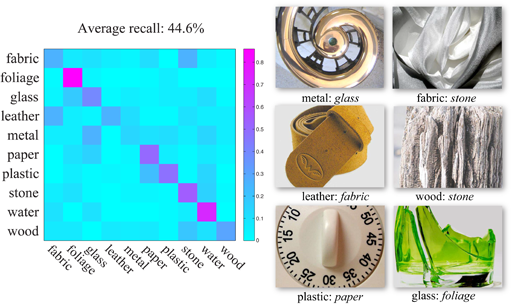

We evaluated our material recognition system using FMD in several ways:

- We compared two frameworks for combining image features: Latent Dirichlet Allocation or LDA (Blei et al. 2003) and SVM (Burges, 1998). Our SVM-based system (57.1%) outperformed our LDA-based system (42%).

- We evaluated various combinations of image features so as to identify the best-performing ones. SIFT + color + edge-slice / edge-ribbon was often the most successful combination for our LDA-based system, whereas all eight features together formed the most successful combination for our SVM-based system.

- We compared the performance of our system to a former state-of-the-art recognition system (Varma & Zisserman, 2009). Varma & Zisserman (VZ)'s 3-D texture recognition system was optimized for the CURET database (Dana et al. 1999), and it performs nearest neighbor classification in a low-dimensional feature space derived from small image patches. VZ's system achieves 23.8% accuracy on FMD. When the VZ classifier is run using various combinations of our image features, it achieves a maximum accuracy of 37.4%. Therefore, our systems outperform VZ.

- We compared the performance of our SVM-based system to that of human observers. While humans easily outperform our system on the original FMD images (84.9%), when they are presented with globally scrambled but locally preserved FMD images, their performance (46.9%) is similar to that of our system run on the same scrambled images (42.6%). This suggests that our SVM-based system can utilize local image information nearly as effectively as humans. Please refer to the paper for the details of this analysis.
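To make "globally scrambled but locally preserved" concrete, here is a minimal sketch that shuffles non-overlapping square patches of an image. This is an illustration only: the scrambling procedure actually used in the study is described in the paper and may differ, and the function name `scramble_patches` and the parameters `patch_size` and `seed` are hypothetical.

```python
import random


def scramble_patches(image, patch_size, seed=0):
    """Shuffle non-overlapping square patches of a 2-D image (list of rows).

    Pixels inside each patch keep their arrangement (local structure is
    preserved) while the patch positions are permuted (global layout is lost).
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of patch_size")
    rows, cols = height // patch_size, width // patch_size

    # Cut the image into patches, stored in row-major order.
    patches = [
        [
            image[r * patch_size + dy][c * patch_size:(c + 1) * patch_size]
            for dy in range(patch_size)
        ]
        for r in range(rows)
        for c in range(cols)
    ]

    # Permute the patch order with a seeded generator for reproducibility.
    random.Random(seed).shuffle(patches)

    # Reassemble the shuffled patches into a full image.
    scrambled = []
    for r in range(rows):
        for dy in range(patch_size):
            line = []
            for c in range(cols):
                line.extend(patches[r * cols + c][dy])
            scrambled.append(line)
    return scrambled
```

A classifier that relies only on spatially local features should be affected much less by this kind of scrambling than an observer who also uses global shape and context, which is the comparison the bullet above describes.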

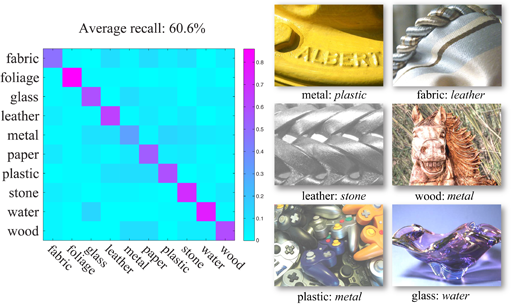

Figure 3: Confusion matrices corresponding to our (left panel) LDA-based and (right panel) SVM-based material category recognition systems. The LDA-based system uses color, SIFT, and edge-slice as features. The SVM-based system uses all eight features. For each confusion matrix, cell (i, j) denotes the probability of category i being classified as category j. Next to each confusion matrix, we present examples of misclassification. Label X: Y means that a surface made of X was misclassified as being made of Y.
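A confusion matrix with the convention used in Figure 3, where cell (i, j) is the probability of category i being classified as category j, can be computed by row-normalizing the counts. The sketch below is a generic illustration with hypothetical names (`confusion_matrix`, `mean_diagonal`) and made-up toy labels. It is not the evaluation code behind the figure.

```python
def confusion_matrix(true_labels, predicted_labels, categories):
    """Row-normalized confusion matrix.

    Entry [i][j] is the fraction of samples of category i that were
    classified as category j, so each non-empty row sums to 1.
    """
    index = {name: k for k, name in enumerate(categories)}
    n = len(categories)
    counts = [[0] * n for _ in range(n)]
    for truth, prediction in zip(true_labels, predicted_labels):
        counts[index[truth]][index[prediction]] += 1

    matrix = []
    for row in counts:
        total = sum(row)
        # Rows with no samples are left as zeros to avoid dividing by zero.
        matrix.append([count / total if total else 0.0 for count in row])
    return matrix


def mean_diagonal(matrix):
    """Average per-category recognition rate (mean of the diagonal)."""
    return sum(matrix[k][k] for k in range(len(matrix))) / len(matrix)


# Toy usage with two categories and invented predictions.
categories = ["glass", "plastic"]
truth = ["glass", "glass", "plastic", "plastic"]
predicted = ["glass", "plastic", "plastic", "plastic"]
matrix = confusion_matrix(truth, predicted, categories)
```

When every category contributes the same number of test images, the mean of the diagonal equals the overall accuracy.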

To cite our material recognition work, please use:

C. Liu, L. Sharan, E. H. Adelson, and R. Rosenholtz, "Exploring features in a Bayesian framework for material recognition", in Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 239-246, 2010 [BibTex]

L. Sharan, C. Liu, R. Rosenholtz, and E. H. Adelson, "Recognizing materials using perceptually inspired features", International Journal of Computer Vision, vol. 108, no. 3, pp. 348-371, 2013 [BibTex]

To cite the Flickr Material Database, please use:

L. Sharan, R. Rosenholtz, and E. H. Adelson, "Accuracy and speed of material categorization in real-world images", Journal of Vision, vol. 14, no. 9, article 12, 2014 [BibTex]

This research was supported by NIH grants R01-EY019262 and R21-EY019741, and it was completed when L. S. was at Disney Research, Pittsburgh.