"Vision-based Reacquisition for Task-level Control"

Matthew Walter

Postdoctoral Associate

Computer Science and Artificial Intelligence Laboratory

Massachusetts Institute of Technology

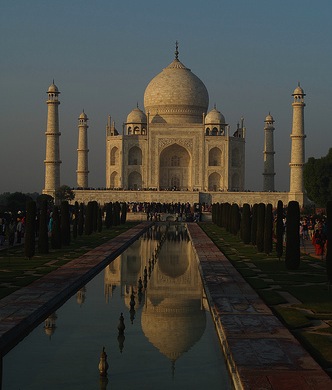

This page documents the presentation of our paper on vision-based object reacquisition at the 2010 ISER conference in New Delhi, India. The presentation describes our technique for detecting instances of specific objects in an environment by generating a diverse appearance model that captures the effects of lighting, viewpoint, and contextual changes. The work and paper are the result of a collaboration with Seth Teller at MIT and with Matthew Antone and Yuli Friedman at BAE Systems.

Walter, M.R., Friedman, Y., Antone, M., and Teller, S., Vision-based Reacquisition for Task-level Control. Proceedings of the International Symposium on Experimental Robotics (ISER), New Delhi, India, December 2010.

[bibtex] [pdf]

Abstract

We describe a vision-based algorithm that enables a robot to “reacquire” objects previously indicated by a human user through simple image-based stylus gestures. By automatically generating a multiple-view appearance model for each object, the method can reacquire the object and reconstitute the user's segmentation hints even after the robot has moved long distances or significant time has elapsed since the gesture. We demonstrate that this capability enables novel command and control mechanisms: after a human gives the robot a “guided tour” of object names and locations in the environment, he can dispatch the robot to fetch any particular object simply by stating its name. We implement the object reacquisition algorithm on an outdoor mobile manipulation platform and evaluate its performance under challenging conditions that include lighting and viewpoint variation, clutter, and object relocation.
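To make the idea of a multiple-view appearance model concrete, the following is a minimal sketch, not the implementation from the paper. It assumes each view of an object is stored as a list of feature descriptors (plain numeric tuples here) and that reacquisition means finding a stored view with enough nearby descriptors in the new image. The class `AppearanceModel`, the function `reacquire`, and the thresholds `max_dist` and `min_matches` are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from math import dist
from typing import List, Tuple

Descriptor = Tuple[float, ...]  # toy stand-in for a real image feature descriptor


@dataclass
class AppearanceModel:
    """Hypothetical multi-view model: one descriptor list per stored view."""
    name: str
    views: List[List[Descriptor]] = field(default_factory=list)

    def add_view(self, descriptors: List[Descriptor]) -> None:
        # Store a new view, e.g. one captured under different lighting or aspect.
        self.views.append(list(descriptors))

    def best_match(self, descriptors: List[Descriptor],
                   max_dist: float = 0.5) -> Tuple[int, int]:
        """Return (view_index, match_count) for the stored view with most matches.

        A query descriptor counts as matched if any descriptor in the view lies
        within max_dist (Euclidean). Returns (-1, 0) when nothing matches.
        """
        best = (-1, 0)
        for i, view in enumerate(self.views):
            count = sum(
                1 for d in descriptors
                if any(dist(d, v) <= max_dist for v in view)
            )
            if count > best[1]:
                best = (i, count)
        return best


def reacquire(model: AppearanceModel, descriptors: List[Descriptor],
              min_matches: int = 2, max_dist: float = 0.5) -> bool:
    """Declare the object reacquired if some view has at least min_matches matches."""
    _, count = model.best_match(descriptors, max_dist)
    return count >= min_matches


model = AppearanceModel("pallet")
model.add_view([(0.0, 0.0), (1.0, 1.0)])              # view from the initial gesture
model.add_view([(5.0, 5.0), (6.0, 6.0), (7.0, 7.0)])  # view added later

query = [(5.1, 5.0), (6.0, 6.1)]  # descriptors from a new camera image
print(model.best_match(query), reacquire(model, query))
```

Storing several views rather than one is what lets a match succeed when the current image resembles a later view more than the original gesture image. A real system would also need geometric verification and a policy for when to add views, both omitted here.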

Slides

Media

(2010_waverly_reacquisition)

[mp4 (h264, 8MB)]

The user first segments four objects in the scene by circling their location in a single camera image. As the robot moves through the outdoor environment, the algorithm locates and segments the objects within each new image. The method allows the robot to reacquire the objects despite changes in view aspect and scale and after losing sight of the objects.

(2010_06_14_tour)

[mp4 (h264, 8MB)]

The user teaches the object to the robot by circling it and speaking its name. The method then automatically locates the object in subsequent images and builds an appearance-based model that captures the object. In this example, the tour involves images captured by a right-facing camera.

(2010_06_14_reacquisition_a)

[mp4 (h264, 8MB)]

After giving the robot a tour, the user can ask it to find and retrieve the object simply by speaking its name. This video shows the algorithm reacquiring the object: it locates and segments the object, effectively reconstituting the user's gesture. Note that the tour was given with one camera while the object is reacquired in another, demonstrating the sharing of models across cameras.

(2010_06_14_reacquisition_b)

[mp4 (h264, 38MB)]

A demonstration of the ability to reacquire objects despite changes in context. In this case, the original object is in a new location, yet as the robot approaches, the algorithm recognizes and segments it within the stream of camera images.

(2010_tbh_mit_wheelchair)

[mp4 (h264, 12MB)]

Shared situational awareness enables the user and robot to share a common world model and, more importantly, enables the robot to understand the user's model of the world. This video shows a user teaching locations within a building to a robotic wheelchair. This establishes a common world model that grounds common names to locations in the robot's map of the environment. The user can then ask the robot to take him to one of these named locations.

(2010_06_truck_dropoff)

[mp4 (h264, 9MB)]

A video that shows one of the autonomous tasks that the forklift executes: placing a pallet onto a truck, which the user commands by circling the desired location in an image. The inset depicts the robot's estimate of the truck's position and orientation, which are initially unknown.

(2010_06_guided_tour)

[mp4 (h264, 8MB)]

As the robot is manually driven through the warehouse, a supervisor gives it a tour of objects by circling their locations in the robot's right-facing camera images (inset) and speaking their names.

(2010_06_reacquire_pickup)

[mp4 (h264, 22MB)]

As a consequence of the tour, the robot formulates a model of the environment that binds user-provided object names with the robot's representation of each object's appearance. This permits the user to command the robot to pick up an object by referring to it by name. For example, “Pick up the generator and take it to the truck.” Upon grounding the object's name to its appearance model, the robot searches for the object within one of its camera streams, locates it in the environment, and picks it up.
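The binding of spoken names to appearance models can be pictured as a simple lookup table. The sketch below is an illustration under stated assumptions, not the system's actual interface: `WorldModel`, `teach`, and `ground` are hypothetical names, appearance models are represented by placeholder strings, and grounding is done by naive substring matching on the command text rather than by any real speech or language pipeline.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class WorldModel:
    """Hypothetical store that binds taught names to appearance models."""
    objects: Dict[str, Any] = field(default_factory=dict)

    def teach(self, name: str, appearance_model: Any) -> None:
        # Called during the tour: the user circles an object and speaks its name.
        self.objects[name.lower()] = appearance_model

    def ground(self, command: str) -> List[Tuple[str, Any]]:
        """Return (name, model) pairs for taught names found in the command,
        ordered by where each name first appears in the text."""
        text = command.lower()
        found = [(text.find(name), name) for name in self.objects if name in text]
        return [(name, self.objects[name]) for _, name in sorted(found)]


world = WorldModel()
world.teach("generator", "appearance-model-A")  # placeholder for a real model
world.teach("truck", "appearance-model-B")

for name, model in world.ground("Pick up the generator and take it to the truck."):
    print(name, model)
```

Once a name resolves to a model, the robot can run reacquisition for that model against its camera streams. A name that was never taught simply yields no grounding, which a real system would need to report back to the user.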

(2010_06_reacquisition)

[mp4 (h264, 25MB)]

A video showing the robot reacquiring several objects in the scene across different viewing angles and object scales.