Presented at IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Alaska

![]()

![]()

We introduce the patch transform, where an image is broken into non-overlapping patches, and modifications or constraints are applied in the "patch domain". A modified image is then reconstructed from the patches, subject to those constraints. When no constraints are given, the reconstruction problem reduces to solving a jigsaw puzzle. Constraints the user may specify include the spatial locations of patches, the size of the output image, or the pool of patches from which an image is reconstructed. We define terms in a Markov network to specify a good image reconstruction from patches: neighboring patches must fit to form a plausible image, and each patch should be used only once. We find an approximate solution to the Markov network using loopy belief propagation, introducing an approximation to handle the combinatorially difficult patch exclusion constraint. The resulting image reconstructions show the original image, modified to respect the user's changes. We apply the patch transform to various image editing tasks and show that the algorithm performs well on real world images.

![]()

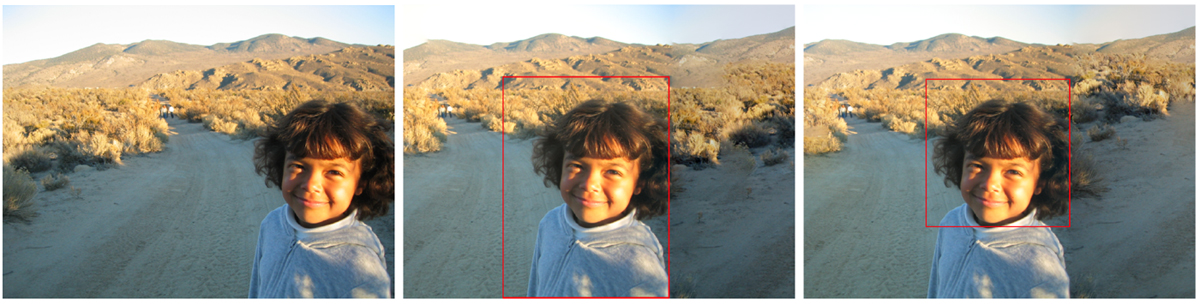

Subject recentering

|

|

| This example illustrates how the patch transform framework can be used to recenter a region / object of interest. (left) The original image. (middle) The inverse patch transform result. Notice that the overall structure and context is preserved. (right) Another inverse patch transform result. This figure shows that the proposed framework is insensitive to the size of the bounding box. |

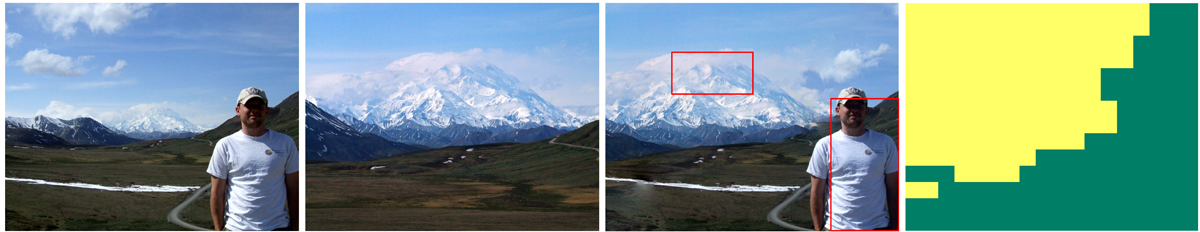

Relative position editing

|

|

| This example verifies that the proposed framework can still work well in the presence of complex background. Again, we want to recenter the woman such that the center of the building aligns with the woman. (left) The original image. (right) The inverse patch transform result with the user constraint. While the algorithm fixed the building, the algorithm reshuffled the patches in the garden to accommodate the changes in woman's position. |

Image retargetting

|

|

| In this example, the original image shown in (left) is resized such that the width and height of the output image is 80% of the original image. (right) The reconstructed image from the patch transform framework. |

Texture Control

|

|

| This example shows how the proposed framework can be used to manipulate the patch statistics of an image. The tree is specified to move to the right side of the image. (left) is the original image. (middle) is the inverse patch transform result with a constraint to use less sky patches. (right) is the inverse patch transform result with a constraint to use fewer cloud patches. |

Adding two images in the patch domain

|

|

| In this example, we collage two images shown in (left) and (mid-left). (mid-right) The inverse patch transform result. The user wants to copy the mountain from (mid-left) into the background of (left). The new, combined image looks visually pleasing (although there is some color bleeding of the foreground snow.) (right) This figure shows from which image the algorithm took the patches. The green region denotes patches from (left) and the yellow region denotes patches from (mid-left). |

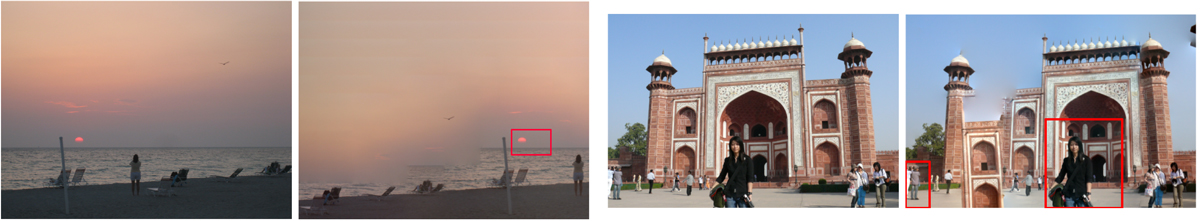

| Failure cases |

|

| These examples illustrate typical failure cases. In the left example, we move the sun to the right. Although the objects on the beach reorganize themselves to accommodate the user constraint, the sky patches propagate into the sea losing the overall structure of the image. In the right example, we move the person and a part of the temple to the right, and fixed the tourists at the left bottom corner. It's very hard to find a visually pleasing image with these constraints because some structures cannot be reorganized to generate natural looking structures. |

![]()

This research is partially funded by ONR-MURI grant N00014-06-1-0734 and by Shell Research. The first author is partially supported by Samsung Scholarship Foundation. Authors would like to thank Myung Jin Choi, Ce Liu, Anat Levin, and Hyun Sung Chang for fruitful discussions. Authors would also like to thank Flickr for images.

![]()

Last update: July 20 2008 Thanks to Ce Liu for the page template.