Abstract

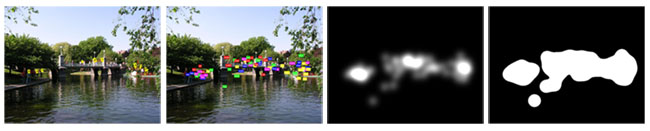

For many applications in graphics, design, and human computer interaction, it is essential to understand where humans look in a scene. Where eye tracking devices are not a viable option, models of saliency can be used to predict fixation locations. Most saliency approaches are based on bottom-up computation that does not consider top-down image semantics and often does not match actual eye movements. To address this problem, we collected eye tracking data of 15 viewers on 1003 images and use this database as training and testing examples to learn a model of saliency based on low, middle and high-level image features. This large database of eye tracking data is publicly available with this paper.

Files

| Paper | PDF (7 MB) |

| Posters | ICCV Poster (18 MB), Teaser poster (9 MB) |

| Slides | PDF (24.2 MB),

Keynote (55.4 MB)

|

| Eye tracking database |

Readme, Code,

Image Stimuli, Eye tracking Data,

Human Fixation Maps

|

| Our saliency model |

Readme,

Code (4.1MB), Saliency Maps of our images from our model |

| Train and test a new model | Readme, Code (6.9MB) |

Related Videos

BibTex

@InProceedings{Judd_2009,

author = {Tilke Judd and Krista Ehinger and Fr{\'e}do Durand and Antonio Torralba},

title = {Learning to Predict Where Humans Look},

booktitle = {IEEE International Conference on Computer Vision (ICCV)},

year = {2009}

}

Acknowledgments

This work was supported by NSF CAREER awards 0447561 and IIS 0747120. Frédo Durand acknowledges a Microsoft Research New Faculty Fellowship and a Sloan Fellowship, in addition to Royal Dutch Shell, the Quanta T-Party, and the MIT-Singapore GAMBIT lab. Tilke Judd was supported by a Xerox graduate fellowship. We thank Aude Oliva for the use of her eye tracker and Barbara Hidalgo-Sotelo for help with eye tracking. We thank Nicolas Pinto and Yann LeTallec for insightful discussions, and the ICCV reviewers for their feedback on this work.