
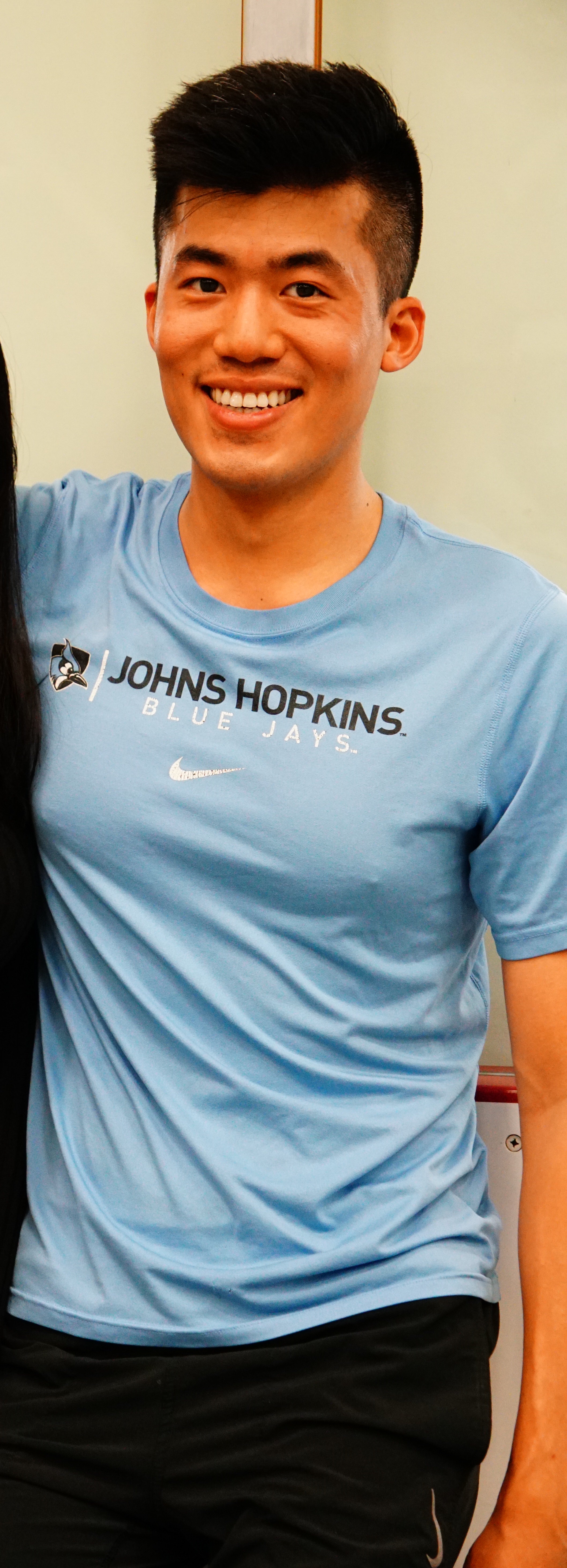

Cheng-I Jeff Lai

clai24 at mit dot edu


Hello! I am a 4th-year Ph.D. student in computer science at the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL), advised by James Glass in the Spoken Language Systems Group.

My long-term professional goal is to democratize advanced speech technologies to under-explored domains, languages, and users.

My current research interests are self-supervised learning, audio-visual learning, and their applications to speech.

Specifically, I think a lot about:

- Grounded Language Acquisition

Discovering grammar, words, and phones from speech with distant supervision.

- Speech Generation via Self-Supervision

Leveraging self-supervised representations for speech translation or modeling.

In the past, I worked on the following topics:

- Sparse Speech Processing

Reduced the architectural complexity of large speech models via pruning.

- Self-Supervised Learning in Speech

Designed self-supervised representations for different speech/audio tasks.

- Speaker-Adaptive Speech Synthesis

Improved speaker similarity in zero-shot/few-shot speech synthesis.

Previously, I completed my B.S. in electrical engineering at Johns Hopkins University, advised by Najim Dehak and Jesús Villalba.

I also have long-term collaborations with Yang Zhang and David Cox at the MIT-IBM Watson AI Lab on low-resource language learning, and with Erica Cooper and Junichi Yamagishi at the National Institute of Informatics on speech synthesis.

Outside of school, I have spent several summers interning at research labs in academia and industry: the University of Edinburgh, the National Institute of Informatics, Amazon AWS, the MIT-IBM Watson AI Lab, and Meta Fundamental AI Research (FAIR).

If you have questions or are interested in my work, please reach me at my email (clai24 at mit dot edu). I am always open to collaborations!


Recent News

- (Summer 2022) I spent the summer at Meta AI (FAIR accel), working on multi-modal word discovery for textless direct speech-to-speech translation (poster).

- (Summer 2022) ContentVec (speaker disentanglement of HuBERT representations) was accepted at ICML 2022, and S3-Router (an improved version of PARP) was accepted at NeurIPS 2022.

- (May 2022) An MIT News article describes our recent work on cross-modal discrete representation learning.

- (April 2022) I gave a guest lecture for MIT's speech processing class (6.345) on the SUPERB benchmark and sparsity in speech.

- (March 2022) Our recent work SSAST was presented at AAAI 2022, TTS-Pruning was accepted at ICASSP 2022, and Cross-Modal VQ and SUPERB-SG were accepted at ACL 2022.

- (Fall 2021) PARP will appear at NeurIPS as a Spotlight presentation! Code and pretrained models are coming soon. A short presentation is available here, and a short article in MIT News is available here. Give it a try with our colab demo.

- (November 2020) Motivated by Nelson Liu's blog post, I also put my PhD Statement of Purpose online for those interested!


Featured Publications (* indicates equal contribution)

Audio-Visual Neural Syntax Acquisition

Cheng-I Jeff Lai*, Freda Shi*, Puyuan Peng*, Yoon Kim, Kevin Gimpel, Shiyu Chang, Yung-Sung Chuang, Saurabhchand Bhati, David Cox, David Harwath, Yang Zhang, Karen Livescu, James Glass

ASRU, 2023

PARP: Prune, Adjust and Re-Prune for Self-Supervised Speech Recognition

Cheng-I Jeff Lai, Yang Zhang*, Alexander H. Liu*, Shiyu Chang*, Yi-Lun Liao, Yung-Sung Chuang, Kaizhi Qian, Sameer Khurana, David Cox, James Glass

NeurIPS, 2021 (Spotlight, top 3%)

arXiv / project page / colab demo / OpenReview / 4 min presentation / 15 min presentation / poster / MIT News / IEEE Spectrum / ACM Tech News / 知乎


More Publications (* indicates equal contribution)

SUPERB-SG: Enhanced Speech processing Universal PERformance Benchmark for Semantic and Generative Capabilities

Hsiang-Sheng Tsai, Heng-Jui Chang, Wen-Chin Huang, Zili Huang, Kushal Lakhotia, Shu-wen Yang, Shuyan Dong, Andy T. Liu, Cheng-I Jeff Lai, Jiatong Shi, Xuankai Chang, Phil Hall, Hsuan-Jui Chen, Shang-Wen Li, Shinji Watanabe, Abdelrahman Mohamed, Hung-Yi Lee

ACL, 2022

arXiv / code

On the Interplay between Sparsity, Naturalness, Intelligibility, and Prosody in Speech Synthesis

Cheng-I Jeff Lai, Erica Cooper*, Yang Zhang*, Shiyu Chang, Kaizhi Qian, Yi-Lun Liao, Yung-Sung Chuang, Alexander H. Liu, Junichi Yamagishi, David Cox, James Glass

ICASSP, 2022

arXiv / project page / listening samples / 15 min presentation

SUPERB: Speech processing Universal PERformance Benchmark

Shu-wen Yang, Po-Han Chi*, Yung-Sung Chuang*, Cheng-I Jeff Lai*, Kushal Lakhotia*, Yist Y. Lin*, Andy T. Liu*, Jiatong Shi*, Xuankai Chang, Guan-Ting Lin, Tzu-Hsien Huang, Wei-Cheng Tseng, Ko-tik Lee, Da-Rong Liu, Zili Huang, Shuyan Dong, Shang-Wen Li, Shinji Watanabe, Abdelrahman Mohamed, Hung-Yi Lee

Interspeech, 2021

arXiv / code / website


Talks

- The SUPERB benchmark [Slides]

MIT 6.345 guest lecture (April 2022).

- Making Machines Understand Uncommon Spoken Languages [Event Page, Slides, Recordings]

MIT ROCSA 5x5 talk (April 2022).

MIT Horizon (January 2022).

- Finding Sparse Subnetworks for Self-Supervised Speech Recognition and Speech Synthesis [Slides]

MIT 6.345 guest lecture (April 2022).

Georgia Institute of Technology (December 2021).

A*STAR, Singapore (November 2021).

ASAPP, New York (November 2021).

National Institute of Informatics, Japan (November 2021).

MIT Embodied Intelligence student seminar (October 2021).

MIT-IBM 5k language learning seminar (September 2021).

- Semi-Supervised Trainings for Semantics Understanding from Speech [Slides, Recordings]

National Institute of Informatics, Japan (February 2021).

Biometrics Research Laboratory, NEC Corporation, Japan (January 2021).

JHU speech reading group (November 2020).

MIT Spoken Language Systems group (October 2020).

Amazon Web Services, Lex (July & August 2020).

- Deep Learning Frameworks for Spoofing Detection and Speaker Representation [Slides]

Biometrics Research Laboratory, NEC Corporation, Japan (July 2019).

National Institute of Informatics, Japan (July 2019).

- Deep Learning in Artificial Intelligence

College of Science and Technology, Nanhua University, Taiwan (June 2019).

College of Chinese Medicine, China Medical University, Taiwan (May 2019).

- Attentive Filtering Network for Audio Replay Attacks Detection [Slides]

Gulf Coast Undergraduate Research Symposium, Rice University (October 2018).

Center for Language and Speech Processing, Johns Hopkins University (October 2018).

Centre for Speech Technology Research, University of Edinburgh (August 2018).


Services

- Program Committee: AAAI 2022 SAS, ACL 2021 MetaNLP, NeurIPS 2020 SAS

- Reviewer: NAACL, ACL, EMNLP, EACL, ICML, NeurIPS, SLT, IEEE/ACM TASLP, Computer Speech & Language


Selected Awards

- Merrill Lynch Fellowship, Department of EECS, MIT (2019-2020)

- Departmental and General Honors, JHU (2019)

- Vredenburg Scholarship, JHU (2018)
