Next: Prior Load Balancing Techniques Up: Distributed, Secure Load Balancing Previous: Model

k-Choices is a greedy, cost-based load balancing algorithm for structured overlays. It matches nodes' workload goals with guesses about how their choices of identifiers will affect both their own workloads and those of their neighbors. At each VS insertion, k-Choices minimizes the discrepancy between work and capacity by sampling from a small set of potential IDs. By limiting the number of potential IDs, k-Choices is practical for networks containing malicious participants.

k-Choices functions primarily at node join time as shown in k-Choices Node Join in Figure 3. When a node

joins, it chooses a total target workload and an upper bound on the

number of VSs to create (lines 1-2). Then, it invokes k-Choices

VS Join and reduces its remaining capacity by the anticipated work

of that VS (line 3). This continues until it has created ![]() VSs or reached its target workload. Making several VSs together at

join time amortizes the cost of sampling.

VSs or reached its target workload. Making several VSs together at

join time amortizes the cost of sampling.

The join for each VS is composed of four steps, as shown in Figure

2 and in k-Choices VS Join in Figure

3. A small menu of potential IDs is chosen,

limited by a well-known constant ![]() (lines 1-2). These

IDs are verifiable because they are all based on the certified

(lines 1-2). These

IDs are verifiable because they are all based on the certified ![]() and

because

and

because ![]() is bounded. To verify that a node is using a valid ID,

is bounded. To verify that a node is using a valid ID, ![]() ,

another node simply has to check that there exists some

,

another node simply has to check that there exists some ![]() such that

such that ![]() . Next, each potential ID's successor is

probed to discover what is likely to happen were this VS to be placed

at this location (line 4). It guesses that the current work for this

location will be split based on the percentage of the address space

the joining VS will take on (lines 6-7). The node uses this to compute

the change from the current situation (line 8). Each term in the cost

function is the difference between target work and real work. The

first two terms are the sum of the differences if this VS is created

and the last is the current situation. We normalize each term based

on the node's capacity. Thus, the lower the cost, the smaller the

difference between target and actual work. The last step of the join

process is to join at the ID with lowest cost. Because nodes

set their targets lower than their capacities, if all nodes minimized

the mismatch

. Next, each potential ID's successor is

probed to discover what is likely to happen were this VS to be placed

at this location (line 4). It guesses that the current work for this

location will be split based on the percentage of the address space

the joining VS will take on (lines 6-7). The node uses this to compute

the change from the current situation (line 8). Each term in the cost

function is the difference between target work and real work. The

first two terms are the sum of the differences if this VS is created

and the last is the current situation. We normalize each term based

on the node's capacity. Thus, the lower the cost, the smaller the

difference between target and actual work. The last step of the join

process is to join at the ID with lowest cost. Because nodes

set their targets lower than their capacities, if all nodes minimized

the mismatch

![]() , then loss would be zero.

, then loss would be zero.

If nodes do not attempt to perform any additional load balancing after joining, we say they are passive. Being passive has its advantages: no additional churn is induced through VS relocation. However, over time one of the other potential IDs for this VS can become significantly better in terms of improving target/workload mismatch.

If we permit reselection of IDs, we say that k-Choices is

active. To minimize network probing, nodes reselect only a

single VS ID at a time. They pick the ![]() VSset with the

maximum mismatch. They check if any new ID for

VSset with the

maximum mismatch. They check if any new ID for ![]() improves the

aggregate mismatches of themselves and their neighbors by

improves the

aggregate mismatches of themselves and their neighbors by

![]() , a parameter that dampens improvements of minimal

benefit. If it does, the movement is performed.

, a parameter that dampens improvements of minimal

benefit. If it does, the movement is performed. ![]() is

application-dependent: when a system is used for routing, moving

will be relatively painless, as VSs can gracefully notify incoming

pointers of their departure; if objects are stored and need to be

sent over the network, the cost might be significantly greater.

Nodes only examine the possibility of relocating if they

are overloaded or underloaded. If nodes have relocated more than

VSset.size times and are still overloaded or underloaded, they

create or destroy VSs within the range

is

application-dependent: when a system is used for routing, moving

will be relatively painless, as VSs can gracefully notify incoming

pointers of their departure; if objects are stored and need to be

sent over the network, the cost might be significantly greater.

Nodes only examine the possibility of relocating if they

are overloaded or underloaded. If nodes have relocated more than

VSset.size times and are still overloaded or underloaded, they

create or destroy VSs within the range ![]() .

In practice we found that nodes did not create more than a handful

of additional VSs.

.

In practice we found that nodes did not create more than a handful

of additional VSs.

k-Choices possesses several attractive features and makes certain assumptions. When run in passive mode, it adds no reactive churn. In fact, without an active component, it requires natural churn. By making a good choice before routing is set up, objects are stored, or computations are started, k-Choices lessens or eliminates this reactive load balancing penalty. We assume that nodes do not lie or that Distributed Algorithmic Mechanism Design techniques could be used to encourage the truthfulness of the information they provide about load [19,41], another reason why verifiable IDs are important. We also assume that the system is not primarily being used for range queries. Limited ID assignment provably cannot balance load in this case [26].

Note that VSs could keep more accurate track of where work is landing

in their namespace to make ![]() and

and ![]() more accurate.

Instead, we decided to use a simple exponentially-weighted

moving average to reduce the amount of state sent during probing.

more accurate.

Instead, we decided to use a simple exponentially-weighted

moving average to reduce the amount of state sent during probing.

Optimal ID Choice. k-Choices exhibits diminishing returns as

![]() approaches the size of the namespace

approaches the size of the namespace ![]() . When

. When ![]() , each joining VS would sample every possible ID (assuming a

perfect hash function). In fact, it is feasible to find the ID (or

IDs) with the lowest cost by examining only a few variables for each

existing VS. While even this sampling would be prohibitively

expensive in an implementation, performing it ``offline'' within a

simulator is not.

, each joining VS would sample every possible ID (assuming a

perfect hash function). In fact, it is feasible to find the ID (or

IDs) with the lowest cost by examining only a few variables for each

existing VS. While even this sampling would be prohibitively

expensive in an implementation, performing it ``offline'' within a

simulator is not.

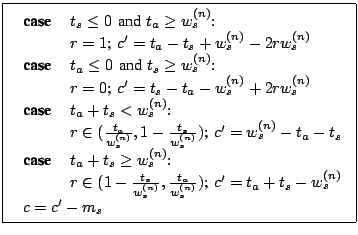

For each potential successor ![]() , we know its

target

, we know its

target ![]() and its actual work

and its actual work ![]() . The goal is to find

the percent of the address space

. The goal is to find

the percent of the address space ![]() between

between ![]() and

and ![]() that

gives the minimum cost

that

gives the minimum cost ![]() and to find what the cost is for this

and to find what the cost is for this ![]() .

The optimal ID choice will be the

.

The optimal ID choice will be the

![]() with the globally lowest cost. We know that

with the globally lowest cost. We know that

![]() and

that

and

that

![]() is fixed regardless of the

is fixed regardless of the ![]() chosen. If we do not normalize for each node's capacity, there are

four mutually exclusive cases for

chosen. If we do not normalize for each node's capacity, there are

four mutually exclusive cases for ![]() and

and ![]() :

:

In cases 1 and 2, we do not eliminate ![]() because IDs

cannot be identical. The actual choice will need to be a small

distance away.

because IDs

cannot be identical. The actual choice will need to be a small

distance away.