|

|

Projects

|

Close Proximity Robotic Assistant in Automotive Final Assembly

The goal of this project is to improve the efficiency of close-proximity human-robot collaboration in automotive assembly plants. We have developed an online task segmentation algorithm that recognizes partially completed activities as they progress and predicts future activities. The algorithm also detects the start and end times of each activity accurately, since precise timing is essential for generating an accurate plan for the robot. A planner then uses this information to generate a time-variant motion plan for the robot to assist the person. We recently ran a live demonstration at a real automotive assembly plant, Honda North America's Marysville Auto Plant in Ohio.

Learn more

|

|

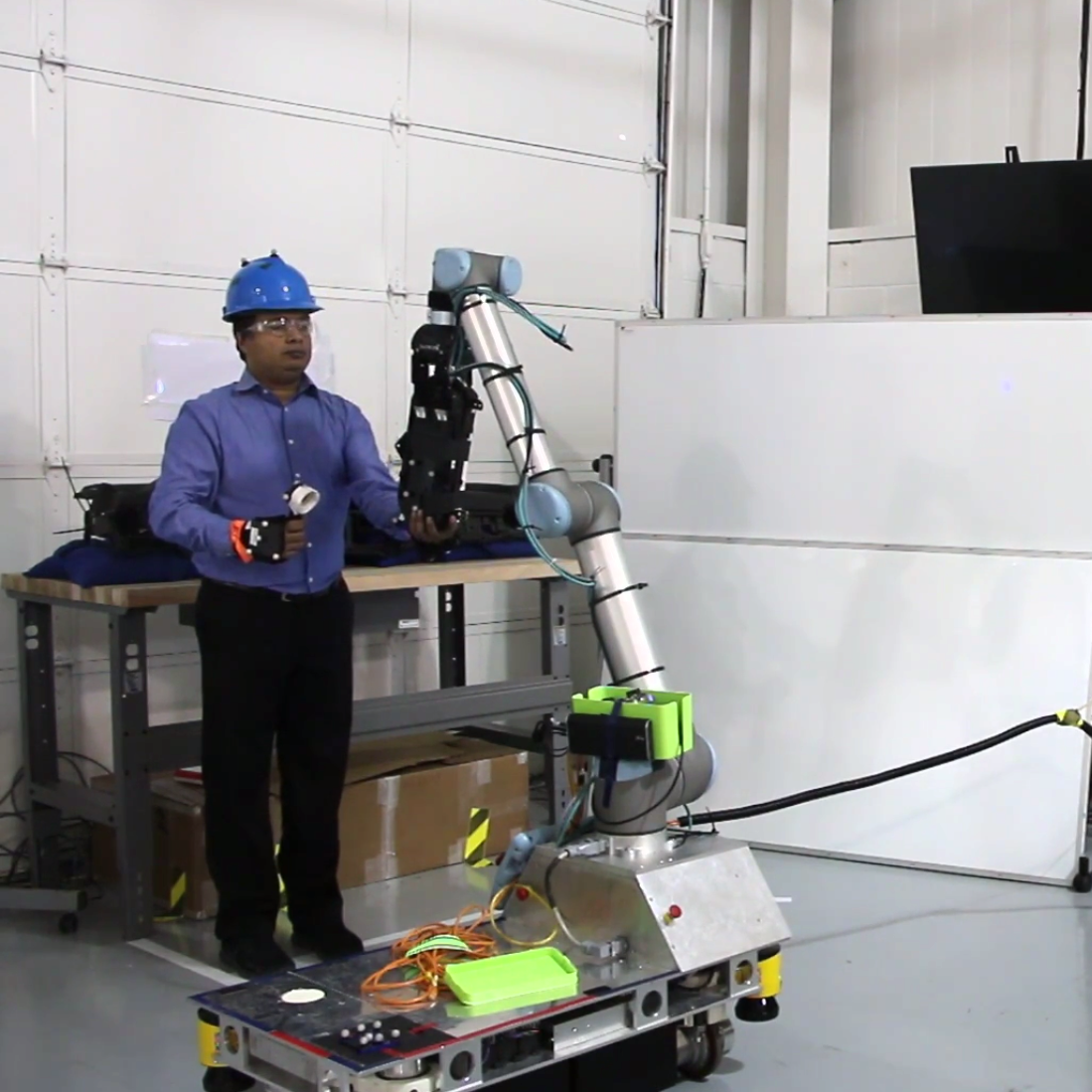

An Intelligent Material Delivery System to Improve Human-Robot Workflow and Productivity in Assembly Manufacturing

A significant portion of assembly manufacturing consists of the physical movement of raw materials and finished products. Often, the worker performing an assembly task must also carry raw materials to and from the assembly table where they assemble the parts. In these circumstances, the worker performs non-value-added tasks just to keep the production line moving. To reduce human involvement in these non-value-added tasks, we are currently developing intelligent planning and scheduling techniques for Intelligent Material Delivery System (IMDS) robots by modeling human workflow.

|

|

Non-verbal Behavior Understanding and Synthesis

We developed methods for robots to detect human head and hand gestures to improve the fluency of human-robot conversations. We also incorporated a neural-network-based multimodal data fusion model to help robots make appropriate decisions during a conversation.

|

|

Coordination Dynamics in Multi-human Multi-robot Teams

As robots enter human environments, they will be expected to collaborate and coordinate their actions with people. How robots engage in this process can influence the dynamics of the team, particularly in multi-human, multi-robot situations. We investigated how the presence of robots affects group coordination when both the anticipation algorithms they employ and their number (single robot or multiple robots) vary.

Learn more

|

|

Adaptation Mechanism for Robots in Human-Robot Teams

Inspired by the psychology and neuroscience literature, we are developing methods for robots to better understand changes in human group dynamics, so that they can leverage this knowledge to adapt to and coordinate with human groups.

Learn more

|

|

Anticipating Human Activities by Leveraging Group Dynamics in a Human-Robot Team

To be effective teammates, robots need to understand high-level human behavior so that they can recognize, anticipate, and adapt to human motion. We have designed a new approach that enables robots to perceive human group motion in real time, anticipate future actions, and synthesize their own motion accordingly. We explore this within the context of joint action, where humans and robots move together synchronously.

Learn more

|

|

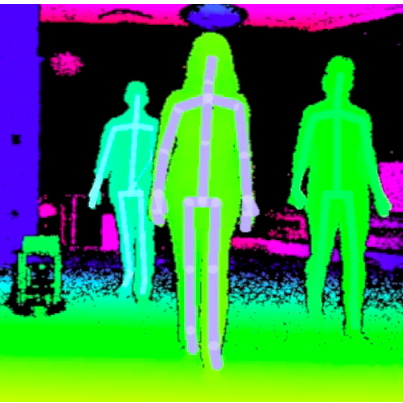

Coordinated Action in Human-Robot Teams

To be effective team members, it is important for robots to understand the high-level behaviors of collocated humans. This is a challenging perceptual task when both the robots and people are in motion. In this project, we investigated an event-based model for multiple robots to automatically measure synchronous joint action of a group while both the robots and co-present humans are moving. We validated our model through an experiment where two people marched both synchronously and asynchronously, while being followed by two mobile robots.

Learn more

|

|

Measuring Entrainment in a Group

Group interaction is an important aspect of human social behavior. During some group events, the activities performed by each group member continually influence the activities of others. This process of influence can lead to synchronized group activity, or the entrainment of the group. In this project, we investigated synchronous joint action in a group, and presented a novel method to automatically detect synchronous joint action which takes multiple types of discrete, task-level events into consideration.

Learn more
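As a rough illustration only, and not the method developed in this project, the sketch below scores how synchronous a group is from discrete event timestamps: two events count as synchronous when they fall within a tolerance window of each other. The function names, the window `tau`, and the pairwise averaging are all assumptions made for the example.

```python
def count_matches(events_a, events_b, tau):
    """Count events in events_a that have an event in events_b within tau seconds."""
    return sum(
        1 for ta in events_a if any(abs(ta - tb) <= tau for tb in events_b)
    )


def pair_synchrony(events_a, events_b, tau):
    """Fraction of events (from both members) that have a partner in the other series."""
    if not events_a or not events_b:
        return 0.0
    matched = count_matches(events_a, events_b, tau) + count_matches(
        events_b, events_a, tau
    )
    return matched / (len(events_a) + len(events_b))


def group_synchrony(event_times, tau):
    """Average pairwise synchrony over all pairs of group members.

    event_times maps a (hypothetical) member name to a list of event timestamps.
    """
    names = sorted(event_times)
    pairs = [(a, b) for i, a in enumerate(names) for b in names[i + 1:]]
    if not pairs:
        return 0.0
    total = sum(pair_synchrony(event_times[a], event_times[b], tau) for a, b in pairs)
    return total / len(pairs)
```

For example, `group_synchrony({"p1": steps_1, "p2": steps_2}, tau=0.1)` would return a value between 0 (no events coincide) and 1 (every event has a partner within 100 ms). Handling several event types could be approximated by computing this score per type and combining the results, though that is again an assumption rather than the published approach.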

|

|

A Time-Synchronous Multi-Modal Data Capture System

In this project, we proposed a timing model to handle real-time data capture and processing from multiple modalities. Based on this model, we developed a client-server system that ensures time-synchronous capture of all actions performed by the members of a group. We validated this system through an HRI study, where a pair of human participants interacted with each other and a robot during a dance-off, and we measured their expressiveness from multiple Kinect sensors in real time.

Learn more
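The timing model itself is not described here, so the following is only a generic sketch of one common way to place samples from several capture clients on a shared server timeline: a Cristian-style clock-offset estimate that assumes symmetric network delay. All names (`estimate_offset`, `align`, the client labels) are hypothetical.

```python
def estimate_offset(t_send, t_server, t_recv):
    """Estimate (server clock - client clock) from one request/response exchange.

    t_send and t_recv are read from the client clock; t_server is the server's
    timestamp in the reply. Assumes the network delay is symmetric.
    """
    round_trip = t_recv - t_send
    server_time_at_recv = t_server + round_trip / 2.0
    return server_time_at_recv - t_recv


def align(streams, offsets):
    """Merge per-client samples into one list sorted by server time.

    streams maps client name -> list of (client_timestamp, value);
    offsets maps client name -> offset returned by estimate_offset.
    """
    merged = []
    for client, samples in streams.items():
        for client_ts, value in samples:
            merged.append((client_ts + offsets[client], client, value))
    merged.sort(key=lambda item: item[0])
    return merged
```

In practice each client would repeat the exchange several times and keep the estimate with the shortest round trip, since that sample is least affected by delay asymmetry.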

|

|

A Real-Time Eye Tracking and Gaze Estimation System

Eye tracking and gaze estimation techniques have been extensively investigated by researchers in the computer vision and psychology communities over the last few decades. They nevertheless remain challenging because eyes differ between individuals and vary in shape, scale, location, and lighting conditions. In my MS thesis work, we designed and implemented a low-cost eye-tracking system using only an off-the-shelf webcam. This eye-tracking system is robust to small head rotations, changes in lighting, and all but the most dramatic head movements. We also designed and implemented a gaze-estimation method that uses a simple yet powerful machine-learning-based calibration technique to estimate gaze positions.

Learn more
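The calibration technique used in the thesis is not specified on this page. Purely as an illustration of the general idea, the sketch below fits an affine least-squares map from a tracked pupil position to a screen position, using samples collected while the user looks at known targets. The affine model and all function names are assumptions for the example.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_affine(features, targets):
    """Least-squares fit of target ~ w0*fx + w1*fy + w2 via the normal equations."""
    rows = [(fx, fy, 1.0) for fx, fy in features]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return solve_linear(ata, atb)


def calibrate(pupil_points, screen_points):
    """Fit one affine model per screen axis from paired calibration samples."""
    wx = fit_affine(pupil_points, [sx for sx, _ in screen_points])
    wy = fit_affine(pupil_points, [sy for _, sy in screen_points])
    return wx, wy


def estimate_gaze(model, pupil):
    """Map a pupil position to an estimated screen position."""
    wx, wy = model
    px, py = pupil
    return (wx[0] * px + wx[1] * py + wx[2], wy[0] * px + wy[1] * py + wy[2])
```

A typical session would show a handful of on-screen targets (at least three that are not collinear), record the pupil position at each, call `calibrate`, and then call `estimate_gaze` on every new frame. A real system would likely need a richer model to cope with head movement.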

|

|