Student Research Opportunities

Contact: Xuhao Chen

We are looking for grad & undergrad students to join our lab. Feel free to reach out if you are interested in machine learning systems, computer architecture, and/or high performance computing. Our projects have the potential to become MEng thesis work. We have 6-A program opportunities available. If you are interested, please send your CV to cxh@mit.edu and fill in the recruiting form.

Research Summary: The AI revolution is transforming various industries and having a significant impact on society. However, AI is computationally expensive and hard to scale, which poses a great challenge in computer system design. Our lab is broadly interested in computer system architectures and high performance computing, particularly for scaling AI and ML computation.

Top-tier systems & HPC conferences: [OSDI, SOSP], [ASPLOS], [ISCA], [VLDB], [SIGMOD], [SC], [PPoPP].

Interesting MLSys topics: Vector Similarity Search, Recommendation System, Retrieval-Augmented Generation, Graph Machine Learning, Graph Algorithms, GPU Acceleration, LLM Serving/Inference

Below are some ongoing research projects.

Scalable Vector Database [Elx Link]

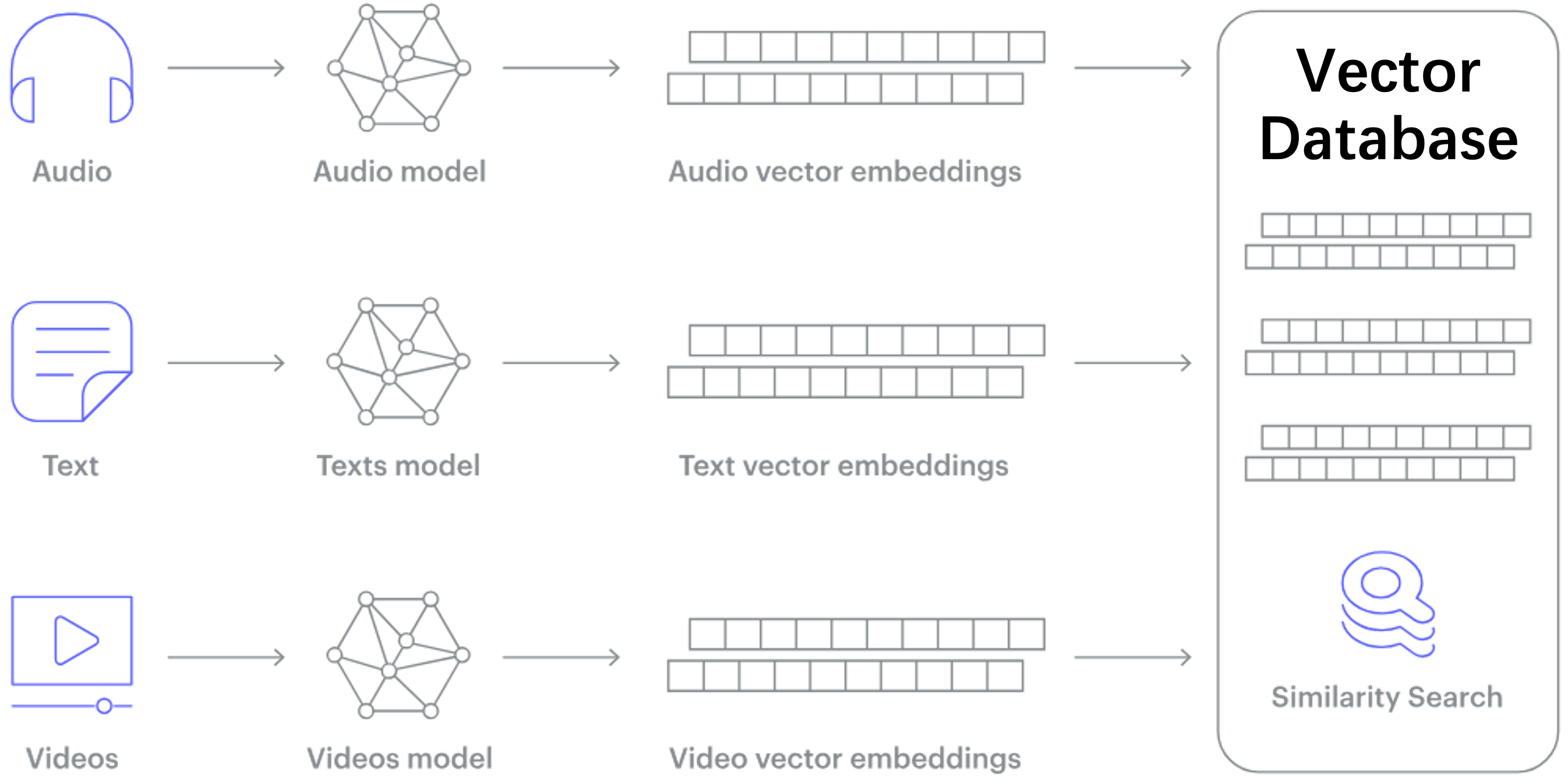

Recent advances in deep learning models map almost all types of data (e.g., images, videos, documents) into high-dimensional vectors. Queries on high-dimensional vectors enable complex semantic analysis that was previously difficult if not impossible, and they have become the cornerstone of many important online services such as search, e-commerce, and recommendation systems.

In this project we aim to build a massive-scale vector database on multi-CPU and multi-GPU platforms. In a vector database, the major operation is finding the k vectors closest to a given query vector, known as k-Nearest-Neighbor (kNN) search. Because exact kNN search is too expensive at massive data scale, Approximate Nearest-Neighbor (ANN) search is used in practice instead. One of the most promising ANN approaches is the graph-based approach, which first constructs a proximity graph on the dataset by connecting pairs of vectors that are close to each other, and then, for each query, traverses the proximity graph to find the vectors closest to the query vector. In this project we will build a vector database that uses a graph-based ANN search algorithm and supports billion-scale datasets.
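The two steps described above (build a proximity graph, then traverse it per query) can be illustrated with a minimal, single-threaded sketch. This is not the lab's system: the function names, the brute-force kNN graph construction, and the `beam_width` parameter are assumptions chosen only to keep the example small.

```python
import heapq
import random


def l2_sq(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_knn_graph(vectors, degree):
    """Brute-force proximity graph: link each vector to its `degree` nearest neighbors."""
    graph = []
    for i, v in enumerate(vectors):
        others = [j for j in range(len(vectors)) if j != i]
        others.sort(key=lambda j: l2_sq(v, vectors[j]))
        graph.append(others[:degree])
    return graph


def beam_search(vectors, graph, query, k, entry=0, beam_width=16):
    """Best-first traversal of the proximity graph; returns up to k approximate neighbors."""
    start = (l2_sq(query, vectors[entry]), entry)
    visited = {entry}
    frontier = [start]            # min-heap of candidates still to expand
    best = [(-start[0], entry)]   # max-heap (negated distances) of current results
    while frontier:
        dist, node = heapq.heappop(frontier)
        # Stop once the closest unexpanded candidate cannot improve a full result set.
        if len(best) >= beam_width and dist > -best[0][0]:
            break
        for nbr in graph[node]:
            if nbr in visited:
                continue
            visited.add(nbr)
            d = l2_sq(query, vectors[nbr])
            if len(best) < beam_width or d < -best[0][0]:
                heapq.heappush(frontier, (d, nbr))
                heapq.heappush(best, (-d, nbr))
                if len(best) > beam_width:
                    heapq.heappop(best)   # drop the current worst result
    ranked = sorted((-neg_d, idx) for neg_d, idx in best)
    return [idx for _, idx in ranked][:k]


random.seed(0)
data = [[random.random() for _ in range(8)] for _ in range(200)]
graph = build_knn_graph(data, degree=8)
print(beam_search(data, graph, query=data[0], k=5))
```

The search only computes distances for vectors it reaches through graph edges, which is why it scales better than a full scan; the price is that results are approximate, and a larger `beam_width` trades speed for recall.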

Qualifications:

- Strong programming skills in C/C++/Python

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Experience with performance engineering is a plus, e.g., MIT 6.1060 (previously 6.172)

- GPU/CUDA programming is a plus

References

- A Real-Time Adaptive Multi-Stream GPU System for Online Approximate Nearest Neighborhood Search, CIKM 2024

- ParlayANN, PPoPP 2024

- CAGRA, ICDE 2024

- iQAN, PPoPP 2023

- Billion-scale similarity search with GPUs

- HNSW

- DiskANN [Video] [Slides] [Slides2]

- intel/ScalableVectorSearch

- IntelLabs/VectorSearchDatasets

Zero-Knowledge Proof [Elx Link]

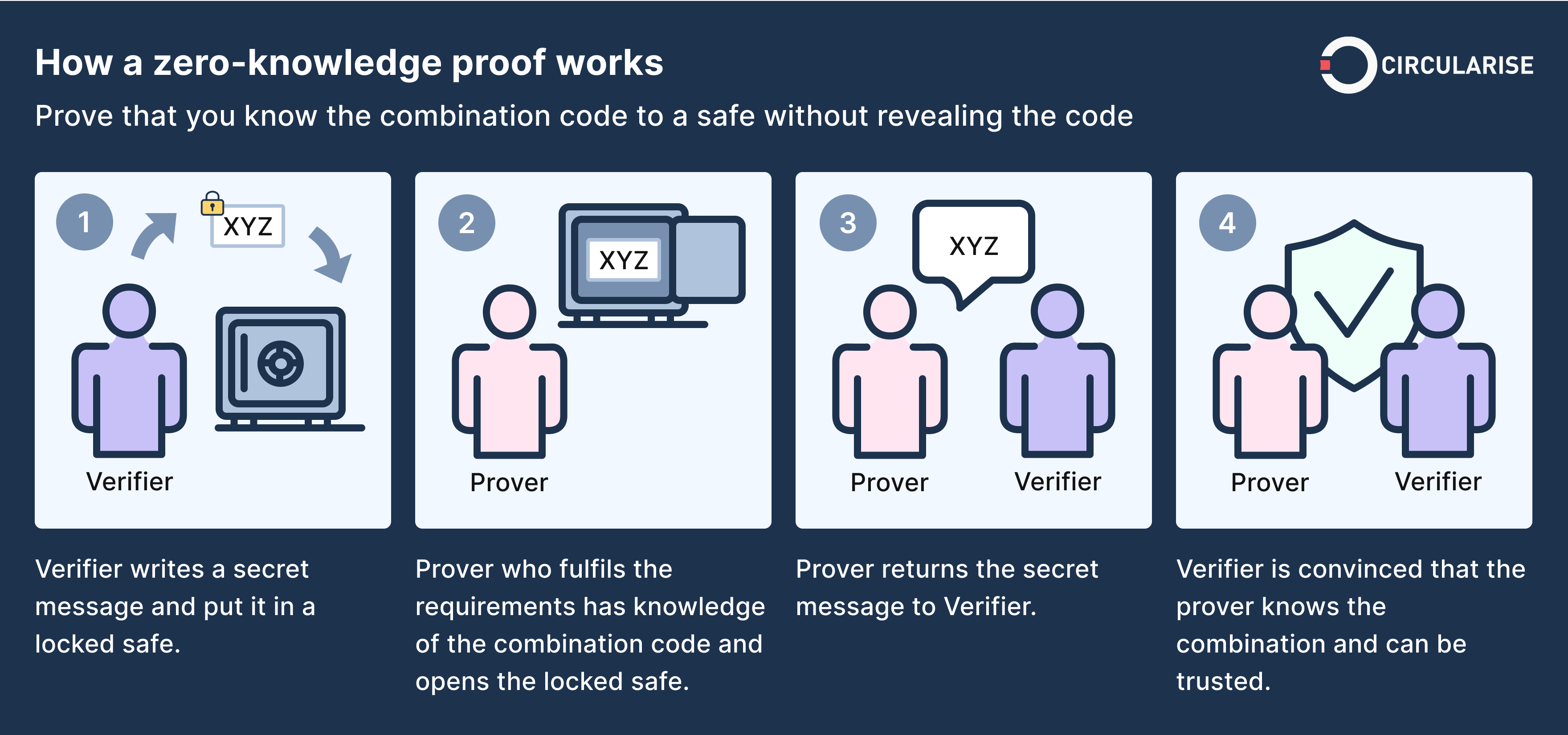

A zero-knowledge proof (ZKP) is a cryptographic method of proving the validity of a statement without revealing anything other than the validity of the statement itself. This “zero-knowledge” property is attractive for many privacy-preserving applications, such as blockchain and cryptocurrency systems. Despite its great potential, ZKP is notoriously compute-intensive, which hampers its real-world adoption. Recent advances in cryptography, known as zk-SNARKs, have brought ZKP closer to practical use. Although zk-SNARKs enable fast verification of a proof, proof generation is still expensive and slow.

In this project, we will explore ZKP acceleration through algorithmic innovations, software performance engineering, and parallel hardware such as GPUs, FPGAs, or even ASICs. We aim to investigate and implement efficient algorithms for accelerating elliptic curve computation. We will also explore acceleration opportunities for the major operations, e.g., finite field arithmetic, multi-scalar multiplication (MSM), and the number-theoretic transform (NTT).
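As a small illustration of one of the operations named above, the following sketch implements a textbook recursive radix-2 NTT over a prime field and uses it to multiply two polynomials. The modulus 998244353 and generator 3 are a commonly used NTT-friendly pair chosen here as an assumption for readability; real ZKP systems use much larger fields tied to their elliptic curves, and production code is iterative and heavily parallelized.

```python
P = 998244353   # NTT-friendly prime: P - 1 = 119 * 2**23
G = 3           # a primitive root modulo P


def ntt(values, invert=False):
    """Recursive radix-2 NTT over Z_P; len(values) must be a power of two.

    With invert=True the inverse roots are used, but the result is NOT yet
    scaled by 1/n; the caller applies that factor.
    """
    n = len(values)
    if n == 1:
        return list(values)
    root = pow(G, (P - 1) // n, P)     # primitive n-th root of unity
    if invert:
        root = pow(root, P - 2, P)     # modular inverse via Fermat's little theorem
    even = ntt(values[0::2], invert)
    odd = ntt(values[1::2], invert)
    out = [0] * n
    w = 1
    for i in range(n // 2):
        t = w * odd[i] % P
        out[i] = (even[i] + t) % P             # butterfly: upper half
        out[i + n // 2] = (even[i] - t) % P    # butterfly: lower half
        w = w * root % P
    return out


def poly_mul(a, b):
    """Multiply two polynomials (coefficient lists, lowest degree first) modulo P."""
    result_len = len(a) + len(b) - 1
    size = 1
    while size < result_len:
        size *= 2
    fa = ntt(a + [0] * (size - len(a)))
    fb = ntt(b + [0] * (size - len(b)))
    prod = ntt([x * y % P for x, y in zip(fa, fb)], invert=True)
    inv_size = pow(size, P - 2, P)
    return [c * inv_size % P for c in prod][:result_len]


# (1 + 2x + 3x^2) * (4 + 5x) = 4 + 13x + 22x^2 + 15x^3
print(poly_mul([1, 2, 3], [4, 5]))
```

The NTT replaces an O(n^2) coefficient-by-coefficient product with O(n log n) butterflies, and each butterfly level is independent across positions, which is what makes the operation a natural target for GPU and FPGA acceleration.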

Qualifications:

- Strong programming skills in C/C++

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Experience with performance engineering is a plus, e.g., MIT 6.1060 (previously 6.172)

- GPU/CUDA and/or Rust/WebAssembly/JavaScript programming is a plus

References

- Accelerating Multi-Scalar Multiplication for Efficient Zero Knowledge Proofs with Multi-GPU Systems, ASPLOS 2024

- GZKP, ASPLOS 2023

- MSMAC: Accelerating Multi-Scalar Multiplication for Zero-Knowledge Proof, DAC 2024

- PipeZK: Accelerating Zero-Knowledge Proof with a Pipelined Architecture, ISCA 2021

- Hardcaml MSM: A High-Performance Split CPU-FPGA Multi-Scalar Multiplication Engine, FPGA 2024

- Accelerating Zero-Knowledge Proofs Through Hardware-Algorithm Co-Design, MICRO 2024

Deep Recommendation System [Elx Link]

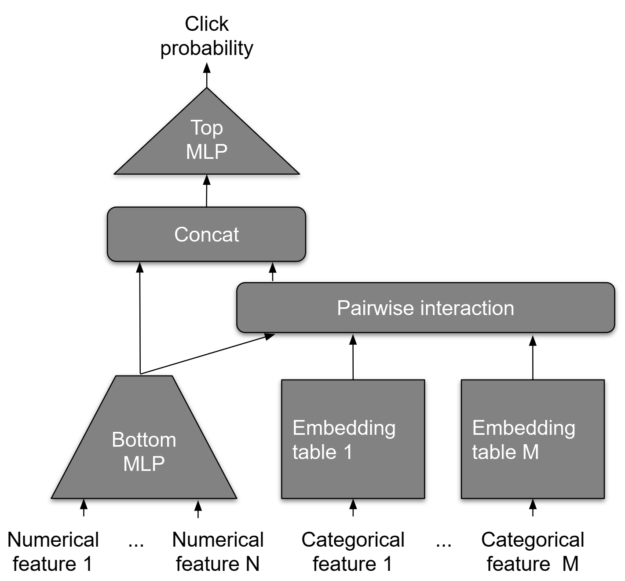

Deep Learning Recommendation Models (DLRMs) are widely used across industry to provide personalized recommendations to users and consumers. Specifically, they are the backbone of user engagement in industries such as e-commerce, entertainment, and social networks. DLRMs have two stages: (1) training, in which the model learns to minimize the difference between predicted and actual user interactions, and (2) inference, in which the model provides recommendations based on new data. Traditionally, GPUs have been the hardware of choice for DLRM training because of its high computational demand. In contrast, CPUs have been widely used for DLRM inference because tight latency requirements restrict the batch size. An existing bottleneck in inference is its high demand for computation and memory bandwidth, which contributes greatly to the load on data centers and computing clusters.

In this project, we will focus on exploring GPU optimizations for the embedding stage of the DLRM inference pipeline, which has traditionally used only CPUs. Initially, we would like to explore CPU-GPU coupled schemes, for instance using GPUs as extra cache space (e.g., to store more embeddings or to memoize sparse feature computations), and multi-GPU cluster computation to further accelerate inference for more complex models. The goal is to bring together existing inference frameworks, profile them, and implement new ones that deliver substantial speedups for DLRM inference on GPUs.
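The idea of using a fast memory tier as a cache for embeddings can be sketched in a few lines. The class below is a hypothetical illustration, not an existing framework API: a plain Python list stands in for the embedding table in host memory, `capacity` stands in for the GPU memory budget, and LRU is just one possible eviction policy.

```python
from collections import OrderedDict


class EmbeddingCache:
    """LRU cache of embedding rows placed in front of a slower backing table."""

    def __init__(self, backing_table, capacity):
        self.backing_table = backing_table   # stand-in for embeddings in host memory
        self.capacity = capacity             # stand-in for the GPU memory budget, in rows
        self.rows = OrderedDict()
        self.hits = 0
        self.misses = 0

    def lookup(self, row_id):
        """Return one embedding row, fetching it from the backing table on a miss."""
        if row_id in self.rows:
            self.hits += 1
            self.rows.move_to_end(row_id)    # mark as most recently used
            return self.rows[row_id]
        self.misses += 1
        row = self.backing_table[row_id]
        self.rows[row_id] = row
        if len(self.rows) > self.capacity:
            self.rows.popitem(last=False)    # evict the least recently used row
        return row

    def pooled_lookup(self, row_ids):
        """Sum-pool the embeddings of one sparse feature (a bag of row ids)."""
        dim = len(self.backing_table[0])
        pooled = [0.0] * dim
        for row_id in row_ids:
            for d, x in enumerate(self.lookup(row_id)):
                pooled[d] += x
        return pooled


table = [[float(i), float(2 * i)] for i in range(10)]
cache = EmbeddingCache(table, capacity=2)
print(cache.pooled_lookup([1, 2, 1]), cache.hits, cache.misses)
```

Embedding accesses in recommendation workloads are typically skewed toward a small set of popular rows, so tracking the hit rate of such a cache is a quick way to estimate how much a faster tier could help before building the real thing.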

Qualifications:

- Strong programming skills in C/C++/Python

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Experience with performance engineering is a plus, e.g., MIT 6.1060 (previously 6.172)

- GPU/CUDA programming is a plus

References

- Optimizing CPU Performance for Recommendation Systems At-Scale, ISCA 2023

- GRACE, ASPLOS 2023

- EVStore, ASPLOS 2023

Graph AI Systems [Elx Link]

Deep learning is good at capturing hidden patterns in Euclidean data (images, text, videos). But what about applications where data is generated from non-Euclidean domains and represented as graphs with complex relationships and interdependencies between objects? That’s where Graph AI, or Graph ML, comes in. Handling the complexity of graph data and graph algorithms requires innovations in every layer of the computer system, including both software and hardware.

In this project we will design and build efficient graph AI systems to support scalable graph AI computing. In particular, we will build software frameworks for Graph AI and ML, e.g., graph neural networks (GNN), graph pattern mining (GPM) and graph sampling, and hardware accelerators that further enhance system efficiency and scalability.
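Graph sampling, one of the workloads mentioned above, can be illustrated with layer-wise uniform neighbor sampling, the kind of mini-batch sampling commonly used when training GNNs. This is a minimal CPU sketch under assumed names (`sample_neighbors`, `fanouts`); the GPU systems in the references below implement far more elaborate versions.

```python
import random


def sample_neighbors(adj, seeds, fanouts, rng):
    """Layer-wise uniform neighbor sampling.

    adj maps node -> list of neighbors; fanouts[i] caps how many neighbors
    are kept per node at hop i. Returns one list of (src, dst) edges per hop.
    """
    layers = []
    frontier = list(seeds)
    for fanout in fanouts:
        edges = []
        next_frontier = set()
        for node in frontier:
            nbrs = adj.get(node, [])
            # Keep every neighbor if there are few; otherwise sample without replacement.
            picked = nbrs if len(nbrs) <= fanout else rng.sample(nbrs, fanout)
            for nbr in picked:
                edges.append((node, nbr))
                next_frontier.add(nbr)
        layers.append(edges)
        frontier = sorted(next_frontier)   # sampled nodes seed the next hop
    return layers


adj = {0: [1, 2, 3, 4], 1: [0, 2], 2: [0, 1, 3], 3: [0, 2], 4: [0]}
for hop, edges in enumerate(sample_neighbors(adj, [0], [2, 2], random.Random(0))):
    print(hop, edges)
```

Capping the fanout bounds the size of each mini-batch subgraph regardless of node degree, which keeps memory use predictable on skewed real-world graphs.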

Qualifications:

- Strong programming skills in C/C++/Python

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Basic understanding of computer architecture, e.g., MIT 6.1910 (previously 6.004)

- Experience with performance engineering is a plus, e.g., MIT 6.1060 (previously 6.172)

- GPU/CUDA programming is a plus

- Familiarity with deep learning frameworks is a plus, e.g., PyTorch

References

- F^2CGT, VLDB 2024

- gSampler, SOSP 2023 [Code]

- NextDoor, EuroSys 2021 [Code]

- Scalable Graph Sampling on GPUs with Compressed Graph, CIKM 2022

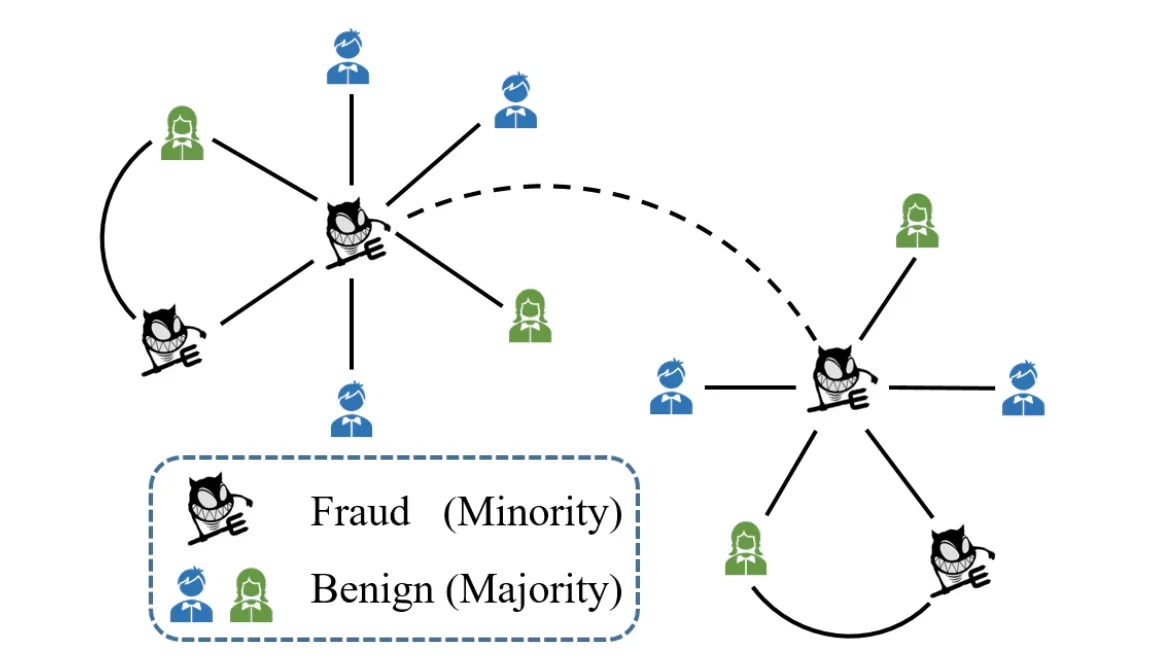

Graph AI for Financial Security [Elx Link]

The advent of cryptocurrency introduced by Bitcoin ignited an explosion of technological and entrepreneurial interest in payment processing. Dampening this excitement was Bitcoin’s bad reputation. Many criminals used Bitcoin’s pseudonymity to hide in plain sight, conducting ransomware attacks and operating dark marketplaces for the exchange of illegal goods and services.

This project offers a golden opportunity to apply machine learning to financial forensics. Bitcoin transaction data naturally forms a financial transaction graph, on which we can apply graph machine learning and graph pattern mining techniques to automatically detect illegal activities. We will explore the identification and clustering of frequent subgraphs to uncover money laundering patterns, and conduct link prediction on the wallets (nodes) to unveil the malicious actors behind the scenes.
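To make the notion of a subgraph pattern concrete, the sketch below enumerates directed 3-cycles (funds moving wallet A to B to C and back to A) in a small transaction graph. Treating such cycles as a pattern of interest is an assumption made purely for illustration; the wallet names and the function are hypothetical, and real pattern mining targets larger and more varied subgraphs.

```python
def find_directed_triangles(edges):
    """Enumerate directed 3-cycles a -> b -> c -> a, each reported once."""
    succ = {}
    for src, dst in edges:
        succ.setdefault(src, set()).add(dst)
    triangles = []
    for a in succ:
        for b in succ[a]:
            for c in succ.get(b, ()):
                # Require a to be the smallest id so each cycle is listed exactly once.
                if a < b and a < c and b != c and a in succ.get(c, ()):
                    triangles.append((a, b, c))
    return sorted(triangles)


transactions = [
    ("w1", "w2"), ("w2", "w3"), ("w3", "w1"),   # a closed loop of transfers
    ("w3", "w4"), ("w4", "w1"),                 # an unrelated path back to w1
]
print(find_directed_triangles(transactions))
```

Even this tiny pattern needs a nested traversal over neighbor sets, which hints at why mining larger patterns on a graph with hundreds of millions of transactions calls for specialized systems.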

Qualifications:

- Strong programming skills in Python and C/C++

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Some familiarity with deep learning frameworks such as PyTorch

AI/ML for Performance Engineering [Elx Link]

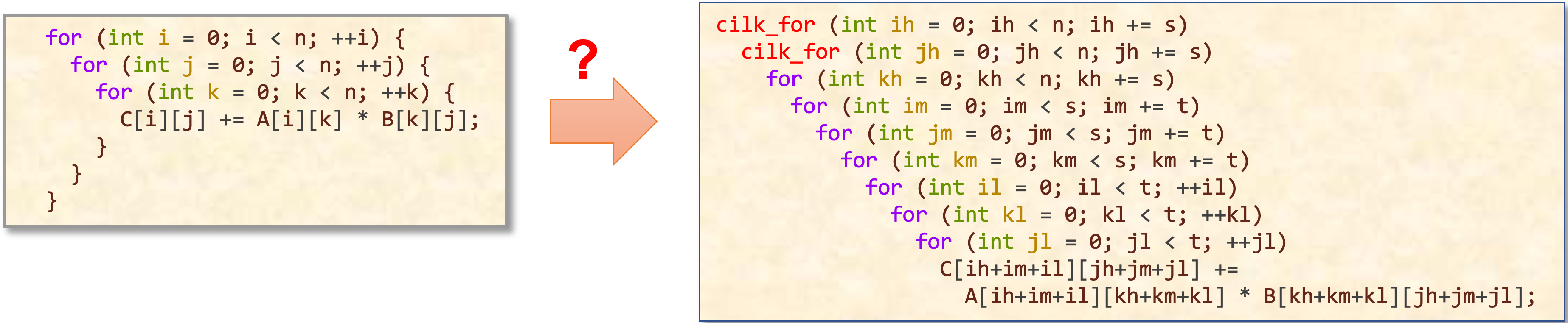

Generative AI, such as Large Language Models (LLMs), has been successfully used to generate computer programs, a.k.a. code generation. However, LLM performance degrades substantially on code optimization, a.k.a. software performance engineering (SPE), where the goal is to generate code that is not just correct but also fast.

This project aims to leverage the capabilities of LLMs to revolutionize the area of automatic code optimization. We focus on transforming existing sequential code into high-performance, parallelized code, optimized for specific parallel hardware.

Qualifications:

- Strong programming skills in Python and C/C++

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Familiarity with LLMs such as ChatGPT and Code Llama

References

- Performance-Aligned LLMs for Generating Fast Code

- Learning Performance Improving Code Edits

- Can Large Language Models Write Parallel Code?

- MPIrigen: MPI Code Generation through Domain-Specific Language Models

- The Landscape and Challenges of HPC Research and LLMs

Efficient Robotics Computing [Elx Link]

The advancement of robotics technology is rapidly changing the world we live in. With predictions of 20 million robots by 2030 and a market capitalization of US$210 billion by 2025, it is clear that robotics will play an increasingly important role in society. To become widespread, robots need to meet the demands of real-world environments, which requires them to be autonomous and capable of performing complex artificial intelligence (AI) tasks in real time.

In this project we aim to build software and hardware systems for robotics.

Qualifications:

- Strong programming skills in C/C++/Python

- Experience with design and analysis of algorithms, e.g., MIT 6.1220 (previously 6.046)

- Experience with performance engineering is a plus, e.g., MIT 6.1060 (previously 6.172)

- Parallel OpenMP or GPU/CUDA programming is a plus

References

- Phillip B. Gibbons (CMU)'s Lab. Tartan: Microarchitecting a Robotic Processor, ISCA 2024

- Tor Aamodt (UBC)'s Lab. Collision Prediction for Robotics Accelerators, ISCA 2024

- Tor Aamodt (UBC)'s Lab. Energy-Efficient Realtime Motion Planning, ISCA 2023

- Phillip B. Gibbons (CMU)'s Lab. Agents of Autonomy: A Systematic Study of Robotics on Modern Hardware, SIGMETRICS 2023

- Sabrina M. Neuman, Vijay Janapa Reddi (Harvard)'s Lab. RoboShape, ISCA 2023

- Lydia Kavraki (Rice)'s Lab

- Sophia Shao (Berkeley)'s Lab. RoSÉ

- A Survey of FPGA-Based Robotic Computing

- Phillip B. Gibbons (CMU)'s Lab. RACOD, ISCA 2022