GA-ANN-GA Control Model in Space Travel

Ying-Peng Zhang

Zhi-Zhuo Zhang

Introduction

This project addresses the control of interstellar aeronautics. By analyzing and comparing the Genetic Algorithm (GA) with the traditional algorithms in this area, we put forward a new GA-based algorithm to solve the complex control problem, and the experimental results show that the scheme is feasible in practice. We improve the performance of GA in the following way: we introduce a new coding scheme, design new mutation operators on top of it, and discard the crossover operator. A new heuristic genetic pattern derived from "Required Genetic" is proposed and implemented. Further, we propose a universal model of a mixed intelligent system, "GA-ANN-GA", and show that it is advanced and reliable. We also adopted several established software development practices, such as Model-View-Controller (MVC) and Test-Driven Development (TDD), so the code should be robust and portable, and we expect this laboratory result to be easy to port and extend. Finally, we sum up the experience gained from the work, discuss its deficiencies, and propose some possible solutions.

Originality

1. Invent an instruction-time control series encoding method and prove its effectiveness.

2. Present a novel control model, “GA-ANN-GA”, which shows great properties in robotics.

3. Prove that adding linear units to a feed-forward neural network can greatly improve GA training and accelerate its convergence.

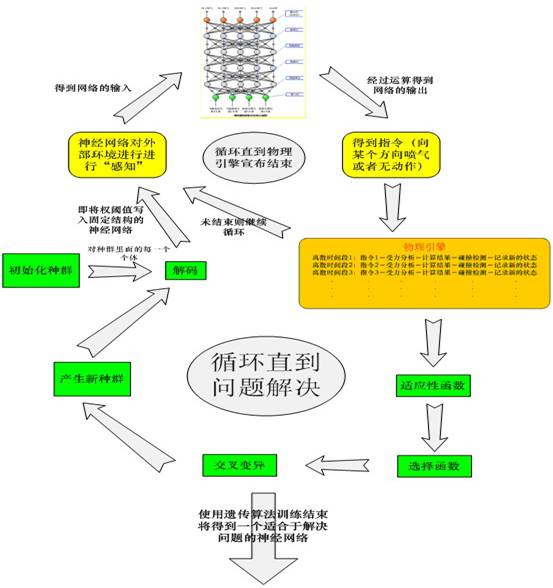

GA-ANN-GA Control Model

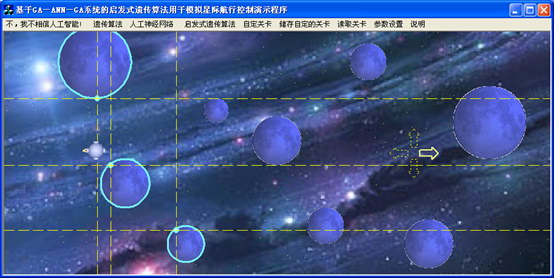

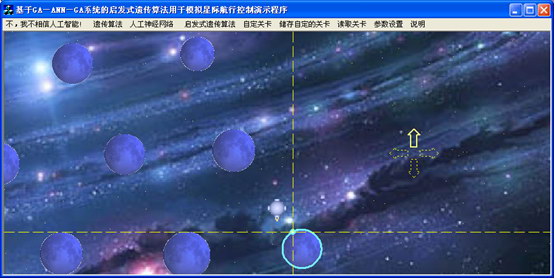

A simple robotics problem is navigation. Given a map full of obstacles, the robot should find its way to the target place without hitting any obstacle. If the robot can sense the whole map, this becomes an optimization problem: how to find the shortest path. More generally, however, the robot can only sense a small part of the map near its current location, and the task becomes a “Sense-Action” problem. How should the robot be trained, and what kind of learner is most suitable? Our “GA-ANN-GA” model provides a powerful framework for answering both questions. The following illustration is set against the background of a space travel agent, which is required not only to avoid hitting aerolites in space but also to optimize multiple objectives, including energy cost, degree of safety, and travel time.

For easier understanding, we simplify the space environment to the framework of Newton’s classical mechanics and ignore the gravitation between the spaceship and the aerolites. The motion of the spaceship is controlled by thrusters pointing in different directions: there are 4 thrusters (left, right, up, down) in the 2D version and 6 thrusters in the 3D version. In each small time span, our AI agent decides which thruster is active, or that none is.

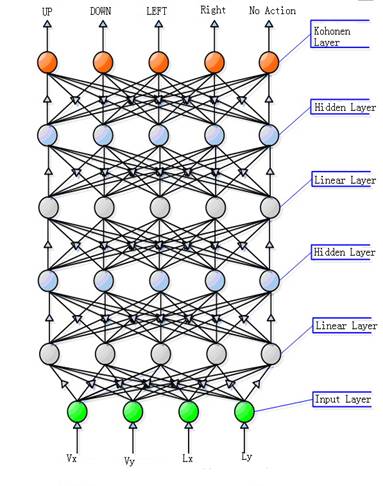
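The simplified 2D thruster model described above can be sketched as a single time-step update. This is only an illustration under stated assumptions: the action codes, the `Ship` class, the `step` function, the unit acceleration, and the convention that a thruster pushes the ship in the direction it is named after are all hypothetical and not taken from the project code.

```python
from dataclasses import dataclass

# Hypothetical action codes: 0 = no thruster, 1..4 = left, right, up, down.
# Assumption: firing a thruster accelerates the ship in the named direction.
THRUST_DIRECTIONS = {
    0: (0.0, 0.0),
    1: (-1.0, 0.0),
    2: (1.0, 0.0),
    3: (0.0, 1.0),
    4: (0.0, -1.0),
}


@dataclass
class Ship:
    x: float = 0.0
    y: float = 0.0
    vx: float = 0.0
    vy: float = 0.0


def step(ship, action, dt=1.0, accel=1.0):
    """Advance the ship by one small time span under Newtonian mechanics.

    Gravitation is ignored, so the only force comes from the active thruster.
    The velocity is updated first and the position then uses the new velocity.
    """
    ax, ay = THRUST_DIRECTIONS[action]
    vx = ship.vx + ax * accel * dt
    vy = ship.vy + ay * accel * dt
    return Ship(ship.x + vx * dt, ship.y + vy * dt, vx, vy)
```

With no thruster active the ship simply coasts at its current velocity, which is why slowing down requires firing the opposite thruster.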

GA-ANN Learner

Applying GA to train an artificial neural network has been reported in the literature to be more effective than back-propagation training in many cases. Here, following the same idea, we apply GA to update the weight of each connection in a feed-forward neural network. The structure of the network is fixed, as follows:

Input Layer:

The speed components of the spaceship in the 2D version.

The location components of the sensed aerolite in the 2D version.

Hidden Layer:

Each hidden layer has 5 sigmoid units, which work like the units (perceptrons) of a BP network.

Linear Layer:

Each linear layer has 5 linear units and acts as a linear mapping function.

Output Layer:

The output layer is a Kohonen (competitive) layer with 5 output units, each standing for a different action or for no action.
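A forward pass through a network of this shape can be sketched as follows. The sketch makes several assumptions that the text does not state: there is exactly one hidden layer and one linear layer, the linear layer sits between the hidden and output layers, biases are omitted, and the competitive output layer is approximated by picking the unit with the highest score. The names `make_network` and `choose_action` are hypothetical.

```python
import math
import random


def dense(weights, inputs):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def make_network(rng, n_in=4, n_hidden=5, n_linear=5, n_out=5):
    """Random weights: 4 inputs (2 speed + 2 aerolite location components)."""
    return {
        "hidden": random_matrix(n_hidden, n_in, rng),
        "linear": random_matrix(n_linear, n_hidden, rng),
        "output": random_matrix(n_out, n_linear, rng),
    }


def choose_action(net, inputs):
    """Return the index of the winning output unit (winner-take-all)."""
    hidden = [sigmoid(z) for z in dense(net["hidden"], inputs)]
    linear = dense(net["linear"], hidden)  # linear units: no activation
    scores = dense(net["output"], linear)
    return max(range(len(scores)), key=scores.__getitem__)
```

For example, `choose_action(make_network(random.Random(0)), [0.5, -0.2, 3.0, 1.0])` returns an integer from 0 to 4, which can be read as one of the four thrusters or as no action. In a GA setting, the three weight matrices together would form the chromosome that is mutated.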

You may wonder how we can train this network, since its output stands for the proper action with respect to the sensors’ data. In ordinary supervised learning, each input is given to the learner together with the corresponding correct output. Here, however, many possible actions can be good outputs, and we do not know whether an action is good or not until the whole action series has led the spaceship safely to the target place. So who is the supervisor that tells our agent “good job” when it takes the right action and tells it that it is wrong when a bad action occurs? The answer is another GA trainer.

GA Trainer

As we said before, once the whole map can be sensed, the problem is no longer an automatic control problem but an optimization problem. This idea really works in the training phase, because we make the map ourselves and let a spaceship agent practice in it. What’s more, we can use another GA solver for this optimization problem to find a good solution (a series of actions). In this way, the GA trainer first finds a good action combination for the given map by itself, and then it trains the GA-ANN learner. The general framework is shown as follows:

More details about how to configure the GAs and how the chromosomes are encoded can be found in:

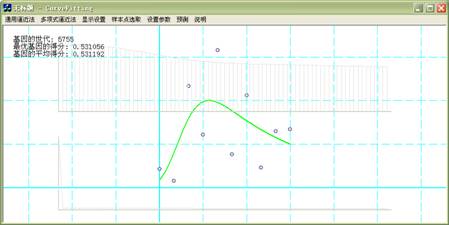

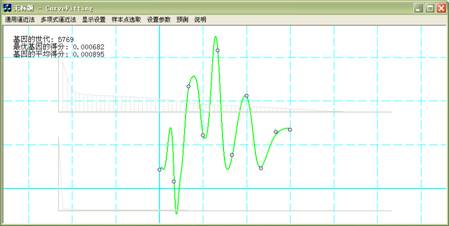
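As a rough illustration of the GA trainer searching for a series of actions on a fully known map, here is a minimal mutation-only GA. It is a sketch under assumptions, not the project's implementation: the chromosome is taken to be a list of (action, duration) genes as one reading of the instruction-time encoding, the cost only combines final distance to the target with a small fuel term, aerolites are modelled as circles, and the names `simulate`, `mutate`, `evolve` and all parameter values are hypothetical.

```python
import random

# Hypothetical action codes: 0 = none, 1..4 = left, right, up, down thruster.
ACCEL = {0: (0, 0), 1: (-1, 0), 2: (1, 0), 3: (0, 1), 4: (0, -1)}
COLLISION_COST = 1e6


def simulate(chromosome, target, obstacles):
    """Fly a list of (action, duration) genes and return a cost (lower is better)."""
    x = y = vx = vy = 0.0
    fuel = 0
    for action, duration in chromosome:
        ax, ay = ACCEL[action]
        for _ in range(duration):
            vx += ax
            vy += ay
            x += vx
            y += vy
            fuel += 1 if action != 0 else 0
            for ox, oy, radius in obstacles:
                if (x - ox) ** 2 + (y - oy) ** 2 <= radius ** 2:
                    return COLLISION_COST  # hit an aerolite
    distance = ((x - target[0]) ** 2 + (y - target[1]) ** 2) ** 0.5
    return distance + 0.1 * fuel


def mutate(chromosome, rng):
    """Change either the instruction or the duration of one random gene."""
    child = list(chromosome)
    i = rng.randrange(len(child))
    action, duration = child[i]
    if rng.random() < 0.5:
        action = rng.randrange(5)
    else:
        duration = max(1, duration + rng.choice((-1, 1)))
    child[i] = (action, duration)
    return child


def evolve(target, obstacles, rng, pop_size=30, genes=6, generations=200):
    """Mutation-only GA: keep the better half, refill with mutated copies."""
    def cost(chromosome):
        return simulate(chromosome, target, obstacles)

    population = [
        [(rng.randrange(5), rng.randint(1, 3)) for _ in range(genes)]
        for _ in range(pop_size)
    ]
    for _ in range(generations):
        population.sort(key=cost)
        parents = population[: pop_size // 2]
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return min(population, key=cost)
```

A call such as `evolve((6.0, 4.0), [(3.0, 2.0, 1.0)], random.Random(1))` returns the best action series found for that map. Because the better half of the population is always kept, the best cost never gets worse from one generation to the next. The action series found this way could then serve as the reference against which the GA-ANN learner is scored.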

A Little More about the Linear Unit

It is well known that linear units cannot enhance the expressive capability of a neural network; however, we prove that adding linear units can boost GA training. The key idea is that adding a linear layer to the neural network also adds more connections. Suppose the original optimal weight configuration is W, and the optimal weight configuration after adding linear layers is W’. Then |W| < |W’|, which means the optimal solutions are scattered by some linear transformation rather than concentrated on a few singular points in the solution space. A simple experiment on fitting a 2D function was carried out to compare the performance before and after adding linear layers.

GA-ANN without Linear Layer

GA-ANN With a Linear Layer

More details about this technique can be found in The New Technology of evolving Artificial Neural Networks Weights by Genetic Algorithms.
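One way to read the argument about scattered optimal solutions is that a linear layer gives many different weight configurations that compute exactly the same function. The small check below illustrates this for 2x2 matrices. The matrices `W`, `M` and `M_INV` are made-up values chosen for the example, and this is an interpretation of the argument, not the experiment reported here.

```python
def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply(matrix, vector):
    """Matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


# Hypothetical optimal weights of a network without a linear layer: out = W h
W = [[2.0, 1.0], [0.0, 3.0]]

# Any invertible M gives another optimum once a linear layer is inserted:
# linear layer A = M, following layer B = W M^-1, so B (A h) = W h.
M = [[1.0, 2.0], [0.0, 1.0]]
M_INV = [[1.0, -2.0], [0.0, 1.0]]
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]

config_1 = (IDENTITY, W)              # (A, B) with A = I, B = W
config_2 = (M, matmul(W, M_INV))      # a different point in weight space


def with_linear_layer(config, h):
    a, b = config
    return apply(b, apply(a, h))
```

For any input `h`, `with_linear_layer(config_1, h)` and `with_linear_layer(config_2, h)` both equal `apply(W, h)`, although the two configurations hold different weights. A mutation-based search therefore has a larger set of optimal points to land on.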

Download

We have developed a demo game to show the algorithms' performance, including ANN only, GA only, and the GA-ANN-GA algorithm. You can also try to control the spaceship yourself, and you will find how hard the task is for a human but how easy it is for the AI robot.

Evolving Neural Networks (2006 CAAI Proceeding)