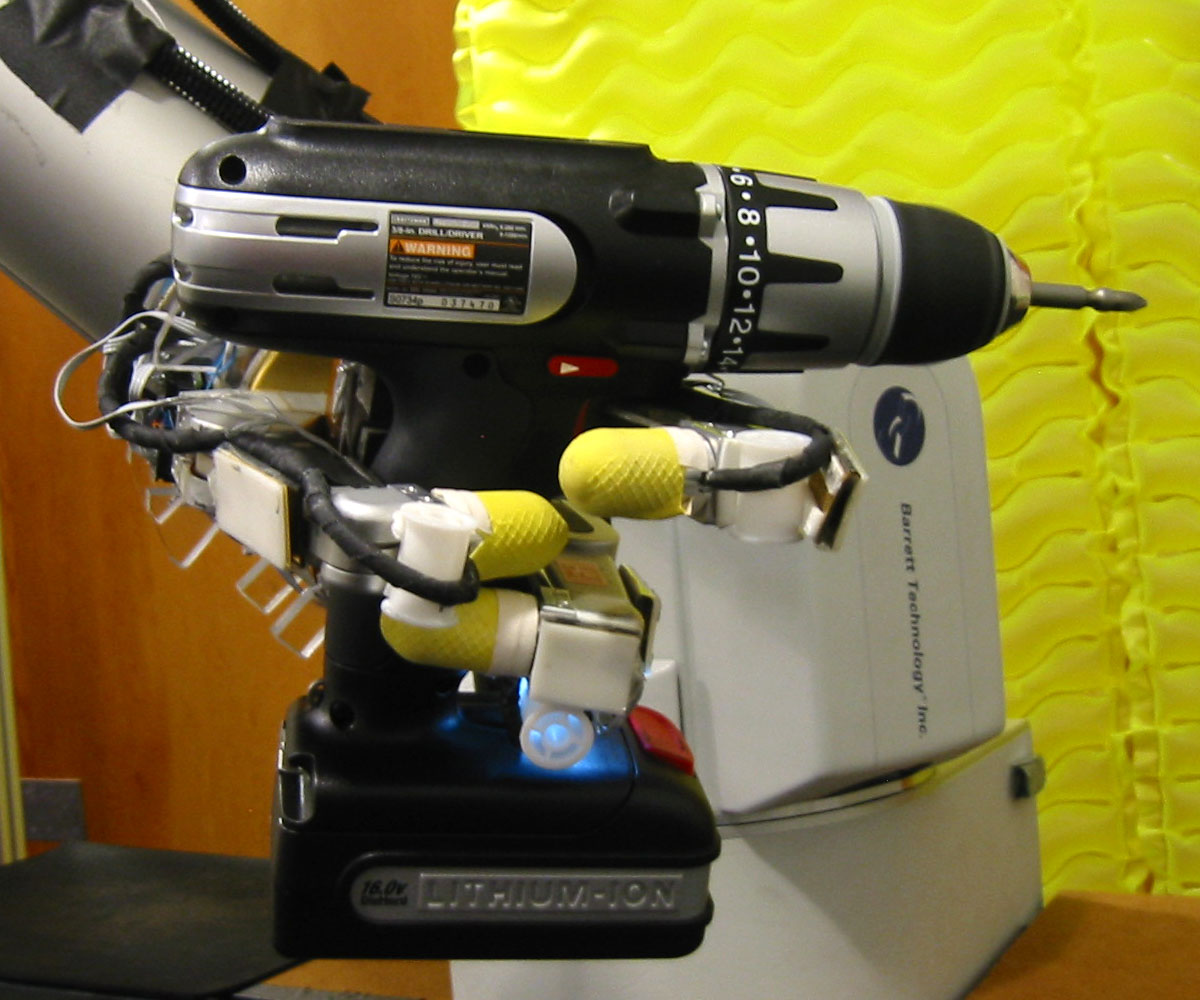

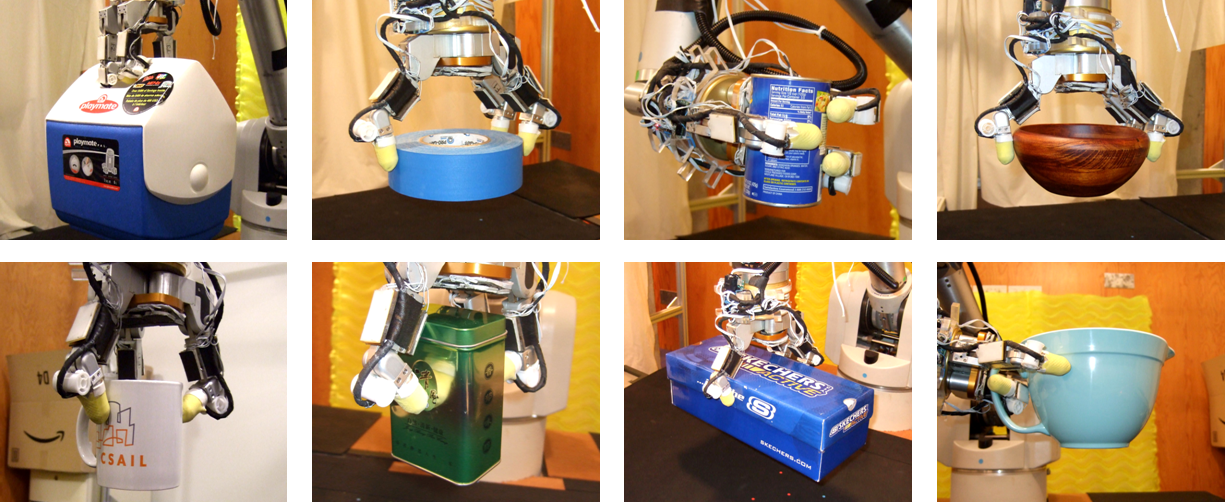

Abstract: We describe a framework for robustly grasping 3D objects of known shape in the presence of significant but bounded uncertainty. We maintain a belief state (a probability distribution over world states), model the problem as a partially observable Markov decision process (POMDP), and select actions using forward search through the belief space. Our actions are world-relative trajectories, that is, fixed trajectories expressed relative to the most likely state of the world. We use information-gathering, reorientation, and goal actions to localize the object, ensure that it is reachable, and robustly grasp it at a goal position. To choose among candidate actions tractably on-line, we compute and store off-line the observation models needed for belief update. We use this framework to successfully grasp objects (including a power drill and a Brita pitcher) despite significant uncertainty, both in simulation and with an actual robot arm.
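The belief update, action selection, and world-relative trajectories described in the abstract can be illustrated with a small sketch. It rests on assumptions that are ours, not the papers': the belief is a list of probabilities over a discrete set of (x, y, theta) object poses, the object does not move while an action runs, `obs_model[action][state][obs]` is a hypothetical table standing in for the off-line observation models, and a one-step lookahead that minimizes expected entropy replaces the full forward search. All function names are illustrative.

```python
import math

# Minimal sketch (assumptions, not the papers' code): the belief is a list of
# probabilities over a discrete set of (x, y, theta) object poses, and the
# object is assumed not to move while an action is executed.


def belief_update(belief, obs_model, action, obs):
    """Bayes update: b'(s) is proportional to P(obs | s, action) * b(s).

    obs_model[action][i][obs] is a hypothetical table, computed off-line, giving
    the probability of observing `obs` when `action` runs with the object in state i.
    """
    weights = [p * obs_model[action][i][obs] for i, p in enumerate(belief)]
    total = sum(weights)
    if total == 0:
        raise ValueError("observation is impossible under the current belief")
    return [w / total for w in weights]


def entropy(belief):
    """Shannon entropy (in nats) of a discrete belief."""
    return -sum(p * math.log(p) for p in belief if p > 0)


def expected_entropy(belief, obs_model, action, observations):
    """Expected entropy of the updated belief after executing `action`."""
    total = 0.0
    for obs in observations:
        # Probability of seeing `obs`, marginalized over the current belief.
        p_obs = sum(p * obs_model[action][i][obs] for i, p in enumerate(belief))
        if p_obs > 0:
            total += p_obs * entropy(belief_update(belief, obs_model, action, obs))
    return total


def choose_action(belief, obs_model, actions, observations):
    """One-step lookahead: pick the action expected to leave the least
    uncertainty. This stands in for a deeper forward search."""
    return min(actions, key=lambda a: expected_entropy(belief, obs_model, a, observations))


def most_likely_state(states, belief):
    """Return the pose hypothesis with the highest probability."""
    return states[max(range(len(belief)), key=lambda i: belief[i])]


def world_relative(trajectory, pose):
    """Map (x, y) waypoints stored relative to the object into the world
    frame, assuming the object is at `pose` = (x, y, theta)."""
    px, py, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(px + c * x - s * y, py + s * x + c * y) for x, y in trajectory]


if __name__ == "__main__":
    states = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0)]  # two pose hypotheses
    belief = [0.5, 0.5]
    obs_model = {
        # A probe that usually reports contact only in the first state.
        "probe": [{"contact": 0.9, "none": 0.1}, {"contact": 0.1, "none": 0.9}],
        # An action whose outcome carries no information about the pose.
        "noop": [{"contact": 0.5, "none": 0.5}, {"contact": 0.5, "none": 0.5}],
    }
    action = choose_action(belief, obs_model, ["probe", "noop"], ["contact", "none"])
    print(action)  # probe
    belief = belief_update(belief, obs_model, action, "contact")
    # Execute a fixed, object-relative trajectory at the most likely pose.
    print(world_relative([(0.1, 0.0), (0.1, 0.05)], most_likely_state(states, belief)))
```

Because the observation table is looked up rather than simulated, each candidate action costs only a few multiplications per state at run time, which is the kind of saving that precomputing observation models off-line is meant to provide.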

Papers:

"Robust Belief-Based Execution of Manipulation Programs," Kaijen Hsiao, Tomas Lozano-Perez, and Leslie Pack Kaelbling, WAFR 2008

"Relatively Robust Grasping," Kaijen Hsiao, Tomas Lozano-Perez, and Leslie Pack Kaelbling, ICAPS 2009 Workshop on Bridging the Gap Between Task and Motion Planning

More details are in Chapters 3-4 of my thesis.

Videos:

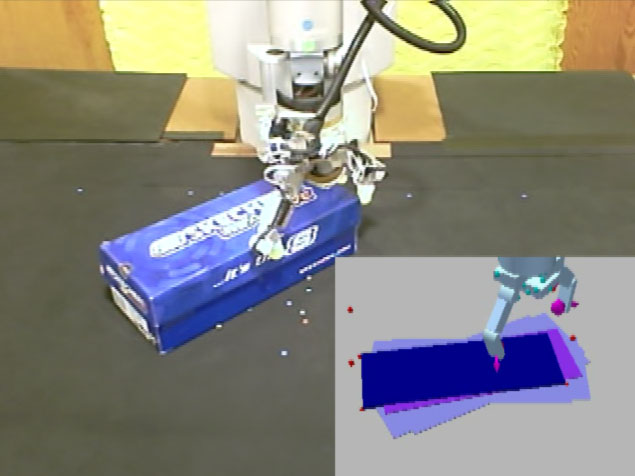

In the following videos, the inset window with the simulated robot visualizes the belief state, as in the picture above. The red spheres sit at the vertices of the object mesh placed at the most likely state, and the dark blue box also marks the most likely state. The purple box marks the mean of the belief state, and the light blue boxes show its variance: each one is placed at a state that lies one standard deviation from the mean along one of the three dimensions of uncertainty (x, y, and theta). When the robot touches the object, magenta spheres and arrows appear to show the contact locations and normals reported by the sensors. The cyan spheres, which largely overlap the hand, show where the robot's controllers are trying to move the hand.
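The quantities drawn in the visualization (most likely state, mean, and one-standard-deviation states) can be computed from a discrete belief roughly as follows. This is a hypothetical sketch: it assumes the belief is a normalized list of probabilities over (x, y, theta) poses, and the circular mean for theta is our own choice, not something stated here.

```python
import math


def wrap_angle(a):
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def belief_summary(states, belief):
    """Summarize a normalized discrete belief over (x, y, theta) poses.

    Returns the most likely state, the mean state, and the six states lying
    one standard deviation from the mean along x, y, and theta.
    """
    pairs = list(zip(states, belief))
    most_likely = max(pairs, key=lambda sp: sp[1])[0]

    mx = sum(p * s[0] for s, p in pairs)
    my = sum(p * s[1] for s, p in pairs)
    # Circular mean for theta, so that poses near +pi and -pi average sensibly.
    mth = math.atan2(sum(p * math.sin(s[2]) for s, p in pairs),
                     sum(p * math.cos(s[2]) for s, p in pairs))
    mean = (mx, my, mth)

    # Per-dimension standard deviations; theta offsets are wrapped first.
    sx = math.sqrt(sum(p * (s[0] - mx) ** 2 for s, p in pairs))
    sy = math.sqrt(sum(p * (s[1] - my) ** 2 for s, p in pairs))
    sth = math.sqrt(sum(p * wrap_angle(s[2] - mth) ** 2 for s, p in pairs))

    # Step +/- one standard deviation along each dimension in turn.
    one_sigma = []
    for dim, sd in enumerate((sx, sy, sth)):
        for sign in (1.0, -1.0):
            s = list(mean)
            s[dim] += sign * sd
            s[2] = wrap_angle(s[2])
            one_sigma.append(tuple(s))
    return most_likely, mean, one_sigma
```

For example, with two hypotheses of probability 0.75 and 0.25 that differ only by 0.2 in x, the mean lies at x = 0.05 and the standard deviation in x is about 0.087, so the two x-direction boxes sit at roughly x = 0.137 and x = -0.037.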

To see a video of grasping a power drill, click here.

To see a video of grasping a Brita pitcher, click here.

To see videos of grasping the other eight objects, click here.

To see videos of the failed grasps, click here.