My doctoral thesis explores how to enable robots to perform complex manipulation tasks that require reasoning over force, motion and contact constraints across a sequence of actions. To do so, I develop planning algorithms for multi-step robotic manipulation that use abstractions of mechanics and account for uncertainty. I apply this approach in three domains: in-hand manipulation, forceful manipulation and briefly-dynamic manipulation.

In-Hand Manipulation

Planning Forceful Manipulation

Briefly-Dynamic Manipulation


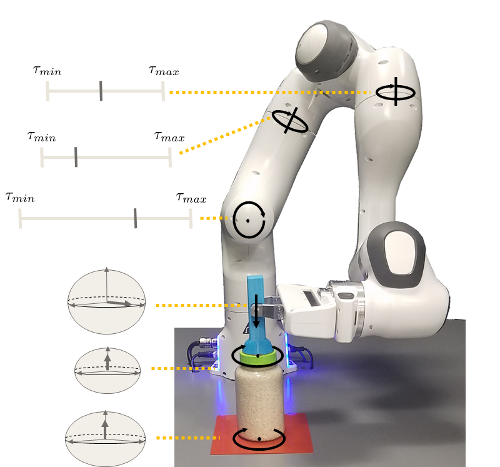

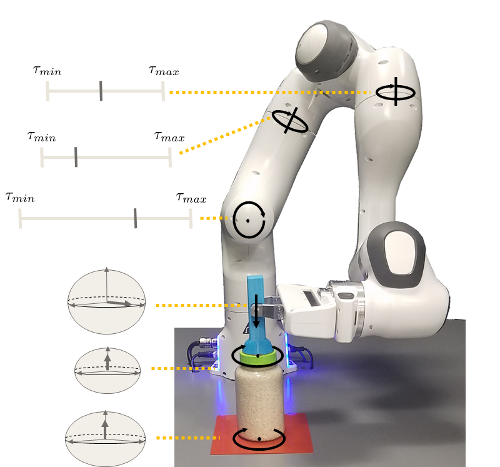

Our goal is to enable robots to perform multi-stage forceful manipulation tasks, where the robot must reason over task, motion and force constraints. While all tasks that involve contact are technically forceful, we use the term forceful manipulation for tasks in which the ability to generate and transmit the necessary forces to objects and their environment is an active limiting factor that must be considered. We first formulated this as a constrained manipulation planning problem in which the sequence of actions is given and the robot must choose the grasps, poses and paths that satisfy the constraints [3, 4]. We then formulated forceful manipulation as a task and motion planning (TAMP) problem in which the robot must plan both the sequence of parameterized actions and the constrained continuous and discrete parameters of those actions [1, 2]. We also show how cost-sensitive planning can be used to find strategies and parameters that are robust to uncertainty in the physical parameters.
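To make the idea of cost-sensitive robustness concrete, here is a minimal sketch under loudly stated assumptions; it is not the formulation from [1, 2]. It assumes a parallel-jaw grasp with two Coulomb-friction contacts that must resist a required tangential load, models the uncertain friction coefficient as uniformly distributed, and scores each candidate grip force by the negative log of its estimated probability of satisfying the friction constraint. All function names, the uniform model and the numbers are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def success_probability(grip_force: float, required_force: float,
                        mu_samples: Sequence[float]) -> float:
    """Fraction of sampled friction coefficients for which the grasp holds.

    Assumed model: two Coulomb-friction contacts, each able to supply at most
    mu * grip_force of tangential force, so the grasp holds whenever
    2 * mu * grip_force >= required_force.
    """
    held = sum(1 for mu in mu_samples if 2.0 * mu * grip_force >= required_force)
    return held / len(mu_samples)


def robustness_cost(p: float, eps: float = 1e-9) -> float:
    """Cost-sensitive score: -log(p) is 0 for a sure success and grows as p shrinks."""
    return -math.log(max(p, eps))


def choose_grip_force(candidates: List[float], required_force: float,
                      mu_low: float, mu_high: float,
                      n: int = 2000, seed: int = 0) -> Tuple[float, float]:
    """Return (cost, grip_force) for the lowest-cost candidate.

    Hypothetical uncertainty model: mu ~ Uniform(mu_low, mu_high).
    Ties in cost are broken toward the smaller grip force.
    """
    rng = random.Random(seed)
    mu_samples = [rng.uniform(mu_low, mu_high) for _ in range(n)]
    scored = [(robustness_cost(success_probability(f, required_force, mu_samples)), f)
              for f in candidates]
    return min(scored)
```

For example, with a 10 N load and μ between 0.3 and 0.6, `choose_grip_force([10.0, 15.0, 20.0, 25.0], 10.0, 0.3, 0.6)` selects 20 N: the 10 N and 15 N grasps fail for low sampled μ, while 20 N and 25 N always hold and the tie goes to the gentler grasp.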

[1]

Robust Planning for Multi-stage Forceful Manipulation. Rachel Holladay, Tomás Lozano-Pérez, Alberto Rodriguez.

International Journal of Robotics Research (IJRR), 2023.

[2]

Planning for Multi-stage Forceful Manipulation. Rachel Holladay, Tomás Lozano-Pérez, Alberto Rodriguez.

IEEE International Conference on Robotics and Automation (ICRA), 2021.

[3]

Force-and-Motion Constrained Planning for Tool Use. Rachel Holladay. Master's Thesis, MIT, 2019.

[4]

Force-and-Motion Constrained Planning for Tool Use. Rachel Holladay, Tomás Lozano-Pérez, Alberto Rodriguez.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019.


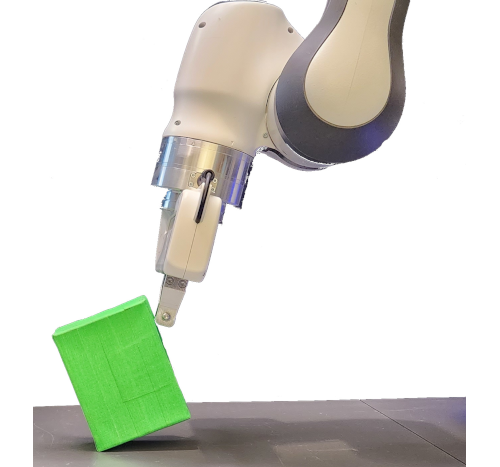

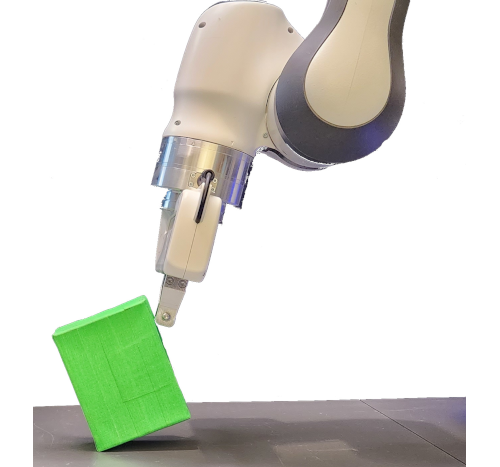

This research presents the mechanics and algorithms needed to compute the set of feasible motions of an object pushed in a plane. This set, known as the motion cone, was previously described for non-prehensile manipulation tasks in the horizontal plane. We generalize its construction to a broader set of planar tasks, such as those where external forces, including gravity, influence the dynamics of pushing, or prehensile tasks, where there are complex frictional interactions between the gripper, object, and pusher. We show that the motion cone is defined by a set of low-curvature surfaces and approximate it by a polyhedral cone. We verify its validity with thousands of pushing experiments recorded with a motion tracking system. Motion cones abstract the algebra involved in the dynamics of frictional pushing and can be used for simulation, planning, and control. In this work, we demonstrate their use for the dynamic propagation step in a sampling-based planning algorithm. By constraining the planner to explore only through the interior of motion cones, we obtain manipulation strategies that are robust against bounded uncertainties in the frictional parameters of the system. Our planner generates in-hand manipulation trajectories that involve sequences of continuous pushes, from different sides of the object when necessary, with speedups of 5 to 1,000 times over equivalent algorithms.
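The rule of exploring only through the interior of a motion cone can be sketched as a membership test on candidate object motions. This is a hypothetical illustration rather than the paper's implementation: it assumes the polyhedral approximation is stored as outward facet normals over planar twists (v_x, v_y, ω), and it approximates robustness with a fixed cosine margin away from every face.

```python
import math
from typing import Sequence

Vec = Sequence[float]


def _dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def _norm(a: Vec) -> float:
    return math.sqrt(_dot(a, a))


def in_cone_interior(twist: Vec, facet_normals: Sequence[Vec],
                     margin: float = 0.0) -> bool:
    """True if `twist` lies inside the polyhedral cone {v : n_i . v <= 0}.

    Each facet normal n_i points out of the cone (an assumed representation).
    The twist is accepted only if, for every facet, the cosine between n_i and
    the twist is below -margin, i.e. the twist stays away from every face.
    """
    t = _norm(twist)
    if t == 0.0:
        return False  # zero twist: no motion, so not a useful propagation step
    for n in facet_normals:
        if _dot(n, twist) / (_norm(n) * t) >= -margin:
            return False
    return True
```

With a hypothetical cone of forward pushes, `normals = [(-0.5, 1.0, 0.0), (-0.5, -1.0, 0.0), (-0.5, 0.0, 1.0), (-0.5, 0.0, -1.0)]` (that is, v_x > 0 with |v_y| and |ω| at most 0.5·v_x), a straight push `(1.0, 0.0, 0.0)` passes even with `margin=0.2`, while `(1.0, 0.45, 0.0)` is inside the cone but too close to a face to pass that margin.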

Planar In-Hand Manipulation via Motion Cones. Nikhil Chavan-Dafle, Rachel Holladay and Alberto Rodriguez.

International Journal of Robotics Research (IJRR), 2019.

In-Hand Manipulation via Motion Cones. Nikhil Chavan Dafle, Rachel Holladay, and Alberto Rodriguez.

Robotics: Science and Systems (RSS), 2018.

Best Student Paper Award.


Our goal is to enable robots to generate sequences of actions in which some actions, such as toppling or shoving, are dynamic for a bounded, or brief, period. Two critical challenges in planning briefly-dynamic manipulation are that the outcome of an action is highly uncertain (e.g., how far will an object slide?) and that some outcomes are particularly disastrous (e.g., the object slides off the table and out of reach). It is therefore essential for the robot to model when an action is irrecoverable, i.e., when its outcome makes it impossible for the robot to achieve its goal. Actions may be irrecoverable either because they immediately make the goal unachievable, e.g., by pushing the object off the edge, or because they are a slippery slope to eventual failure, e.g., by pushing the object into a cramped corner where it is likely to get stuck. By avoiding both cases and restricting itself to ‘safe’, i.e., recoverable, actions, the robot can tolerate inaccuracies in its model by iteratively adjusting its plan online and continuing to act. In the tabletop setting, I am currently exploring how a learned, low-fidelity model can capture when an action is irrecoverable and how to integrate this model into a decision-making framework.
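As a toy illustration of screening out irrecoverable actions (an analytic stand-in, not the learned model described above), the sketch below assumes a 1-D shove: an object at position x0 receives initial speed v and, decelerating at μg under Coulomb friction, slides a distance v²/(2μg), with μ uncertain. An outcome is treated as irrecoverable if the object ends past the table edge. The function names, the uniform friction model and the risk threshold are all hypothetical.

```python
import random
from typing import Optional, Sequence

G = 9.81  # gravitational acceleration, m/s^2


def slide_distance(speed: float, mu: float) -> float:
    """Distance an object slides from `speed` while decelerating at mu * G."""
    return speed ** 2 / (2.0 * mu * G)


def irrecoverable_rate(x0: float, speed: float, table_edge: float,
                       mu_low: float, mu_high: float,
                       n: int = 5000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(object ends beyond the table edge)."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(n):
        mu = rng.uniform(mu_low, mu_high)  # hypothetical friction uncertainty
        if x0 + slide_distance(speed, mu) > table_edge:
            failures += 1
    return failures / n


def safest_useful_shove(x0: float, goal: float, table_edge: float,
                        speeds: Sequence[float], mu_low: float, mu_high: float,
                        max_risk: float = 0.01) -> Optional[float]:
    """Among shoves with estimated risk <= max_risk, pick the one whose nominal
    (mid-range mu) final position is closest to `goal`; None if none is safe."""
    mu_mid = 0.5 * (mu_low + mu_high)
    safe = [s for s in speeds
            if irrecoverable_rate(x0, s, table_edge, mu_low, mu_high) <= max_risk]
    if not safe:
        return None
    return min(safe, key=lambda s: abs(x0 + slide_distance(s, mu_mid) - goal))
```

With the object at 0 m, the edge at 1 m, a target of 0.8 m and μ between 0.3 and 0.5, a 2.6 m/s shove lands nominally closer to the target than 2.4 m/s, but it leaves the table for roughly a fifth of the sampled μ values, so `safest_useful_shove(0.0, 0.8, 1.0, [1.0, 2.0, 2.4, 2.6], 0.3, 0.5)` returns 2.4.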

Work in Progress

Throughout my PhD I have had the joy of collaborating on several other endeavors, including authoring a survey paper on task and motion planning (TAMP), developing a gripper for in-hand manipulation, and competing in the 2017 Amazon Robotics Challenge as part of the MIT-Princeton team.

Task and Motion Planning

Gripper for In-Hand Manipulation

Amazon Robotics Challenge


The problem of planning for a robot that operates in environments containing a large number of objects, taking actions to move itself through the world as well as to change the state of the objects, is known as task and motion planning (TAMP). TAMP problems contain elements of discrete task planning, discrete–continuous mathematical programming, and continuous motion planning and thus cannot be effectively addressed by any of these fields directly. In this work, we define a class of TAMP problems and survey algorithms for solving them, characterizing the solution methods in terms of their strategies for solving the continuous-space subproblems and their techniques for integrating the discrete and continuous components of the search.
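One family of strategies for integrating the discrete and continuous components fixes a discrete plan skeleton first and only then searches for continuous parameters that satisfy its constraints. The toy sketch below illustrates that pattern on a hypothetical 1-D world with one block, a goal interval and a forbidden placement interval; the domain, the function names and the rejection-sampling loop are illustrative assumptions, not code from the survey.

```python
import random
from typing import List, Optional, Sequence, Tuple

# Hypothetical toy domain: one block on a 1-D table, starting at x = 0.
GOAL = (4.0, 5.0)      # the block must end inside this interval
OBSTACLE = (2.0, 3.0)  # placing the block inside this interval is forbidden

Step = Tuple[str, Optional[float]]


def sample_parameter(action: str, rng: random.Random) -> Optional[float]:
    """Sample the continuous parameter of an action (only 'place' has one)."""
    return rng.uniform(0.0, 10.0) if action == "place" else None


def simulate(plan: Sequence[Step]) -> Optional[float]:
    """Return the block's final position, or None if any constraint is violated."""
    x, held = 0.0, False
    for action, param in plan:
        if action == "pick":
            if held:
                return None
            held = True
        elif action == "place":
            if not held or OBSTACLE[0] <= param <= OBSTACLE[1]:
                return None
            x, held = param, False
    return None if held else x


def sequence_then_satisfy(skeletons: Sequence[Sequence[str]],
                          tries: int = 200, seed: int = 0) -> Optional[List[Step]]:
    """Try skeletons in order; for each, rejection-sample continuous parameters."""
    rng = random.Random(seed)
    for skeleton in skeletons:   # discrete search over plan skeletons
        for _ in range(tries):   # continuous search by rejection sampling
            plan = [(a, sample_parameter(a, rng)) for a in skeleton]
            x = simulate(plan)
            if x is not None and GOAL[0] <= x <= GOAL[1]:
                return plan
    return None
```

Calling `sequence_then_satisfy([(), ("pick",), ("pick", "place")])` exhausts the two shorter skeletons, which can never reach the goal, and then returns a pick followed by a placement inside the goal interval. Real TAMP solvers replace naive sampling with far more informed samplers and constraint solvers.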

Integrated Task and Motion Planning. Caelan Reed Garrett, Rohan Chitnis, Rachel Holladay, Beomjoon Kim, Tom Silver, Leslie Pack Kaelbling, and Tomás Lozano-Pérez.

Annual Review of Control, Robotics, and Autonomous Systems, 2021.


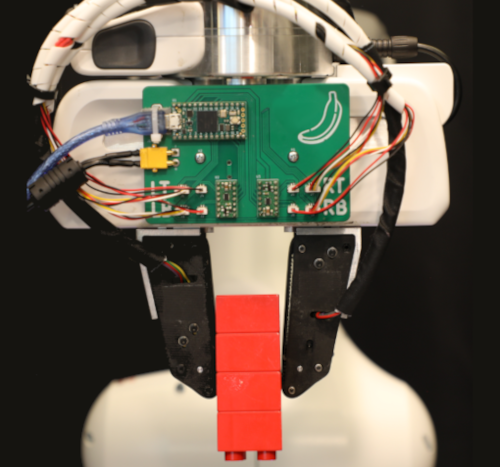

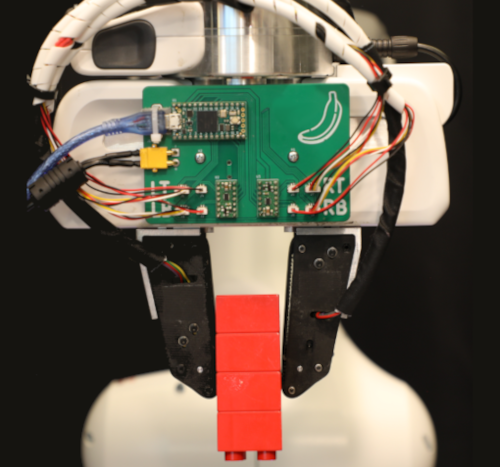

Many manipulation tasks require task-specific grasps that may initially be unavailable, requiring the robot to transition from grasp to grasp. Current approaches to in-hand manipulation often involve complex hardware or algorithms, which limits their use in real-world tasks. In this work, we present an integrated approach to in-hand manipulation through Belt Orienting Phalanges (BOP), maximizing gripper capability by considering both hardware and algorithm design. BOP is a parallel-jaw gripper where each finger has two sets of belts. Together, the two fingers’ belts can impart forces and torques onto a grasped object to control its roll, pitch and translation. These movements form the basis of motion primitives that can be sequenced together for in-hand manipulation of objects as well as for complex motions such as syringe actuation and fingernail-style lifting. We characterize these motion primitives, develop a grasp-to-grasp motion planner, and demonstrate the potential of BOP through the real-world example of screwing a light bulb into a socket.
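A grasp-to-grasp planner over sequenced primitives can be sketched as a graph search. The version below is a hypothetical simplification, not the planner from the paper: it tracks only the object's orientation as one of the 24 axis-aligned rotations of a cuboid, assumes the roll and pitch primitives are ±90° rotations about the gripper's x and y axes, and runs breadth-first search for a shortest primitive sequence. Translation and all contact mechanics are omitted.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Matrix = Tuple[Tuple[int, int, int], ...]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 3x3 integer matrices."""
    return tuple(tuple(sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3))
                 for i in range(3))


def transpose(m: Matrix) -> Matrix:
    """Transpose, which is the inverse for a rotation matrix."""
    return tuple(zip(*m))


IDENTITY: Matrix = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
ROLL_POS: Matrix = ((1, 0, 0), (0, 0, -1), (0, 1, 0))   # +90 deg about x (assumed roll axis)
PITCH_POS: Matrix = ((0, 0, 1), (0, 1, 0), (-1, 0, 0))  # +90 deg about y (assumed pitch axis)

# Hypothetical primitive set; each primitive rotates the object in the gripper frame.
PRIMITIVES: Dict[str, Matrix] = {
    "roll+": ROLL_POS, "roll-": transpose(ROLL_POS),
    "pitch+": PITCH_POS, "pitch-": transpose(PITCH_POS),
}


def plan_regrasp(start: Matrix, goal: Matrix) -> Optional[List[str]]:
    """Breadth-first search for a shortest primitive sequence mapping start to goal."""
    parents: Dict[Matrix, Tuple[Optional[Matrix], Optional[str]]] = {start: (None, None)}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            path: List[str] = []
            while parents[current][0] is not None:
                prev, name = parents[current]
                path.append(name)
                current = prev
            return path[::-1]
        for name, rot in PRIMITIVES.items():
            nxt = matmul(rot, current)  # gripper-frame rotation: left-multiply
            if nxt not in parents:
                parents[nxt] = (current, name)
                queue.append(nxt)
    return None
```

Because ±90° rotations about two perpendicular axes generate all 24 axis-aligned orientations, every such grasp orientation is reachable in this toy model; for instance, flipping the object 180° about x takes two roll primitives.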

In-Hand Manipulation with a Simple Belted Parallel-Jaw Gripper. Gregory Xie, Rachel Holladay, Lillian Chin, Daniela Rus.

IEEE Robotics and Automation Letters, 2023.


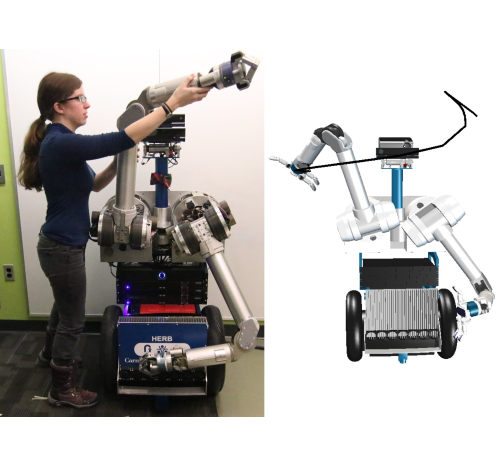

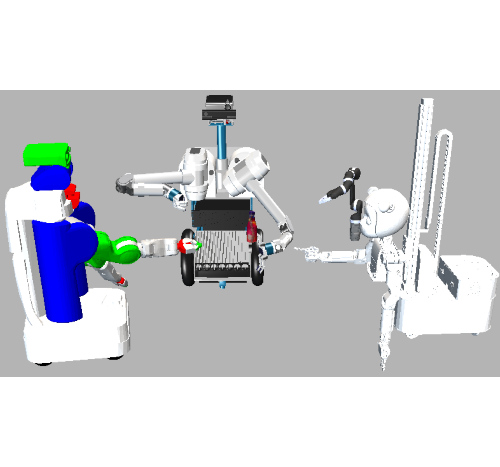

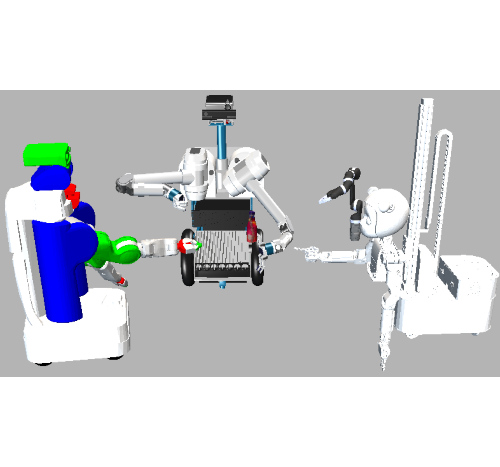

As a member of the MCube Lab, I was part of the MIT-Princeton team for the 2017 Amazon Robotics Challenge (ARC), which focused on grasping and stowing objects in clutter. The team developed a robotic pick-and-place system that can grasp and recognize both known and novel objects in cluttered environments. The key new feature of the system is that it handles a wide range of object categories without needing any task-specific training data for novel objects. To achieve this, it first uses an object-agnostic grasping framework to map from visual observations to actions, inferring dense pixel-wise probability maps of the affordances for four different grasping primitive actions. It then executes the action with the highest affordance and recognizes picked objects with a cross-domain image classification framework that matches observed images to product images. Since product images are readily available for a wide range of objects (e.g., from the web), the system works out-of-the-box for novel objects without requiring any additional data collection or re-training. Extensive experimental results demonstrate that our multi-affordance grasping achieves high success rates for a wide variety of objects in clutter, and that our recognition algorithm achieves high accuracy for both known and novel grasped objects. The approach, as used in the challenge, took first place in the stowing task. All code, datasets, and pre-trained models are available online at

http://arc.cs.princeton.edu/
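The action-selection step described above (a dense affordance map per grasping primitive, then execution of the single highest-scoring action) reduces to an argmax over primitives and pixels. This sketch assumes the maps are already computed and passed as nested lists; the primitive names are placeholders, not the team's identifiers, and the released code linked above is the authoritative version.

```python
from typing import Dict, List, Tuple

AffordanceMap = List[List[float]]  # per-pixel success probability, indexed [row][col]


def best_action(maps: Dict[str, AffordanceMap]) -> Tuple[str, int, int, float]:
    """Return (primitive, row, col, score) for the highest affordance across all maps."""
    best = None
    for primitive, grid in maps.items():
        for r, row in enumerate(grid):
            for c, score in enumerate(row):
                if best is None or score > best[3]:
                    best = (primitive, r, c, score)
    if best is None:
        raise ValueError("no affordance values provided")
    return best
```

Comparing all primitives in one argmax lets a weaker primitive win wherever its map is locally most confident, which matches the "highest affordance" rule in the text.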

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching. Andy Zeng, Shuran Song, Kuan-Ting Yu, Elliott Donlon, Francois Hogan, Maria Bauza, Daolin Ma, Orion Taylor, Melody Liu, Eudald Romo, Nima Fazeli, Ferran Alet, Nikhil Chavan Dafle, Rachel Holladay, Isabella Morona, Prem Qu Nair, Druck Green, Ian Taylor, Weber Liu, Thomas Funkhouser, Alberto Rodriguez.

International Journal of Robotics Research (IJRR), 2019.

Robotic Pick-and-Place of Novel Objects in Clutter with Multi-Affordance Grasping and Cross-Domain Image Matching. Andy Zeng, Shuran Song, Kuan-Ting Yu, Elliott Donlon, Francois R Hogan, Maria Bauza, Daolin Ma, Orion Taylor, Melody Liu, Eudald Romo, Nima Fazeli, Ferran Alet, Nikhil Chavan Dafle, Rachel Holladay, Isabella Morona, Prem Qu Nair, Druck Green, Ian Taylor, Weber Liu, Thomas Funkhouser, and Alberto Rodriguez.

IEEE International Conference on Robotics and Automation (ICRA), 2018.

Amazon Robotics Best Systems Paper Award in Manipulation.

As an undergraduate, my research lay at the intersection of motion planning and human-robot interaction. I focused on enabling robots to clearly communicate their intent and on creating more capable assistive robots. My undergraduate thesis focused on constrained motion planning in task space.

Constrained Motion Planning in Task Space

Communicating Robotic Intent

Assistive Robotics


This work focuses on the constraint that requires the robot’s end effector (hand) to trace out a shape [2]. Formally, our goal is to produce a configuration space path that closely follows a desired task space path despite the presence of obstacles. Adapting metrics from computational geometry, we show that the discrete Fréchet distance is an effective and natural tool for capturing the notion of closeness between two paths in task space. We then introduce two algorithmic approaches for efficiently planning with this metric. The first is a trajectory optimization approach that directly minimizes the Fréchet distance between the produced path and the desired task space path [3]. The second searches a configuration space graph that embeds the task space path [1].
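The discrete Fréchet distance between two sampled paths can be computed with the standard quadratic-time dynamic program over pairs of points. The sketch below assumes task-space paths are given as lists of Euclidean points (for example, end-effector positions) and fills the table iteratively rather than recursively; it illustrates the metric only, not the planners from [1] or [3].

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def discrete_frechet(p: List[Point], q: List[Point]) -> float:
    """Discrete Fréchet distance between polylines p and q, O(len(p) * len(q))."""
    if not p or not q:
        raise ValueError("paths must be non-empty")
    n, m = len(p), len(q)
    # ca[i][j]: best achievable max leash length coupling p[:i+1] with q[:j+1]
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[i][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][j], d)
            else:
                ca[i][j] = max(min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1]), d)
    return ca[n - 1][m - 1]
```

Unlike an average pointwise error, this metric is governed by the single worst deviation under the best monotone pairing of points, so one large detour from the desired shape dominates the score.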

[1]

Minimizing Task Space Fréchet Error via Efficient Incremental Graph Search. Rachel Holladay, Oren Salzman, and Siddhartha Srinivasa.

IEEE Robotics and Automation Letters, 2019.

[2]

Following Paths in Task Space: Distance Metrics and Planning Algorithms. Rachel Holladay. Undergraduate Honors Thesis, CMU, 2017.

Allen Newell Award for Excellence in Undergraduate Research.

[3]

Distance Metrics and Algorithms for Task Space Path Optimization. Rachel Holladay and Siddhartha Srinivasa.

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2016.


Through several projects, we have explored how robots can express or hide their intent through motion. The first project investigated how to generate deceptive robot motion, i.e., motion that communicates false information or hides information altogether [2, 4]. We present an analysis of deceptive motion with a mathematical model that enables the robot to autonomously generate deceptive motion, along with a study on the implications of deceptive motion for human-robot interaction. Following this, we focused on enabling robots to use deictic gestures. We presented a mathematical model for legible pointing and discussed how the robot will sometimes need to trade off efficiency for the sake of clarity [3]. To generalize gestures to the robot's configuration space, we developed RoGuE (Robot Gesture Engine), a motion planning approach to generating gestures that parameterizes gestures as task-space constraints on robot trajectories and goals [1].
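Both deceptive and legible motion rest on a model of how an observer infers a robot's goal from partial motion. A cost-based form often used in this line of work scores each goal G, after the robot has moved from S to Q along ξ, as P(G | ξ) ∝ exp(-C(ξ) - V_G(Q)) / exp(-V_G(S)) · P(G). The sketch below assumes path length for C and straight-line distance for the optimal cost-to-go V_G; the papers above may use different costs, and the function names are hypothetical.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

Point = Tuple[float, float]


def path_length(path: Sequence[Point]) -> float:
    """Sum of Euclidean segment lengths along a polyline."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def goal_posterior(partial: Sequence[Point], goals: Dict[str, Point],
                   prior: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Observer's belief over candidate goals after seeing the partial motion.

    Assumed cost model: C = path length travelled so far and V_G(q) = straight-line
    distance from q to goal G. Unnormalized score:
        exp(-C(S->Q) - V_G(Q)) / exp(-V_G(S)) * P(G)
    A uniform prior is used when `prior` is None.
    """
    start, current = partial[0], partial[-1]
    travelled = path_length(partial)
    scores = {}
    for name, goal in goals.items():
        p_goal = 1.0 if prior is None else prior[name]
        scores[name] = math.exp(-travelled - math.dist(current, goal)
                                + math.dist(start, goal)) * p_goal
    total = sum(scores.values())
    return {name: s / total for name, s in scores.items()}
```

Under this model, legible motion tries to make the posterior peak on the true goal early, while deceptive motion tries to keep it high on a decoy goal for as long as possible.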

[1]

RoGuE: Robot Gesture Engine. Rachel Holladay and Siddhartha Srinivasa.

AAAI Spring Symposium: "Enabling Computing Research in Socially Intelligent Human-Robot Interaction: A Community-Driven Modular Research Platform". 2016.

[2]

Deceptive Robot Motion: Synthesis, Analysis and Experiments. Anca Dragan, Rachel Holladay, and Siddhartha Srinivasa.

Autonomous Robots, 2015.

[3]

Legible Robot Pointing. Rachel Holladay, Anca Dragan, and Siddhartha Srinivasa.

IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 2014.

[4]

An Analysis of Deceptive Robot Motion. Anca Dragan, Rachel Holladay, and Siddhartha Srinivasa.

Robotics: Science and Systems (RSS), 2014.


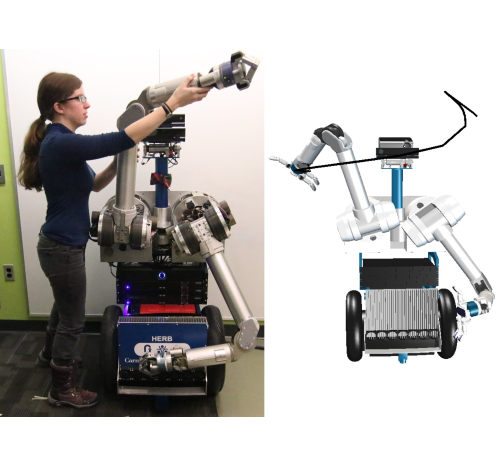

Assistive robotic arms are increasingly enabling users with upper extremity disabilities to perform activities of daily living on their own. To develop more intelligent assistive arms, we began by focusing on shared autonomy in the teleoperation setting. These high-dimensional robotic systems are often controlled with simple, low-dimensional interfaces such as joysticks and sip-and-puff interfaces that require the user to switch between multiple control modes. Our interviews with daily users of the Kinova JACO arm identified mode switching as a key problem, in terms of both time and cognitive load. We further confirmed objectively that mode switching consumes about 17.4% of execution time even for able-bodied users controlling the JACO. Our key insight is that even a simple model of mode switching, such as time optimality, combined with a simple intervention, such as automatically switching modes, significantly improves user satisfaction [2]. Also within the shared autonomy paradigm, we explored how to generate robot arm trajectories that move the robot out of the way, minimizing how much of the scene the robot occludes from the user [1].

[1]

Visibility Optimization in Robot Manipulation Tasks. Rachel Holladay, Laura Herlant, Henny Admoni and Siddhartha Srinivasa.

IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) "Workshop on Human-Oriented Approaches for Assistive and Rehabilitation Robotics (HUMORARR)", 2016.

[2]

Assistive Teleoperation of Robot Arms via Automatic Time-Optimal Mode Switching. Laura Herlant, Rachel Holladay, and Siddhartha Srinivasa.

ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2016.