Tianfan Xue, Hossein Mobahi, Frédo Durand, William T. Freeman

MIT Computer Science and Artificial Intelligence Laboratory


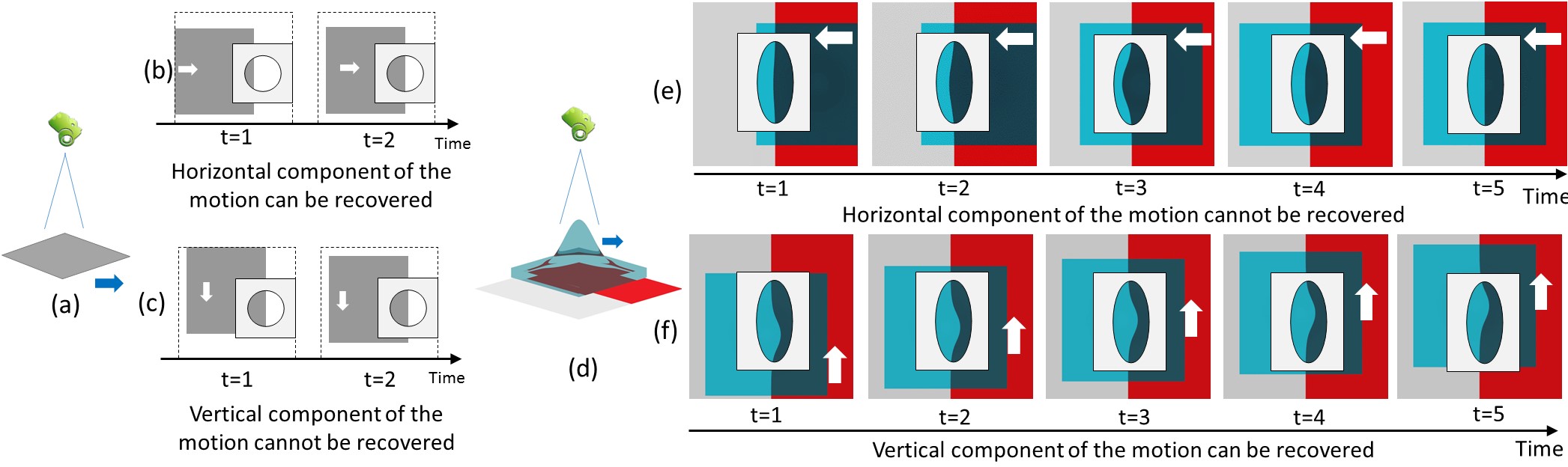

The aperture problem for a refractive object. (a) A camera is imaging an opaque moving object (gray). (b) When a vertical edge is observed within the aperture (the white circular mask), the horizontal component of the motion can be resolved. (c) The vertical component of the motion is ambiguous, because no change is observed through this aperture when the object moves vertically. (d) A camera is viewing a stationary, planar background (white and red) through a moving Gaussian-shaped glass (blue). (e) The horizontal motion is ambiguous, because the observed sequence is symmetric: if the glass moves in the opposite direction, the same sequence is observed. (f) The vertical motion can be recovered, e.g., by tracking the observed tip of the bump.

Abstract

When viewed through a small aperture, a moving image provides incomplete information about the local motion: only the component of motion along the local image gradient is constrained. An essential part of optical flow algorithms is therefore to aggregate information from nearby image locations in order to estimate all components of the motion. This limitation of local evidence for estimating optical flow is called "the aperture problem".
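To make the classical optical-flow case concrete, here is a minimal NumPy sketch of our own (not code from the paper). It assumes linearized brightness constancy, Ix·u + Iy·v + It = 0, and aggregates that constraint over one window by least squares, in the style of Lucas-Kanade; the helper names `lk_window` and `translate_pattern` and the synthetic patterns are hypothetical. For 1D structure the 2x2 structure tensor has rank 1, so only the component along the gradient is recovered; for 2D structure it has rank 2 and both components are recovered.

```python
import numpy as np


def lk_window(I0, I1):
    """Hypothetical helper: estimate one (u, v) for a whole window.

    Each pixel gives Ix*u + Iy*v + It = 0 (linearized brightness constancy).
    Stacking all pixels gives an over-determined system solved by least
    squares (Lucas-Kanade style). Returns the flow and the rank of the
    2x2 structure tensor A^T A.
    """
    Iy, Ix = np.gradient(I0)           # np.gradient returns d/drow, d/dcol
    It = I1 - I0                       # temporal derivative, unit time step
    A = np.stack([Ix.ravel(), Iy.ravel()], axis=1)
    b = -It.ravel()
    M = A.T @ A                        # structure tensor
    rank = np.linalg.matrix_rank(M, tol=1e-8 * np.trace(M))
    # With a singular M, lstsq returns the minimum-norm solution, so the
    # unconstrained component (perpendicular to the gradient) comes back as 0.
    flow, *_ = np.linalg.lstsq(A, b, rcond=None)
    return flow, rank


def translate_pattern(f, du, dv, n=32):
    """Hypothetical helper: render f on an n x n grid before and after a shift."""
    y, x = np.mgrid[0:n, 0:n].astype(float)
    return f(x, y), f(x - du, y - dv)


def edge(x, y):
    # 1D structure: a vertical grating that varies only along x
    return np.sin(0.3 * x)


def texture(x, y):
    # 2D structure: varies along both x and y
    return np.sin(0.3 * x) + np.cos(0.25 * y)


du, dv = 0.1, 0.05                     # true motion (assumed, small)
flow_edge, rank_edge = lk_window(*translate_pattern(edge, du, dv))
flow_tex, rank_tex = lk_window(*translate_pattern(texture, du, dv))

print("1D structure:", rank_edge, flow_edge)  # only u is constrained
print("2D structure:", rank_tex, flow_tex)    # u and v both recovered
```

With the vertical grating, the vertical shift `dv` leaves the frames unchanged, which is exactly the ambiguity in panel (c) of the figure.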

We pose and solve a generalization of the aperture problem for moving refractive elements. We consider a setup common in air flow imaging and telescope observation: a camera views a static background, and an unknown refractive element undergoing unknown motion lies between them. We then address a fundamental question: what does the local image motion tell us about the motion of the refractive elements?

We show that the information gleaned through a local aperture in this case is very different from that for optical flow. In optical flow, the movement of 1D structure already constrains the motion in one direction. For refractive motion, however, the movement of 1D structure in the observed sequence provides no information about the motion of the refractive element, and only one component of the motion can be recovered from 2D structure. Results on both simulated and real sequences illustrate our theory.

@article{xue2015aperture, author = {Tianfan Xue and Hossein Mobahi and Fr\'{e}do Durand and William T. Freeman}, title = {The Aperture Problem for Refractive Motion}, journal = {Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)}, year = {2015}, }

Download

Paper: pdf

Poster: pdf

Video Demo: mov

Related Publications

Refraction Wiggles for Measuring Fluid Depth and Velocity from Video, T. Xue, M. Rubinstein, N. Wadhwa, A. Levin, F. Durand, W. T. Freeman, ECCV 2014

Acknowledgements

We thank the CVPR reviewers for their comments. We also acknowledge support from Shell Research, ONR MURI grant N00014-09-1-1051, and NSF CISE 1111415 Analyzing Images Over Time.