
Market-Based Dynamic Load Balancing for StreamIt Programs


Faculty: Una-May O'Reilly


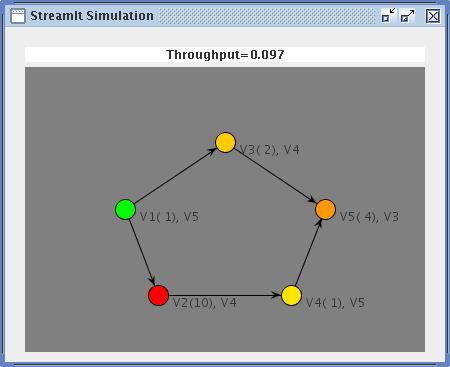

A graphical representation of the StreamIt simulator. The green node is the starting node (where the data originates). All other nodes are color-coded: yellow means the node's input buffer is empty, and red means its input buffer is full. The red node (V2) is a bottleneck: its buffer is full even though its immediate downstream neighbor (V4) has room in its input buffer.
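The bottleneck rule the figure illustrates can be written down directly. The sketch below is a minimal Python model, not the simulator's code: the `Node` class, the `find_bottlenecks` function and the example graph are hypothetical, and for a node with several consumers we assume it counts as a bottleneck only if all of them still have room.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """A stream-graph node as the simulator colours it (hypothetical model)."""
    name: str
    occupancy: int  # items currently waiting in the input buffer
    capacity: int   # maximum number of items the input buffer can hold

    def is_full(self) -> bool:
        return self.occupancy >= self.capacity


def find_bottlenecks(nodes: dict[str, Node],
                     downstream: dict[str, list[str]]) -> list[str]:
    """Flag nodes whose input buffer is full while every immediate
    downstream neighbour still has room in its own input buffer."""
    bottlenecks = []
    for name, node in nodes.items():
        if not node.is_full():
            continue
        consumers = downstream.get(name, [])
        # A full node feeding a full consumer may just be blocked on output,
        # so only blame it when all of its consumers could accept more data.
        if all(not nodes[c].is_full() for c in consumers):
            bottlenecks.append(name)
    return bottlenecks


# A layout in the spirit of the figure: V2 is full, V4 downstream has room.
example_nodes = {
    "V1": Node("V1", occupancy=0, capacity=8),  # source
    "V2": Node("V2", occupancy=4, capacity=4),
    "V3": Node("V3", occupancy=0, capacity=4),
    "V4": Node("V4", occupancy=1, capacity=4),
}
example_edges = {"V1": ["V2", "V3"], "V2": ["V4"], "V3": ["V4"], "V4": []}
print(find_bottlenecks(example_nodes, example_edges))  # ['V2']
```

Under this rule a final node with a full buffer is always flagged, since it has no consumers that could be blocking it.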

We regard the graph computation of a StreamIt program as a supply chain in which the program's input data are the raw materials entering the chain. Each filter between the first and last filters of the stream pipeline is a middle-man: it refines the goods supplied by its producers (i.e. the data in its input buffers) into an enhanced good, or data element, and sells it to the filter(s) downstream from it. At the output end of the supply chain, the final producer takes its refined goods to market and is paid for them.

Money is allocated through the pipeline in a sweep that moves upstream from the final filter to the initial filter. Each filter decides how to split the income it receives per unit of output (we arbitrarily set the final filter's income per unit to the market unit price) between the prices it pays its producers for input goods and the price it is willing to pay for processing those goods.

If a filter has been idle for some fraction of the previous checkpoint interval even though inputs were available, it should allocate more money to processing and pay less for its inputs, which may slow their delivery. Ideally, a filter is never idle and never overpays its producers. If a filter is always busy and has more than enough items in its input buffers, it should pay a little less for its inputs and a little more for a faster processor. If a filter is idle because its downstream buyer's buffers are too full to accept new items, it should reduce what it pays for a processor, since the extra speed is wasted. These spending behaviours constitute a filter's disbursement policy. We intend to implement at least one parameterized disbursement policy and to investigate how the choice of policy (the same one for all filters, or a different one per filter) affects pipeline throughput and whether it achieves high-performing dynamic load balancing.
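The upstream money sweep and the three spending behaviours can be made concrete in a few lines. This is a hypothetical Python sketch, not the project's policy: `CheckpointStats`, `Filter`, `DisbursementPolicy`, `sweep_budgets`, every step size and threshold, the precedence of the rules, and the simplifications of a linear pipeline with one input item per output item are all our assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CheckpointStats:
    """What a filter observed over the previous checkpoint interval."""
    idle_with_input: float  # fraction of time idle although input was available
    idle_blocked: float     # fraction of time idle because downstream was full
    idle_starved: float     # fraction of time idle because input was empty
    input_fill: float       # average input-buffer occupancy, from 0 to 1


@dataclass
class Filter:
    name: str
    processing_share: float = 0.5  # fraction of income spent on processing


@dataclass
class DisbursementPolicy:
    """Hypothetical parameterised policy; all step sizes are assumptions."""
    step: float = 0.10        # adjustment when idle despite available input
    small_step: float = 0.02  # "a little" adjustment when busy and well stocked
    high_water: float = 0.75  # input fill treated as "more than enough"
    min_share: float = 0.05
    max_share: float = 0.95

    def update(self, f: Filter, s: CheckpointStats) -> None:
        share = f.processing_share
        if s.idle_blocked > 0:
            share -= self.step        # processor speed is being wasted
        elif s.idle_with_input > 0:
            share += self.step        # pay more for processing, less for inputs
        elif s.idle_starved == 0 and s.input_fill >= self.high_water:
            share += self.small_step  # always busy with a surplus of input
        f.processing_share = min(self.max_share, max(self.min_share, share))


def sweep_budgets(pipeline: list[Filter],
                  unit_price: float) -> list[tuple[float, float]]:
    """Walk from the final filter back to the first, splitting each filter's
    income per output unit into (processor budget, price paid per input).
    Assumes a linear pipeline that consumes one input per output item."""
    budgets: list[tuple[float, float]] = []
    income = unit_price  # the final filter is paid the market unit price
    for f in reversed(pipeline):
        processor = income * f.processing_share
        input_price = income - processor
        budgets.append((processor, input_price))
        income = input_price  # becomes the upstream producer's income
    budgets.reverse()  # report in pipeline order, first filter first
    return budgets


pipeline = [Filter("A"), Filter("B"), Filter("C")]
print(sweep_budgets(pipeline, unit_price=8.0))
# [(1.0, 1.0), (2.0, 2.0), (4.0, 4.0)]
```

Calling `policy.update(filter, stats)` for every filter at each checkpoint and then re-running `sweep_budgets` would propagate the adjusted shares upstream. Clamping the share keeps any filter from spending its whole income on one side.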

The interface between the market and the StreamIt simulator is the runtime handler, whose job is to derive the mapping from filters (stream-graph nodes) to computers (cluster-graph nodes). We have implemented a baseline runtime handler, the static load balancer, which maps filters according to the cluster graph produced by the StreamIt compiler. We expect the runtime overhead introduced by the runtime handler to be small compared with the speedup it yields.
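The runtime handler's role as the interface between market and simulator can be sketched as a small protocol, with the static load balancer as its simplest implementation. This is a hypothetical Python sketch: `RuntimeHandler`, `StaticLoadBalancer`, `rebalance` and the node names are illustrative, and the compiler's cluster graph is assumed to be available as a plain filter-to-computer dictionary.

```python
from __future__ import annotations

from typing import Mapping, Protocol


class RuntimeHandler(Protocol):
    """Hypothetical interface: given the latest posted filter prices,
    return a mapping from filter (stream-graph node) to computer
    (cluster-graph node)."""

    def mapping(self, prices: Mapping[str, float]) -> dict[str, str]: ...


class StaticLoadBalancer:
    """Baseline handler: always returns the placement taken from the
    compiler-produced cluster graph and ignores market prices."""

    def __init__(self, cluster_assignment: Mapping[str, str]) -> None:
        self._assignment = dict(cluster_assignment)

    def mapping(self, prices: Mapping[str, float]) -> dict[str, str]:
        return dict(self._assignment)  # copy so callers cannot mutate it


def rebalance(handler: RuntimeHandler,
              prices: Mapping[str, float]) -> dict[str, str]:
    """Assumed hook the simulator would call at each checkpoint."""
    return handler.mapping(prices)


compiler_placement = {"V1": "node0", "V2": "node1", "V3": "node1", "V4": "node2"}
handler = StaticLoadBalancer(compiler_placement)
print(rebalance(handler, {"V2": 3.0}))
```

Keeping the simulator dependent only on the `mapping` method means a price-driven handler can replace the static one without changes elsewhere.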

We have also implemented our first alternative runtime handler, whose mappings can change dynamically during the computation according to the prices that filters post to indicate what they are willing to spend to execute their work on a processor. As in a "natural" market, processors that are faster and less loaded cost the most. This runtime handler is parameterized, and we intend to explore how different parameter regimes affect stream throughput. The initial experimental framework is already in place: the first set of experiments will test simple variations of stream and cluster graphs with the different runtime handlers and disbursement policies.
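One way a price-driven handler could turn posted prices into a mapping is a greedy auction. The sketch below is our own illustration, not the handler described above: `Processor`, `processor_cost`, `market_mapping`, the `rate` parameter and the pricing rule cost = rate × speed / (1 + load) are all assumptions, chosen only so that faster, less loaded processors cost the most.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Processor:
    name: str
    speed: float   # relative speed of the machine
    load: int = 0  # filters already placed on it in this round


def processor_cost(p: Processor, rate: float = 1.0) -> float:
    """Assumed pricing rule: faster and less loaded processors cost more."""
    return rate * p.speed / (1 + p.load)


def market_mapping(prices: dict[str, float],
                   processors: list[Processor],
                   rate: float = 1.0) -> dict[str, str]:
    """Greedy sketch: the highest-paying filter chooses first and takes the
    most expensive processor it can afford; if it cannot afford any, it
    gets the cheapest one. Costs are recomputed after every placement."""
    for p in processors:
        p.load = 0
    mapping: dict[str, str] = {}
    for flt in sorted(prices, key=prices.get, reverse=True):
        bid = prices[flt]
        by_cost = sorted(processors, key=lambda p: processor_cost(p, rate),
                         reverse=True)
        affordable = [p for p in by_cost if processor_cost(p, rate) <= bid]
        choice = affordable[0] if affordable else by_cost[-1]
        choice.load += 1
        mapping[flt] = choice.name
    return mapping


cluster = [Processor("fast", speed=4.0), Processor("slow", speed=1.0)]
posted = {"V1": 1.0, "V2": 5.0, "V3": 0.5, "V4": 3.0}
print(market_mapping(posted, cluster))
# {'V2': 'fast', 'V4': 'fast', 'V1': 'slow', 'V3': 'slow'}
```

Because costs are recomputed after every placement, a processor becomes cheaper as it fills up, so a lower bidder may still land on a fast but shared machine, as V4 does in the example.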
