Active clothing perception

The goal of this project is to build a robot system that can autonomously explore clothing in its natural state. There are three challenges: 1. the framework should work for general objects; 2. the robot should learn multiple properties of the clothing in order to gain a comprehensive understanding of it; 3. the exploration should be conducted autonomously by the robot.

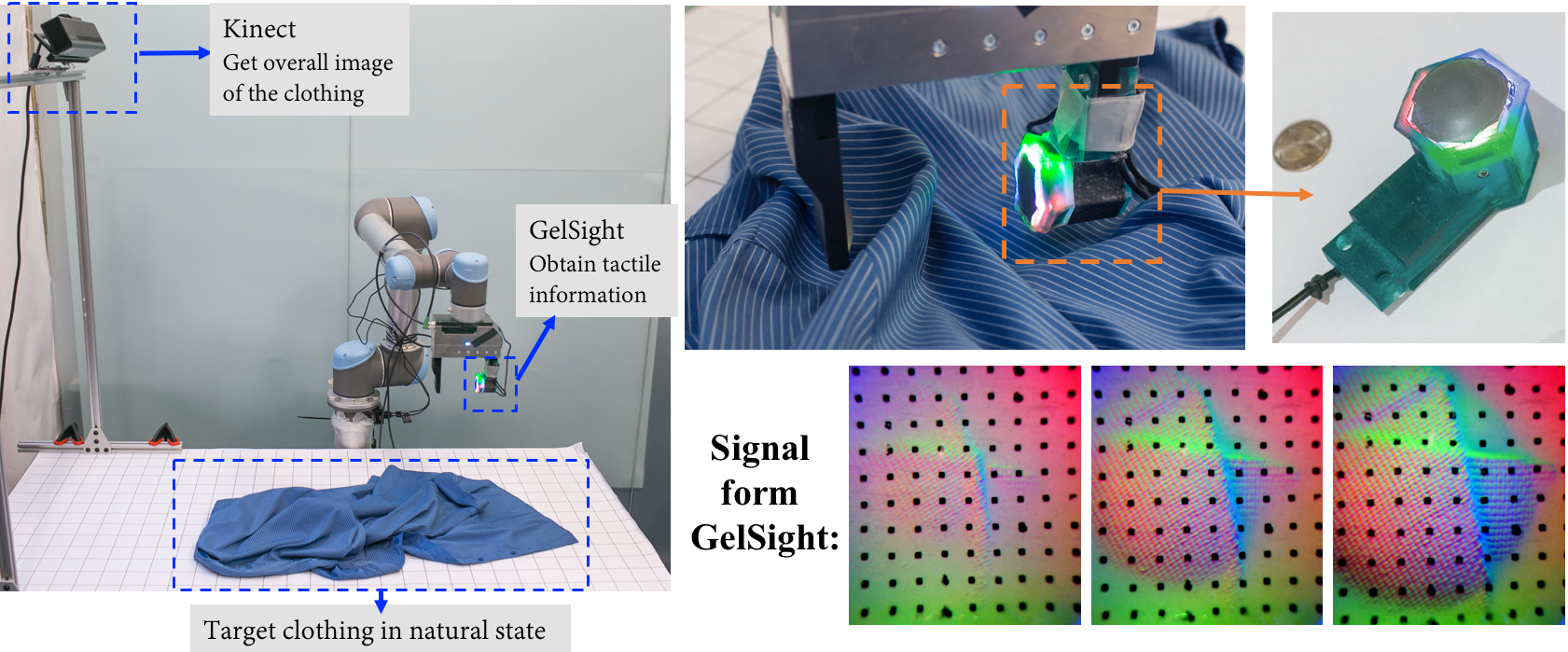

The robot system we built is shown in the following picture: a robot arm with a gripper carrying a GelSight sensor explores the clothing, while an external Kinect sensor captures the shape of the clothing. The robot chooses a point from the Kinect depth image, moves to that point, and squeezes the clothing with the GelSight finger.

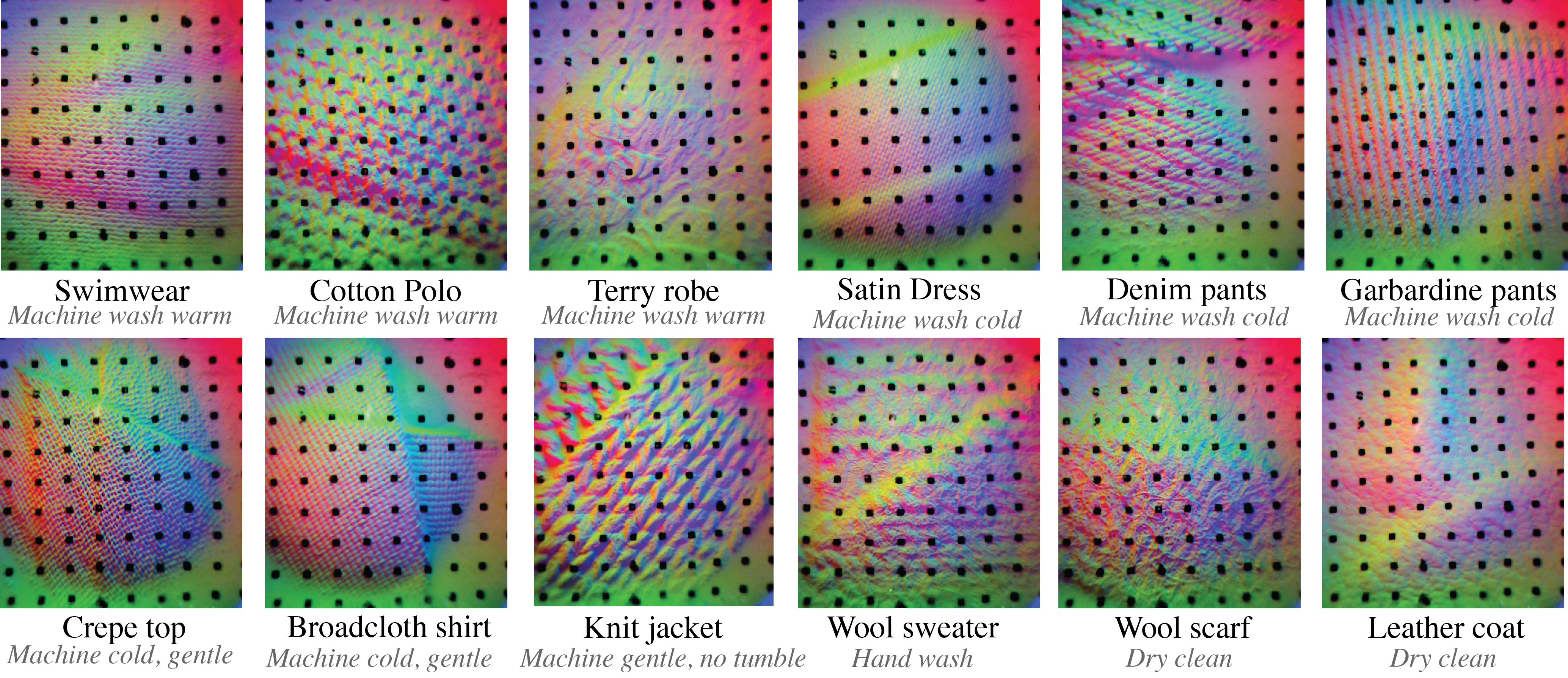

Examples of GelSight images obtained when squeezing different clothes are shown below:

We use convolutional neural networks (CNNs) to recognize the properties from the tactile data. We hand-labeled 11 properties of the clothes, including physical properties such as thickness, fuzziness, softness and durability, and semantic properties such as wearing season and preferred washing method.

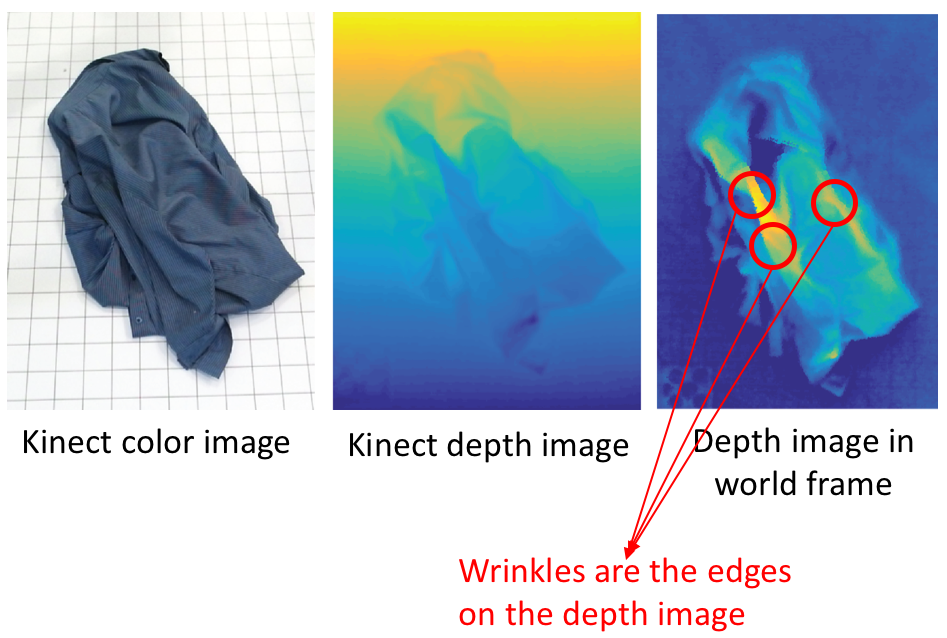

To explore the clothing, we assume that the wrinkles are the best parts to squeeze. We find the wrinkles from the Kinect depth images: as shown in the following example, after the depth image is transformed into world coordinates, the wrinkles appear as edges in the depth image. Note that not all wrinkles are good points for exploration, so we further evaluate the candidate locations using a CNN.

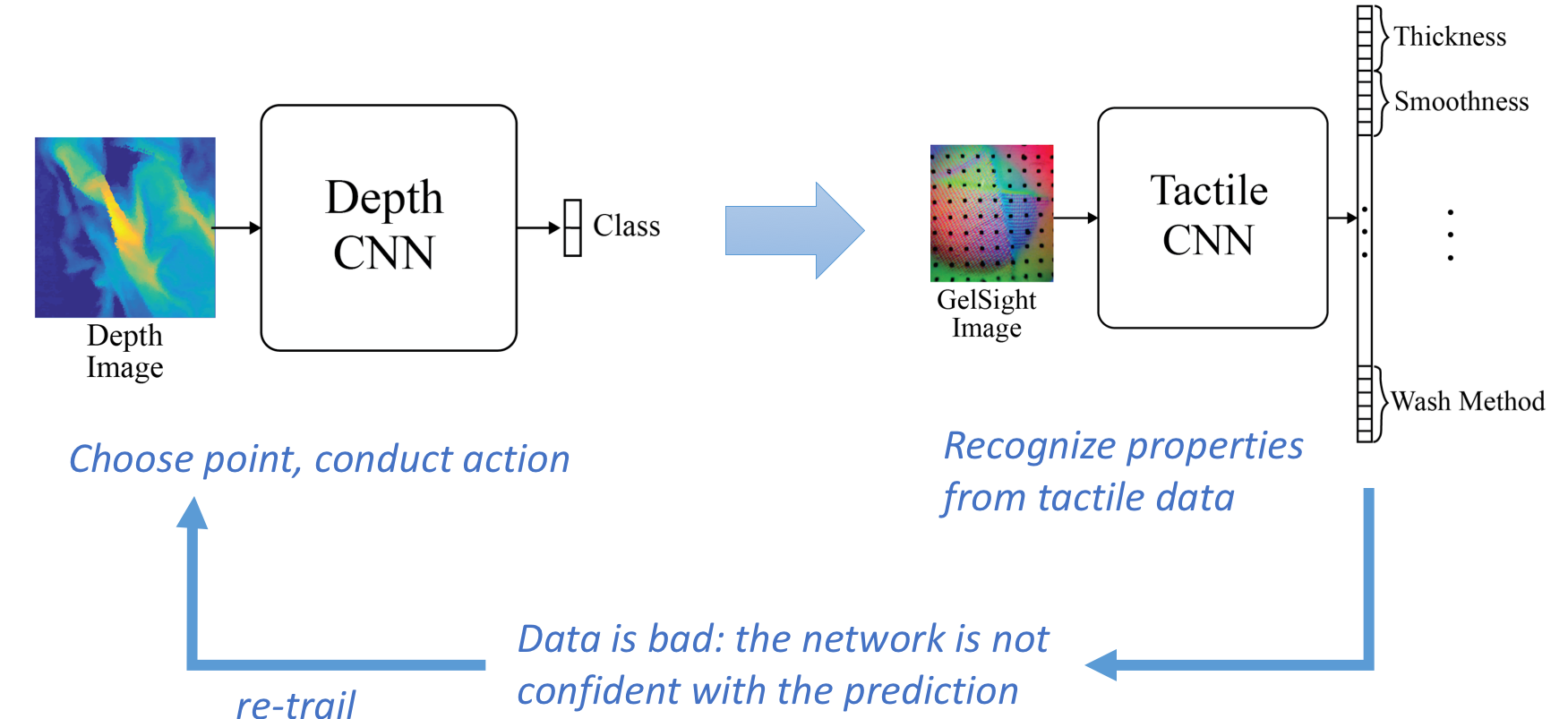
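
The idea that wrinkles show up as edges in the depth image can be illustrated with a small sketch. This is not the project's actual implementation: the function name `wrinkle_candidates`, the gradient-magnitude edge measure, and the `grad_threshold` and `max_points` parameters are all assumptions made for illustration. The input is assumed to be a height map already expressed in world coordinates, and the CNN-based evaluation of each candidate is left out.

```python
import numpy as np


def wrinkle_candidates(height_map, grad_threshold=0.01, max_points=5):
    """Return up to max_points (row, col) pixels with the strongest height edges.

    Hypothetical helper: a plain gradient-magnitude threshold stands in for
    whatever edge detector the real system uses.
    """
    # Finite-difference gradient of the height along rows (gy) and columns (gx).
    gy, gx = np.gradient(np.asarray(height_map, dtype=float))
    edge_strength = np.hypot(gx, gy)

    # Keep only pixels whose height changes faster than the threshold.
    rows, cols = np.nonzero(edge_strength > grad_threshold)

    # Sort the surviving pixels from strongest to weakest edge.
    order = np.argsort(edge_strength[rows, cols])[::-1][:max_points]
    return [(int(rows[i]), int(cols[i])) for i in order]


# A flat cloth with a single ridge along one column.
cloth = np.zeros((20, 20))
cloth[:, 10] = 0.05
candidates = wrinkle_candidates(cloth)
```

In this toy example the candidates fall on the two flanks of the ridge, where the height changes most quickly. A real pipeline would then pass each candidate to a learned scorer before the robot commits to squeezing it.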

After each exploration, we also evaluate the quality of the obtained tactile data. If the data is poor and the robot is not confident about the property estimation result, it generates a new exploration and tries again. The loop is shown in the following diagram:

With the re-trial mechanism, the robot gets better property estimation results. Here is an example of the robot sorting laundry into different washing baskets, retrying until it gets good data.
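
The re-trial mechanism can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the project's code: `explore_with_retries`, the three callables `propose_point`, `squeeze` and `estimate`, and the `max_trials` and `min_confidence` parameters are hypothetical names. The sketch also collapses the data-quality check and the estimation confidence into a single score, which may differ from how the real system combines them.

```python
def explore_with_retries(propose_point, squeeze, estimate,
                         max_trials=5, min_confidence=0.8):
    """Repeat exploration until the estimate is confident enough.

    Hypothetical interface:
      propose_point() -> an exploration location
      squeeze(point)  -> tactile data collected at that location
      estimate(data)  -> (label, confidence), with confidence in [0, 1]
    Returns (label, confidence, trial_number) for the best trial seen.
    """
    best = None
    for trial in range(1, max_trials + 1):
        point = propose_point()
        tactile = squeeze(point)
        label, confidence = estimate(tactile)

        # Remember the most confident result in case no trial passes.
        if best is None or confidence > best[1]:
            best = (label, confidence, trial)

        # Stop as soon as the result is good enough.
        if confidence >= min_confidence:
            break
    return best


# Stub components: the confidence improves on later trials.
scores = iter([0.3, 0.5, 0.9, 0.95])
result = explore_with_retries(
    propose_point=lambda: (0, 0),
    squeeze=lambda point: "tactile-frame",
    estimate=lambda data: ("machine wash", next(scores)),
)
```

With these stubs the loop stops at the third trial, the first one whose confidence reaches the threshold. Capping the number of trials keeps the robot from squeezing indefinitely on clothing that never yields good data.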